Stein ICP for Uncertainty Estimation in Point Cloud Matching

Paper and Code

Jun 07, 2021

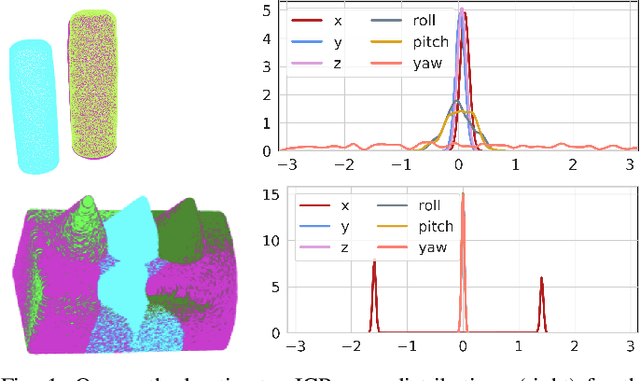

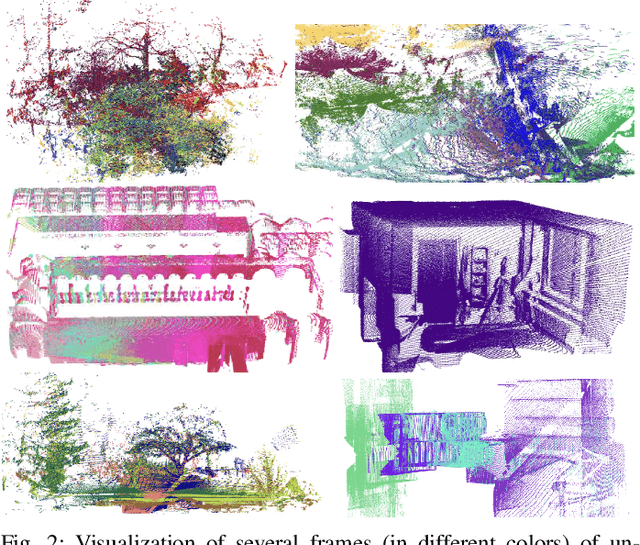

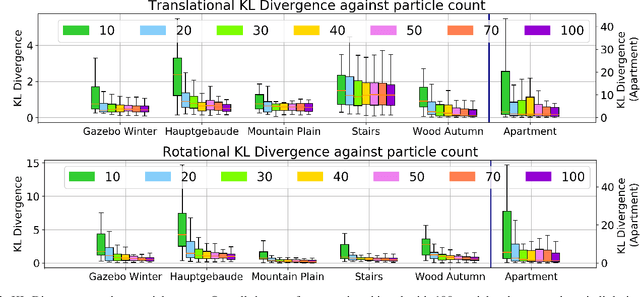

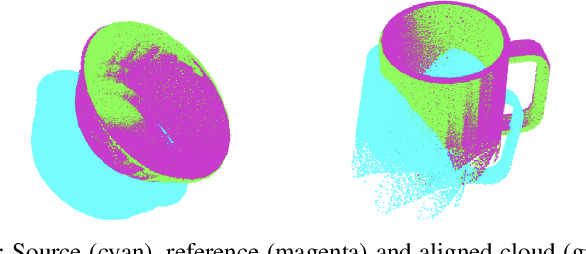

Quantification of uncertainty in point cloud matching is critical in many tasks such as pose estimation, sensor fusion, and grasping. Iterative closest point (ICP) is a commonly used pose estimation algorithm which provides a point estimate of the transformation between two point clouds. There are many sources of uncertainty in this process that may arise due to sensor noise, ambiguous environment, and occlusion. However, for safety critical problems such as autonomous driving, a point estimate of the pose transformation is not sufficient as it does not provide information about the multiple solutions. Current probabilistic ICP methods usually do not capture all sources of uncertainty and may provide unreliable transformation estimates which can have a detrimental effect in state estimation or decision making tasks that use this information. In this work we propose a new algorithm to align two point clouds that can precisely estimate the uncertainty of ICP's transformation parameters. We develop a Stein variational inference framework with gradient based optimization of ICP's cost function. The method provides a non-parametric estimate of the transformation, can model complex multi-modal distributions, and can be effectively parallelized on a GPU. Experiments using 3D kinect data as well as sparse indoor/outdoor LiDAR data show that our method is capable of efficiently producing accurate pose uncertainty estimates.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge