Stability of Deep Neural Networks via discrete rough paths

Paper and Code

Jan 19, 2022

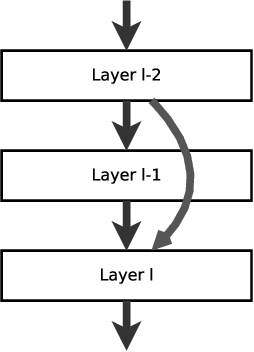

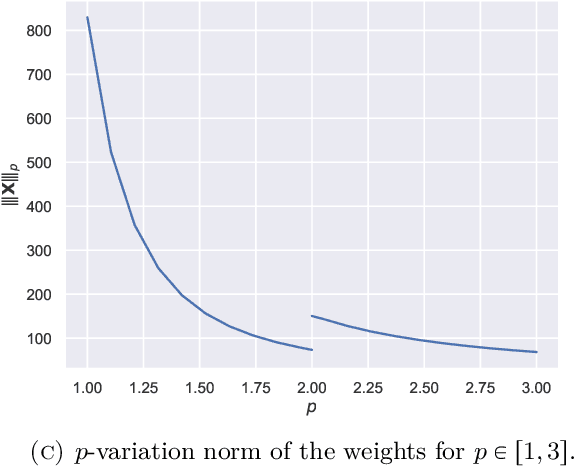

Using rough path techniques, we provide a priori estimates for the output of Deep Residual Neural Networks in terms of both the input data and the (trained) network weights. As trained network weights are typically very rough when seen as functions of the layer, we propose to derive stability bounds in terms of the total $p$-variation of trained weights for any $p\in[1,3]$. Unlike the $C^1$-theory underlying the neural ODE literature, our estimates remain bounded even in the limiting case of weights behaving like Brownian motions, as suggested in [arXiv:2105.12245]. Mathematically, we interpret residual neural network as solutions to (rough) difference equations, and analyse them based on recent results of discrete time signatures and rough path theory.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge