SS-SAM : Stochastic Scheduled Sharpness-Aware Minimization for Efficiently Training Deep Neural Networks

Paper and Code

Mar 18, 2022

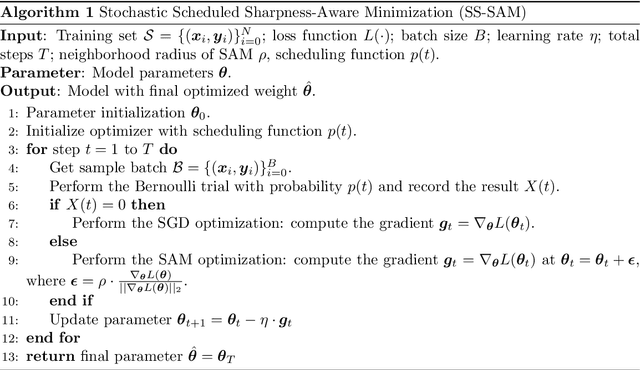

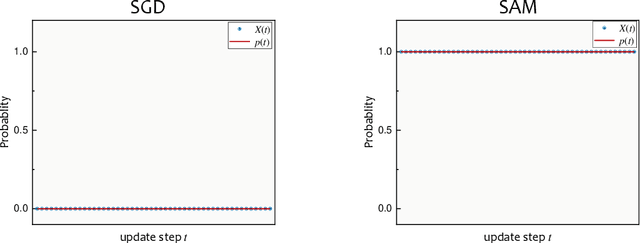

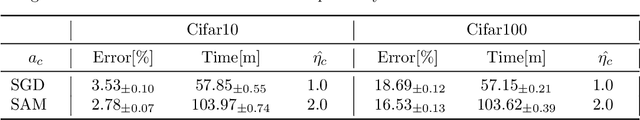

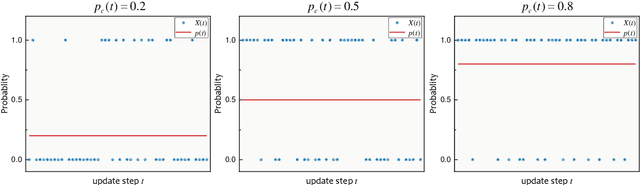

By driving optimizers to converge to flat minima, sharpness-aware minimization (SAM) has shown the power to improve the model generalization. However, SAM requires to perform two forward-backward propagations for one parameter update, which largely burdens the practical computation. In this paper, we propose a novel and efficient training scheme, called Stochastic Scheduled SAM (SS-SAM). Specifically, in SS-SAM, the optimizer is arranged by a predefined scheduling function to perform a random trial at each update step, which would randomly select to perform the SGD optimization or the SAM optimization. In this way, the overall count of propagation pair could be largely reduced. Then, we empirically investigate four typical types of scheduling functions, and demonstrates the computational efficiency and their impact on model performance respectively. We show that with proper scheduling functions, models could be trained to achieve comparable or even better performance with much lower computation cost compared to models trained with only SAM training scheme.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge