Split to Be Slim: An Overlooked Redundancy in Vanilla Convolution

Jun 22, 2020

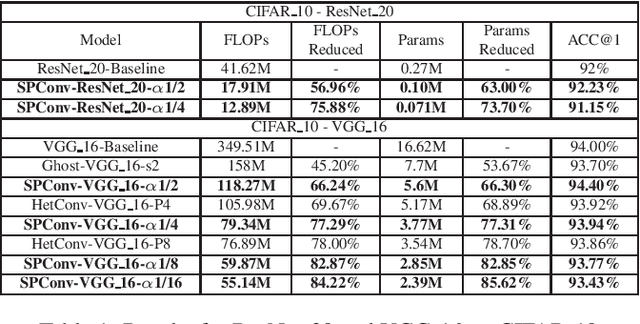

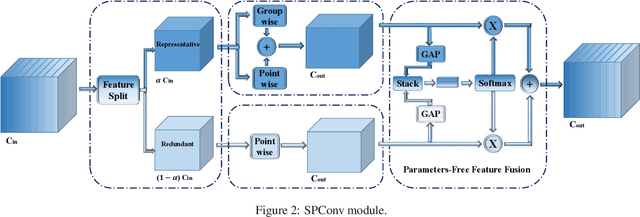

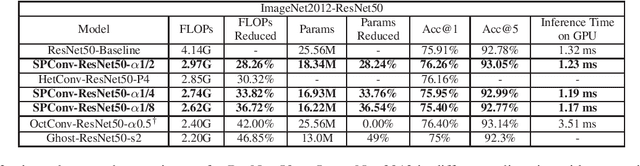

Many effective solutions have been proposed to reduce the redundancy of models for inference acceleration. Nevertheless, common approaches mostly focus on eliminating less important filters or constructing efficient operations, while ignoring the pattern redundancy in feature maps. We reveal that many feature maps within a layer share similar but not identical patterns. However, it is difficult to identify whether features with similar patterns are redundant or contain essential details. Therefore, instead of directly removing uncertain redundant features, we propose a split-based convolutional operation, namely SPConv, which tolerates features with similar patterns but requires less computation. Specifically, we split input feature maps into a representative part and an uncertain redundant part: intrinsic information is extracted from the representative part through relatively heavy computation, while tiny hidden details in the uncertain redundant part are processed with a lightweight operation. To recalibrate and fuse these two groups of processed features, we propose a parameter-free feature fusion module. Moreover, SPConv is formulated to replace the vanilla convolution in a plug-and-play way. Without any bells and whistles, experimental results on benchmarks demonstrate that SPConv-equipped networks consistently outperform state-of-the-art baselines in both accuracy and inference time on GPU, with FLOPs and parameters dropped sharply.
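To give a rough sense of why splitting the input channels can cut parameters, the sketch below counts the weights of a vanilla convolution against a two-branch split. It is only an illustration under stated assumptions: the abstract does not specify the kernel sizes or the split ratio, so the 3x3 "heavy" branch, the 1x1 "light" branch, the ratio `alpha`, and the helper names `conv_params` and `split_conv_params` are all hypothetical choices, not the authors' design.

```python
def conv_params(c_in, c_out, k):
    """Number of weights in a k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def split_conv_params(c_in, c_out, alpha=0.5, k=3):
    """Weights for a hypothetical two-branch split convolution.

    Assumptions (not taken from the abstract):
      - a fraction `alpha` of input channels forms the representative part,
        processed by a k x k convolution (the "heavy" branch);
      - the remaining channels form the uncertain redundant part,
        processed by a 1 x 1 convolution (the "light" branch);
      - the fusion step adds no parameters.
    """
    c_rep = int(c_in * alpha)   # representative channels
    c_red = c_in - c_rep        # uncertain redundant channels
    heavy = conv_params(c_rep, c_out, k)
    light = conv_params(c_red, c_out, 1)
    return heavy + light


vanilla = conv_params(64, 64, 3)
split = split_conv_params(64, 64, alpha=0.5, k=3)
print(vanilla, split, round(split / vanilla, 3))
```

With 64 input and 64 output channels and an even split, this particular configuration needs a little over half the weights of the vanilla layer. The actual savings reported for SPConv depend on design details that the abstract does not give.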
