Spiking Neural Networks for Visual Place Recognition via Weighted Neuronal Assignments


Sep 14, 2021

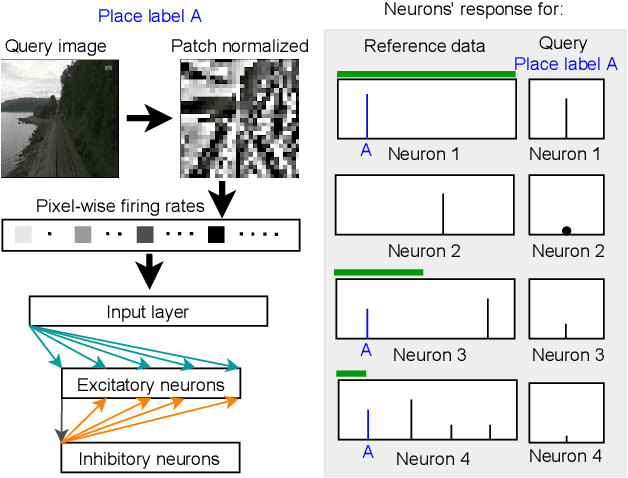

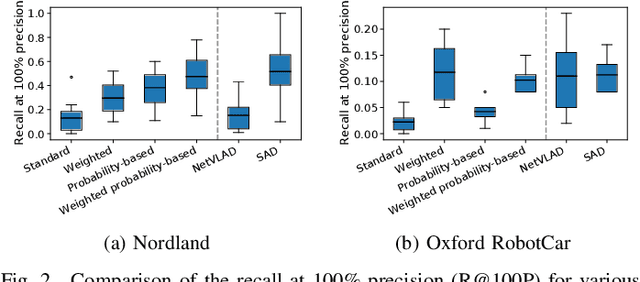

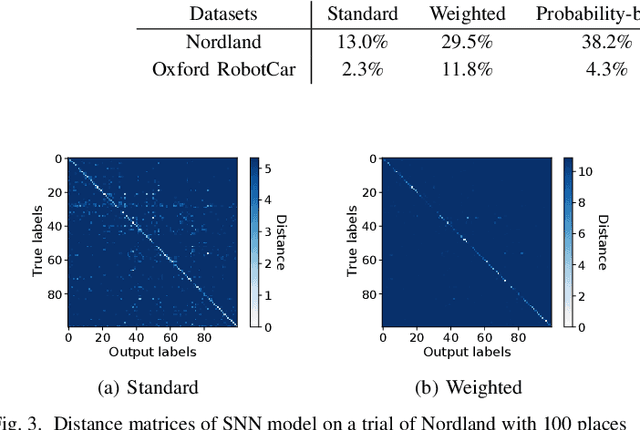

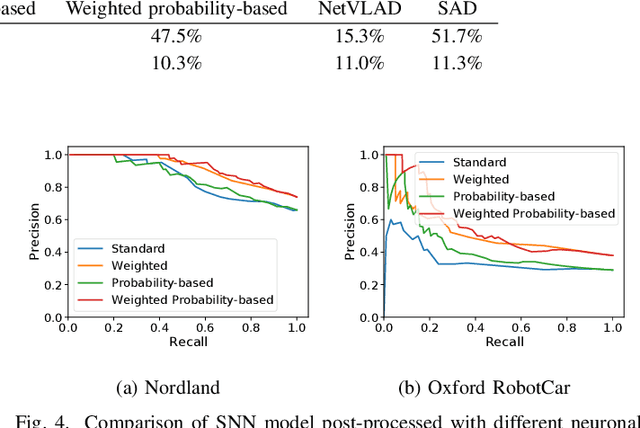

Spiking neural networks (SNNs) offer compelling potential advantages, including energy efficiency and low latency, but also pose challenges, including the non-differentiable nature of event spikes. Much of the initial research in this area has converted deep neural networks into equivalent SNNs, but this conversion approach may negate some of the advantages of SNN-based approaches developed from scratch. One promising area for high-performance SNNs is template matching and image recognition. This research introduces the first high-performance SNN for the Visual Place Recognition (VPR) task: given a query image, the SNN has to find the closest match out of a list of reference images. At the core of this new system is a novel assignment scheme that implements a form of ambiguity-informed salience by up-weighting single-place-encoding neurons and down-weighting "ambiguous" neurons that respond to multiple different reference places. In a range of experiments on the challenging Oxford RobotCar and Nordland datasets, we show that our SNN achieves VPR performance comparable to state-of-the-art and classical techniques, and that its performance degrades gracefully as the number of reference places increases. Our results provide a significant milestone towards SNNs that can provide robust, energy-efficient and low-latency robot localization.
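The idea of up-weighting single-place neurons and down-weighting ambiguous ones can be illustrated with a small sketch. This is not the paper's actual assignment scheme: the weighting rule (the fraction of a neuron's total response that falls on its strongest place), the function names `assign_neurons` and `match_place`, and the spike counts are all assumptions chosen for illustration.

```python
def assign_neurons(responses):
    """Assign each neuron to one reference place and give it a weight.

    responses[n][p] is a hypothetical spike count of neuron n for
    reference place p, recorded in an assumed calibration pass.
    Assumed weighting rule: the share of the neuron's total spikes that
    falls on its strongest place (1.0 for a single-place neuron, lower
    for a neuron that responds to several places).
    """
    assignments = []
    for counts in responses:
        total = sum(counts)
        if total == 0:
            # Silent neuron: no assignment, contributes nothing.
            assignments.append((None, 0.0))
            continue
        place = max(range(len(counts)), key=lambda p: counts[p])
        assignments.append((place, counts[place] / total))
    return assignments


def match_place(query_spikes, assignments, num_places):
    """Score each reference place by the weighted spikes of its neurons."""
    scores = [0.0] * num_places
    for spikes, (place, weight) in zip(query_spikes, assignments):
        if place is not None:
            scores[place] += weight * spikes
    best = max(range(num_places), key=lambda p: scores[p])
    return best, scores


# Illustrative (made-up) calibration responses: 4 neurons, 3 places.
responses = [
    [9, 0, 0],  # responds to place 0 only
    [4, 4, 1],  # ambiguous: responds to places 0 and 1 about equally
    [0, 8, 0],  # responds to place 1 only
    [0, 1, 7],  # mostly place 2
]
assignments = assign_neurons(responses)

# Made-up spike counts for one query image.
query_spikes = [2, 5, 6, 0]
best, scores = match_place(query_spikes, assignments, num_places=3)
print(best, scores)
```

In this toy data, an unweighted sum would favour place 0 (2 + 5 = 7 spikes against 6 for place 1), because the ambiguous neuron is counted in full. With the assumed weights, the ambiguous neuron contributes less than half of its spikes, and place 1 is selected instead.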
