Spike Calibration: Fast and Accurate Conversion of Spiking Neural Network for Object Detection and Segmentation


Jul 06, 2022

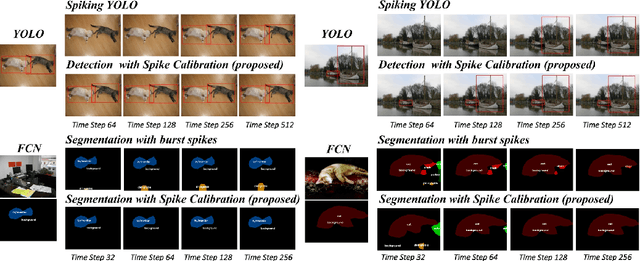

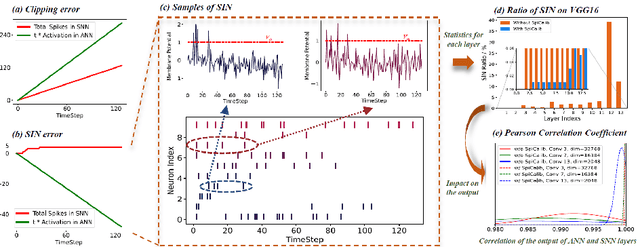

Spiking neural networks (SNNs) have attracted great attention because of their high biological plausibility and low energy consumption on neuromorphic hardware. As an efficient way to obtain deep SNNs, ANN-to-SNN conversion has achieved high performance on various large-scale datasets. However, it typically suffers from severe performance degradation and high latency. In particular, most previous work focuses on simple classification tasks and ignores the precise approximation of the ANN output. In this paper, we first theoretically analyze the conversion errors and derive the harmful effects of time-varying extremes on synaptic currents. We propose Spike Calibration (SpiCalib) to eliminate the damage that discrete spikes cause to the output distribution, and we modify LIPooling to allow lossless conversion of arbitrary MaxPooling layers. Moreover, we propose Bayesian optimization of the normalization parameters to avoid empirical settings. Experimental results demonstrate state-of-the-art performance on classification, object detection, and segmentation tasks. To the best of our knowledge, this is the first time that SNNs comparable to ANNs have been obtained on these tasks simultaneously. Moreover, we need only 1/50 of the inference time of previous work on the detection task, and achieve the same performance at 0.492$\times$ the energy consumption of the ANN on the segmentation task.
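As background for the latency and accuracy trade-off described above, the following is a minimal sketch of generic rate-coded conversion, not the SpiCalib method itself. It assumes a single integrate-and-fire neuron with reset-by-subtraction driven by a constant input current; the names `if_neuron_rate` and `clipped_relu` and all parameter values are hypothetical and chosen only for illustration. Under these assumptions, the rate-decoded output approximates a ReLU clipped at the firing threshold, with an error of at most `threshold / timesteps`, which is why a precise approximation of the ANN output tends to require many time steps.

```python
def if_neuron_rate(current, threshold=1.0, timesteps=32):
    """Simulate an integrate-and-fire neuron with reset-by-subtraction.

    Assumption: the input current is constant over all time steps.
    Returns the rate-decoded output: spike_count * threshold / timesteps.
    """
    membrane = 0.0
    spikes = 0
    for _ in range(timesteps):
        membrane += current          # integrate the input current
        if membrane >= threshold:    # fire when the threshold is reached
            membrane -= threshold    # soft reset keeps the residual charge
            spikes += 1
    return spikes * threshold / timesteps


def clipped_relu(x, threshold=1.0):
    """ANN activation that the rate-coded neuron approximates."""
    return min(max(x, 0.0), threshold)


if __name__ == "__main__":
    # The approximation error shrinks as the number of time steps grows.
    x = 0.3
    for timesteps in (4, 16, 64, 256):
        error = abs(if_neuron_rate(x, 1.0, timesteps) - clipped_relu(x))
        print(f"T={timesteps:4d}  error={error:.4f}")
```

The sketch only shows the discretization error of one neuron with a static input; the time-varying synaptic currents analyzed in the paper are outside its scope.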
