Sparsifying Sparse Representations for Passage Retrieval by Top-$k$ Masking


Dec 17, 2021

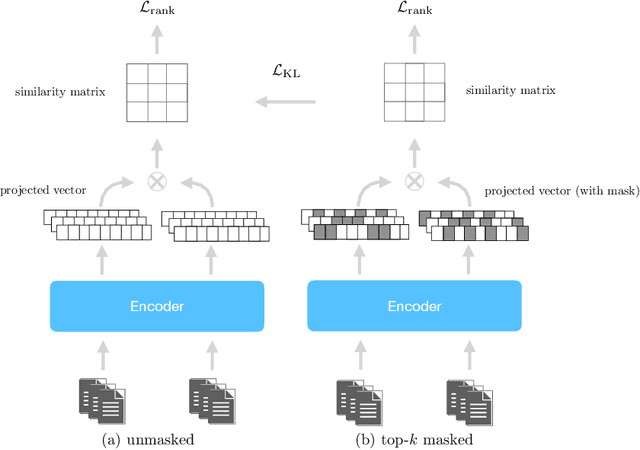

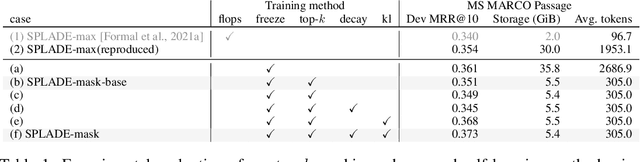

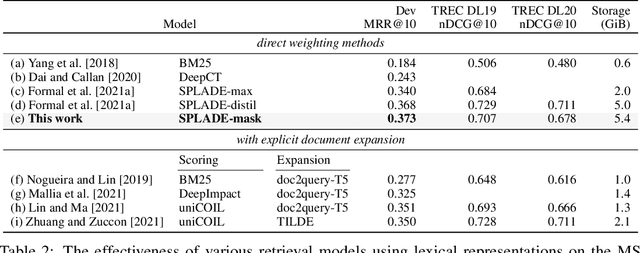

Sparse lexical representation learning has made considerable progress in improving passage retrieval effectiveness, as shown by recent models such as DeepImpact, uniCOIL, and SPLADE. This paper describes a straightforward yet effective approach for sparsifying lexical representations for passage retrieval. It builds on SPLADE by introducing a top-$k$ masking scheme to control sparsity and a self-learning method that coaxes masked representations to mimic unmasked ones. A basic implementation of our model is competitive with more sophisticated approaches and achieves a good balance between effectiveness and efficiency. The simplicity of our methods opens the door for future explorations in lexical representation learning for passage retrieval.
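
To make the idea of top-$k$ masking concrete, the following is a minimal sketch, not the authors' implementation. It assumes a passage is represented as a one-dimensional vector of non-negative term weights over the vocabulary, and that masking simply keeps the $k$ largest weights and zeroes the rest. The function name `top_k_mask`, the toy vector `vocab_weights`, and the choice of NumPy are illustrative assumptions; the abstract does not specify these details.

```python
import numpy as np


def top_k_mask(weights: np.ndarray, k: int) -> np.ndarray:
    """Keep the k largest entries of a 1-D weight vector and zero the rest.

    Hypothetical helper for illustration; not taken from the paper's code.
    """
    if k <= 0:
        return np.zeros_like(weights)
    if k >= weights.shape[0]:
        return weights.copy()
    # argpartition places the indices of the k largest values in the last
    # k positions (in no particular order) without a full sort.
    keep = np.argpartition(weights, -k)[-k:]
    masked = np.zeros_like(weights)
    masked[keep] = weights[keep]
    return masked


# Toy "vocabulary" of 8 terms with assumed term weights.
vocab_weights = np.array([0.0, 2.5, 0.1, 1.7, 0.0, 0.9, 3.2, 0.4])
sparse = top_k_mask(vocab_weights, k=3)
print(sparse)
```

In this sketch, $k$ directly bounds the number of non-zero terms per representation, which is what makes sparsity controllable. When several weights tie at the cutoff, which of them survive is arbitrary here.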

* 8 pages, 1 figure
