SpaceE: Knowledge Graph Embedding by Relational Linear Transformation in the Entity Space

Paper and Code

Apr 21, 2022

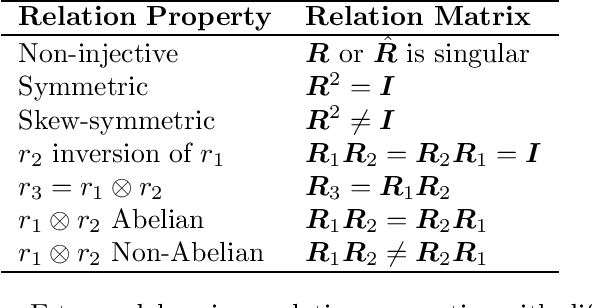

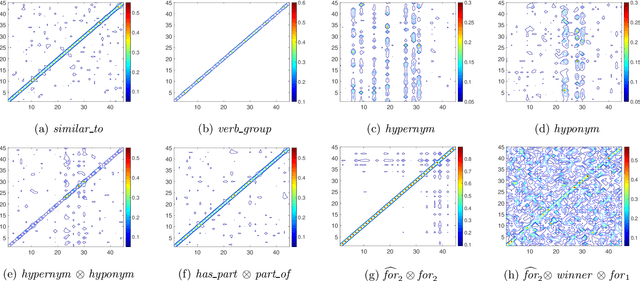

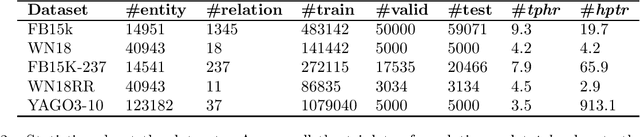

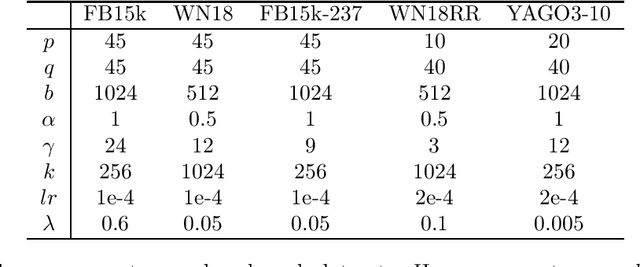

Translation-distance-based knowledge graph embedding (KGE) methods, such as TransE and RotatE, model the relations in a knowledge graph as translations or rotations in the vector space. Both translation and rotation are injective; that is, translating or rotating different vectors always yields different vectors. In knowledge graphs, however, different entities may have the same relation with a single entity; for example, many actors star in one movie. Such non-injective relation patterns cannot be modeled well by the translation or rotation operations in existing translation-distance-based KGE methods. To address this challenge, we propose SpaceE, a translation-distance-based KGE method that models relations as linear transformations. SpaceE embeds both the entities and the relations of a knowledge graph as matrices, and it naturally models non-injective relations with singular linear transformations. We theoretically demonstrate that SpaceE is a fully expressive model that can infer multiple desired relation patterns, including symmetry, skew-symmetry, inversion, Abelian composition, and non-Abelian composition. Experimental results on link prediction datasets show that SpaceE substantially outperforms many previous translation-distance-based KGE methods, especially on datasets with many non-injective relations. The code is available and is built on the PaddlePaddle deep learning platform (https://www.paddlepaddle.org.cn).
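The injectivity argument in the abstract can be illustrated with a small NumPy sketch. This is not the authors' PaddlePaddle implementation: the helper names (`translate`, `linear_transform`, `distance`), the embedding size `k`, the right-multiplication order `H @ R`, and the use of the Frobenius norm are all assumptions made for illustration. It shows that a translation keeps two distinct head entities apart, while a singular (rank-deficient) relation matrix can map both to the same point.

```python
import numpy as np


def translate(h, r):
    """TransE-style relation: add a relation offset to the head embedding."""
    return h + r


def linear_transform(h, R):
    """Relation as a linear map applied to a matrix-valued head embedding.

    The multiplication order (h @ R) is an assumption for this sketch.
    """
    return h @ R


def distance(a, b):
    """Frobenius distance between two matrix embeddings (assumed metric)."""
    return np.linalg.norm(a - b)


rng = np.random.default_rng(0)
k = 3  # hypothetical embedding size

# Two distinct head entities (say, two actors), each a k x k matrix.
# They differ only in their last column.
h1 = rng.normal(size=(k, k))
h2 = h1.copy()
h2[:, -1] += 1.0

# Translation is injective: distinct heads stay distinct after the shift.
r = rng.normal(size=(k, k))
trans_gap = distance(translate(h1, r), translate(h2, r))

# A singular relation matrix of rank k - 1. Right-multiplying by it zeroes
# the last column, so the difference between h1 and h2 is discarded.
R = np.diag([1.0, 1.0, 0.0])
linear_gap = distance(linear_transform(h1, R), linear_transform(h2, R))

print("rank of R:", np.linalg.matrix_rank(R))
print("gap after translation:", trans_gap)
print("gap after singular linear map:", linear_gap)
```

Under these assumptions, both heads can sit at zero distance from one shared tail (the movie) under the singular map, which a pure translation cannot achieve for two distinct heads.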
