SPA-VAE: Similar-Parts-Assignment for Unsupervised 3D Point Cloud Generation

Paper and Code

Mar 15, 2022

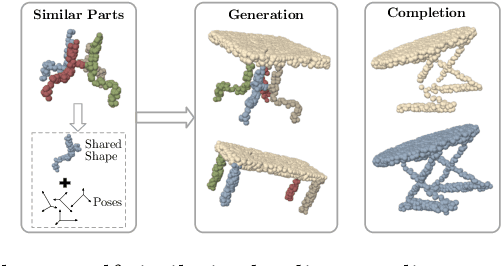

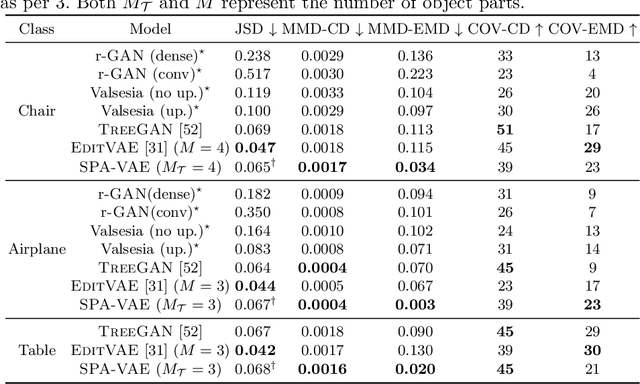

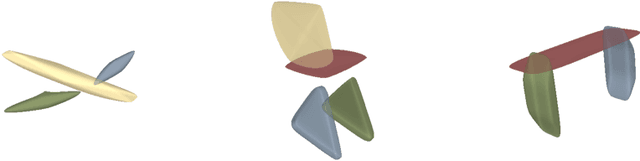

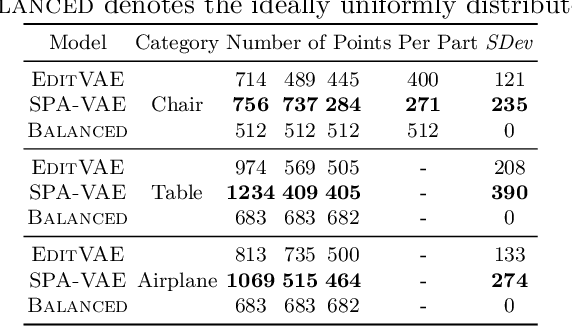

This paper addresses the problem of unsupervised parts-aware point cloud generation with learned parts-based self-similarity. Our SPA-VAE infers a set of latent canonical candidate shapes for any given object, along with a set of rigid-body transformations that map each candidate shape to one or more locations within the assembled object. In this way, noisy samples on the surface of, say, each leg of a table are effectively combined to estimate a single leg prototype. When parts-based self-similarity exists in the raw data, sharing data among parts in this way confers several advantages: modeling accuracy, appropriately self-similar generative outputs, precise in-filling of occlusions, and model parsimony. SPA-VAE is trained end-to-end using a variational Bayesian approach that applies the Gumbel-softmax trick to the shared part assignments, along with various novel losses that provide appropriate inductive biases. Quantitative and qualitative analyses on ShapeNet demonstrate the advantage of SPA-VAE.
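The two mechanisms named in the abstract, a Gumbel-softmax relaxation for discrete part assignments and rigid-body placement of a shared prototype, can be illustrated with a minimal sketch. This is not the SPA-VAE implementation: the function names (`gumbel_softmax`, `rigid_transform`, `rot_z`, `assemble`), the temperature `tau`, and the toy prototypes are all assumptions made for illustration, and the sketch uses a hard argmax at assembly time where a trained model would keep the relaxed weights for gradient flow.

```python
import math
import random


def gumbel_softmax(logits, tau=0.5, rng=random):
    """Draw a relaxed one-hot sample over len(logits) categories.

    Illustrative only: adds Gumbel(0, 1) noise to each logit, divides by the
    temperature tau, and applies a numerically stable softmax.
    """
    noisy = []
    for logit in logits:
        # Clamp the uniform draw away from 0 and 1 so both logs are finite.
        u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
        gumbel = -math.log(-math.log(u))
        noisy.append((logit + gumbel) / tau)
    peak = max(noisy)
    exps = [math.exp(v - peak) for v in noisy]
    total = sum(exps)
    return [e / total for e in exps]


def rigid_transform(points, rotation, translation):
    """Apply x -> R x + t to every 3D point (R is a 3x3 nested list)."""
    return [
        tuple(
            sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
            for i in range(3)
        )
        for p in points
    ]


def rot_z(theta):
    """Rotation by theta radians about the z axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def assemble(prototypes, slot_logits, poses, tau=0.5, rng=random):
    """Place one prototype per part slot (hypothetical assembly step).

    Each slot samples a relaxed assignment over the candidate prototypes,
    takes the most likely candidate, and positions it with the slot's pose.
    """
    cloud = []
    for logits, (rotation, translation) in zip(slot_logits, poses):
        weights = gumbel_softmax(logits, tau, rng)
        k = max(range(len(weights)), key=weights.__getitem__)
        cloud.extend(rigid_transform(prototypes[k], rotation, translation))
    return cloud
```

For example, a single "leg" prototype could be passed to `assemble` with four slots whose logits all favor that prototype and whose poses differ only in translation, which mirrors the table-leg case described above: one canonical shape, several rigid placements.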
