Soft Robots Learn to Crawl: Jointly Optimizing Design and Control with Sim-to-Real Transfer


Feb 09, 2022

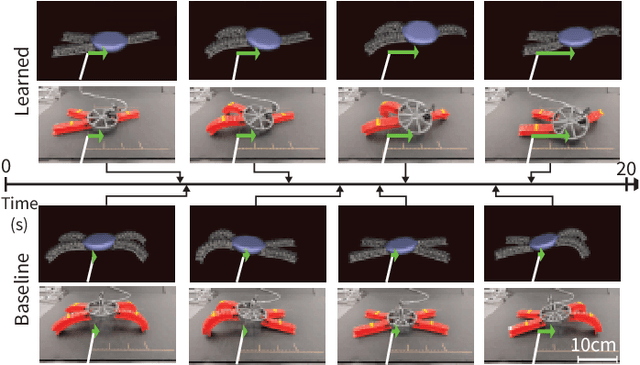

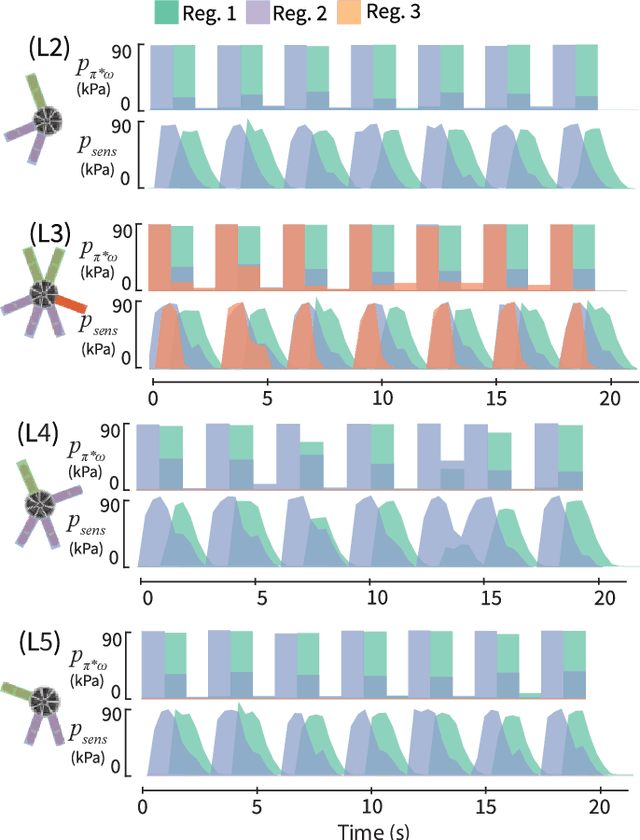

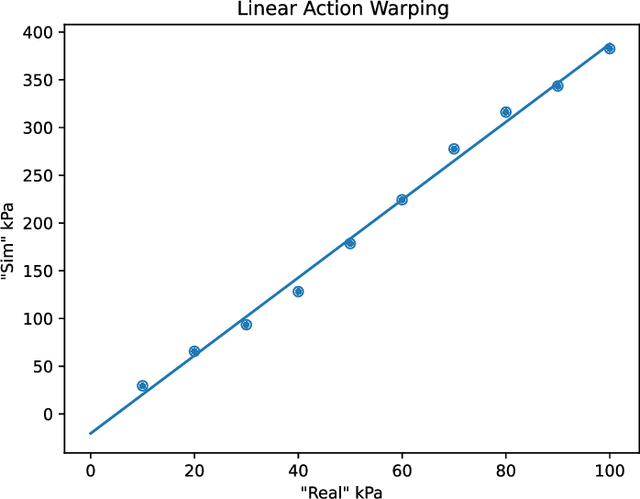

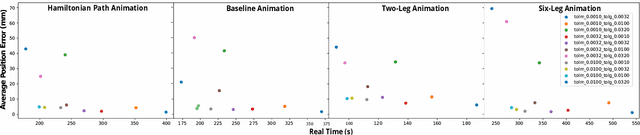

This work provides a complete framework for the simulation, co-optimization, and sim-to-real transfer of the design and control of soft legged robots. The compliance of soft robots provides a form of "mechanical intelligence" -- the ability to passively exhibit behaviors that would otherwise be difficult to program. Exploiting this capacity requires careful consideration of the coupling between mechanical design and control. Co-optimization provides a promising means to generate sophisticated soft robots by reasoning over this coupling. However, the complex nature of soft robot dynamics makes it difficult to provide a simulation environment that is both accurate enough to allow for sim-to-real transfer and fast enough for contemporary co-optimization algorithms. In this work, we show that finite element simulation combined with recent model order reduction techniques provides both the efficiency and the accuracy required to successfully learn effective soft robot design-control pairs that transfer to reality. We propose a reinforcement learning-based framework for co-optimization and demonstrate successful optimization, construction, and zero-shot sim-to-real transfer of several soft crawling robots. Our learned robot outperforms an expert-designed crawling robot, showing that our approach can generate novel, high-performing designs even in well-understood domains.
