SMGRL: A Scalable Multi-resolution Graph Representation Learning Framework

Jan 29, 2022

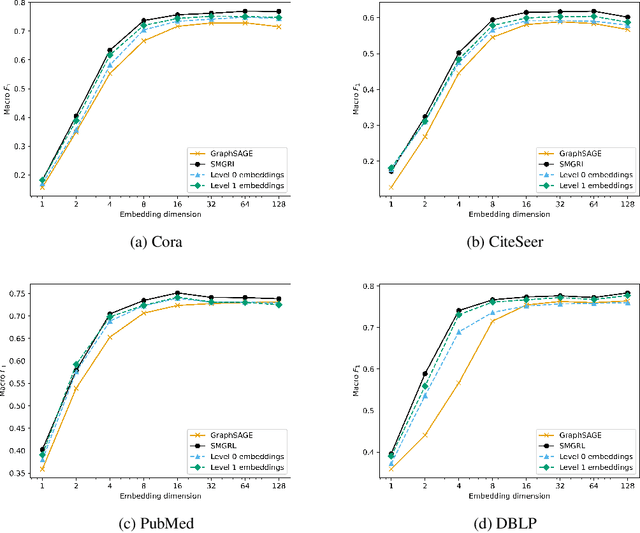

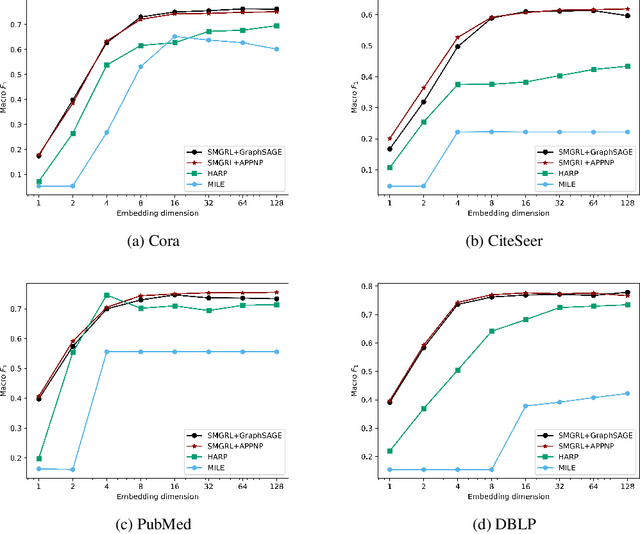

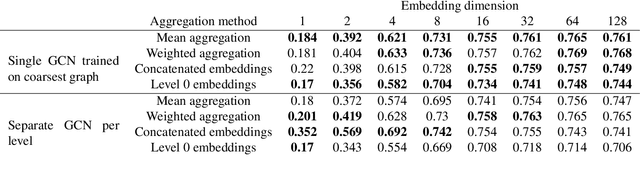

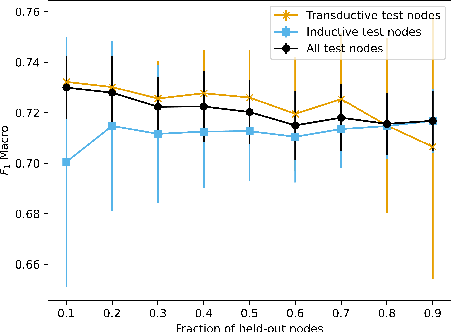

Graph convolutional networks (GCNs) allow us to learn topologically-aware node embeddings, which can be useful for classification or link prediction. However, by construction, they lack positional awareness and cannot capture long-range dependencies without additional layers, which in turn lead to over-smoothing and increased time and space complexity. Further, the complex dependencies between nodes make mini-batching challenging, limiting the applicability of GCNs to large graphs. This paper proposes a Scalable Multi-resolution Graph Representation Learning (SMGRL) framework that enables us to learn multi-resolution node embeddings efficiently. Our framework is model-agnostic and can be applied to any existing GCN model. We dramatically reduce training costs by training only on a reduced-dimension coarsening of the original graph, then exploit self-similarity to apply the resulting algorithm at multiple resolutions. Inference of these multi-resolution embeddings can be distributed across multiple machines to further reduce computational and memory requirements. The resulting multi-resolution embeddings can be aggregated to yield high-quality node embeddings that capture both long- and short-range dependencies between nodes. Our experiments show that this leads to improved classification accuracy without incurring high computational costs.
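The pipeline described in the abstract (coarsen, apply one set of GCN weights at several resolutions, aggregate) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names, the hard cluster `assignment`, the single-layer GCN, the random weight matrix `W` standing in for weights that would be trained on the coarse graph, and aggregation by concatenation are all assumptions made for illustration.

```python
import numpy as np

def normalize_adjacency(A):
    """Symmetric normalization with self-loops: D^{-1/2} (A + I) D^{-1/2}."""
    A_hat = A + np.eye(A.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))
    return A_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def gcn_layer(A, X, W):
    """One GCN layer with ReLU activation."""
    return np.maximum(normalize_adjacency(A) @ X @ W, 0.0)

def coarsen(A, X, assignment, n_clusters):
    """Collapse nodes into clusters given a hard assignment (hypothetical helper).

    Assumes every cluster contains at least one node.
    """
    n = A.shape[0]
    P = np.zeros((n, n_clusters))
    P[np.arange(n), assignment] = 1.0        # P[i, c] = 1 if node i is in cluster c
    A_c = P.T @ A @ P                        # inter-cluster edge counts
    np.fill_diagonal(A_c, 0.0)               # drop intra-cluster edges
    X_c = (P.T @ X) / P.sum(axis=0)[:, None] # mean feature vector per cluster
    return A_c, X_c, P

def multi_resolution_embed(A, X, assignment, n_clusters, W):
    """Apply the same weights at two resolutions and concatenate per node."""
    A_c, X_c, P = coarsen(A, X, assignment, n_clusters)
    H_fine = gcn_layer(A, X, W)          # short-range view on the original graph
    H_coarse = gcn_layer(A_c, X_c, W)    # long-range view on the coarse graph
    H_lifted = P @ H_coarse              # each node inherits its cluster's embedding
    return np.concatenate([H_fine, H_lifted], axis=1)

# Toy example: a 6-node path graph split into two clusters of three nodes.
rng = np.random.default_rng(0)
A = np.zeros((6, 6))
for i in range(5):
    A[i, i + 1] = A[i + 1, i] = 1.0
X = rng.normal(size=(6, 4))              # 4 input features per node
W = rng.normal(size=(4, 3))              # stand-in for weights trained on the coarse graph
assignment = np.array([0, 0, 0, 1, 1, 1])
Z = multi_resolution_embed(A, X, assignment, 2, W)
```

In this sketch the two calls to `gcn_layer` are independent of each other, which is what would let per-resolution inference run on separate machines. A full hierarchy would repeat the coarsening step to obtain more than two resolutions.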
