Small Transformers Compute Universal Metric Embeddings


Sep 14, 2022

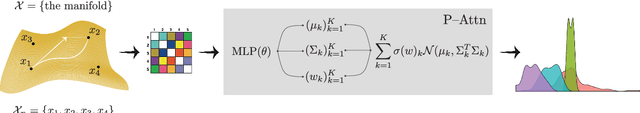

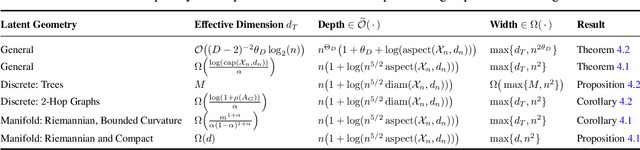

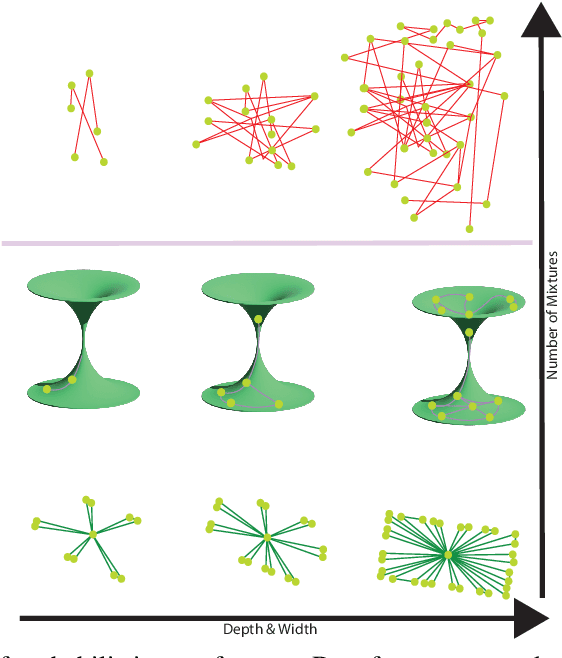

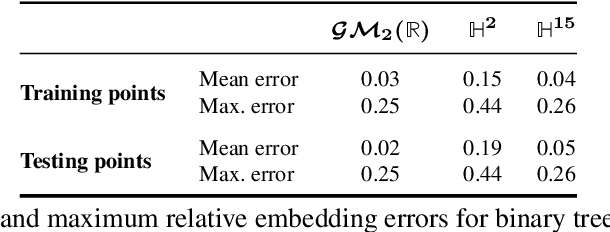

We study representations of data from an arbitrary metric space $\mathcal{X}$ in the space of univariate Gaussian mixtures with a transport metric (Delon and Desolneux 2020). We derive embedding guarantees for feature maps implemented by small neural networks called *probabilistic transformers*. Our guarantees are of memorization type: we prove that a probabilistic transformer of depth about $n\log(n)$ and width about $n^2$ can bi-Hölder embed any $n$-point dataset from $\mathcal{X}$ with low metric distortion, thus avoiding the curse of dimensionality. We further derive probabilistic bi-Lipschitz guarantees which trade off the amount of distortion and the probability that a randomly chosen pair of points embeds with that distortion. If $\mathcal{X}$'s geometry is sufficiently regular, we obtain stronger, bi-Lipschitz guarantees for all points in the dataset. As applications, we derive neural embedding guarantees for datasets from Riemannian manifolds, metric trees, and certain types of combinatorial graphs.
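To make "metric distortion" concrete, the following minimal sketch measures the bi-Lipschitz distortion of a hand-picked embedding of a 4-point cycle graph into single univariate Gaussians. It is an illustration only and not the paper's construction: no probabilistic transformer is involved, the embedding `embed` and the helper names (`w2_gaussian`, `cycle_distance`, `distortion`) are hypothetical, and each point is mapped to a one-component mixture, for which we assume the transport metric reduces to the closed-form 2-Wasserstein distance between Gaussians.

```python
import math
from itertools import combinations


def w2_gaussian(p, q):
    """2-Wasserstein distance between univariate Gaussians given as (mean, std)."""
    (m1, s1), (m2, s2) = p, q
    # Closed form in one dimension: sqrt((m1 - m2)^2 + (s1 - s2)^2).
    return math.hypot(m1 - m2, s1 - s2)


def cycle_distance(i, j, n):
    """Shortest-path distance between vertices i and j on an n-cycle."""
    k = abs(i - j)
    return min(k, n - k)


def distortion(points, d_source, embed, d_target):
    """Bi-Lipschitz distortion: (max expansion) * (max contraction) over all pairs."""
    expansion = 0.0
    contraction = 0.0
    for x, y in combinations(points, 2):
        ratio = d_target(embed[x], embed[y]) / d_source(x, y)
        expansion = max(expansion, ratio)
        contraction = max(contraction, 1.0 / ratio)
    return expansion * contraction


n = 4
points = list(range(n))

# Hypothetical embedding: each vertex becomes a Gaussian (mean, std),
# placed at the corners of a unit square in the (mean, std) half-plane.
embed = {0: (0.0, 1.0), 1: (1.0, 1.0), 2: (1.0, 2.0), 3: (0.0, 2.0)}

result = distortion(points, lambda i, j: cycle_distance(i, j, n), embed, w2_gaussian)
print(result)
```

Adjacent vertices are embedded isometrically here, while opposite vertices (graph distance 2) land at distance $\sqrt{2}$, so the distortion of this toy embedding is $\sqrt{2}$. A distortion of 1 would correspond to an isometric embedding.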
