SLIDE: a surrogate fairness constraint to ensure fairness consistency

Feb 07, 2022

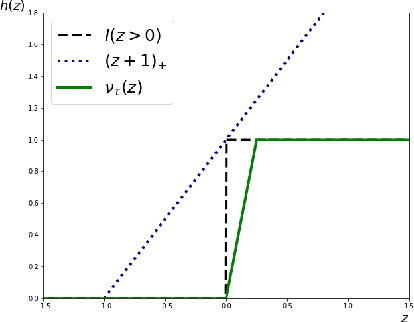

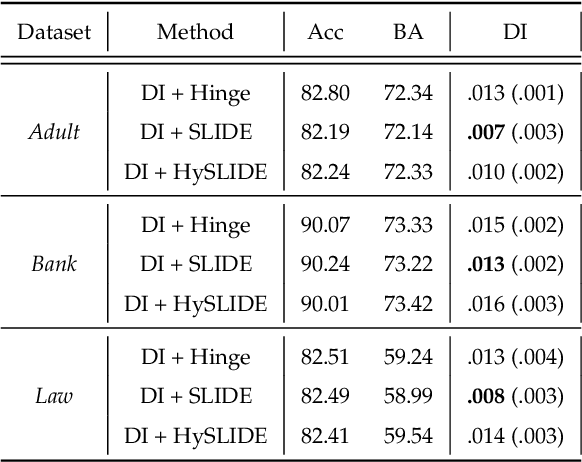

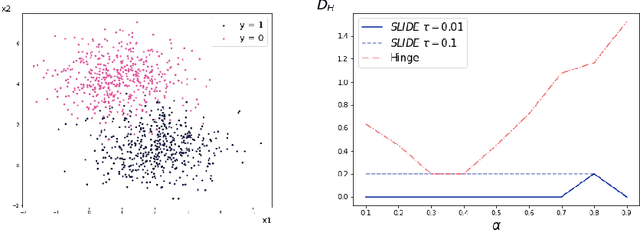

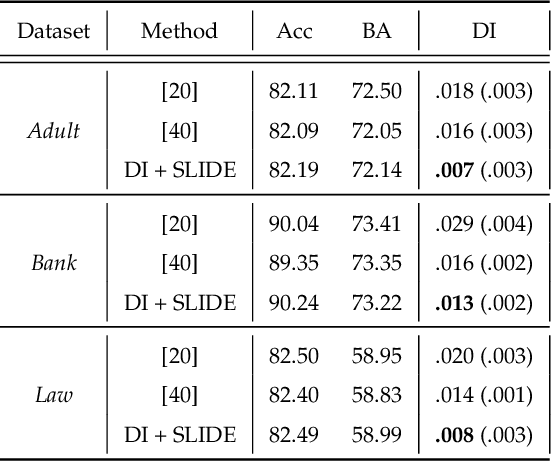

Because AI algorithms have a vital effect on social decision making, they should be not only accurate but also fair. Among the various approaches to fair AI, learning a prediction model by minimizing the empirical risk (e.g., cross-entropy) subject to a given fairness constraint has received much attention. To avoid computational difficulty, however, the given fairness constraint is usually replaced by a surrogate fairness constraint, just as the 0-1 loss is replaced by a convex surrogate loss in classification problems. In this paper, we investigate the validity of existing surrogate fairness constraints and propose a new surrogate fairness constraint called SLIDE, which is computationally feasible and asymptotically valid in the sense that the learned model satisfies the fairness constraint asymptotically and achieves a fast convergence rate. Numerical experiments confirm that SLIDE works well on various benchmark datasets.
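To make the idea of a surrogate fairness constraint concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the paper's definition of SLIDE: the fairness notion is assumed to be demographic parity between two groups, and the `ramp` function is a hypothetical piecewise-linear stand-in for the indicator I(score > 0). All function names, the parameter `tau`, and the toy data are invented for this example.

```python
def dp_gap_indicator(scores, groups):
    """Demographic-parity gap computed with the exact 0-1 indicator I(score > 0)."""
    rates = []
    for g in (0, 1):
        member = [s for s, a in zip(scores, groups) if a == g]
        rates.append(sum(1.0 for s in member if s > 0) / len(member))
    return abs(rates[0] - rates[1])


def ramp(z, tau=1.0):
    """Hypothetical continuous stand-in for I(z > 0) (an assumption, not SLIDE itself):
    0 for z <= 0, linear on (0, tau], and 1 for z > tau."""
    return min(1.0, max(0.0, z / tau))


def dp_gap_surrogate(scores, groups, tau=1.0):
    """Same gap, with the indicator replaced by the continuous ramp."""
    rates = []
    for g in (0, 1):
        member = [s for s, a in zip(scores, groups) if a == g]
        rates.append(sum(ramp(s, tau) for s in member) / len(member))
    return abs(rates[0] - rates[1])


# Toy data (made up): real-valued model scores and a binary sensitive attribute.
scores = [2.0, 0.5, -1.0, 0.2, -0.3, -2.0]
groups = [0, 0, 0, 1, 1, 1]

print("indicator gap:", dp_gap_indicator(scores, groups))
print("surrogate gap (tau=1.0):", dp_gap_surrogate(scores, groups, tau=1.0))
print("surrogate gap (tau=0.1):", dp_gap_surrogate(scores, groups, tau=0.1))
```

The indicator-based gap is piecewise constant in the scores, which is what makes it hard to optimize directly. The surrogate is continuous, and on this toy data it matches the indicator-based gap once `tau` is small enough. Whether a model that satisfies a surrogate constraint also satisfies the original constraint is the validity question the abstract raises.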
