SliceMatch: Geometry-guided Aggregation for Cross-View Pose Estimation


Dec 05, 2022

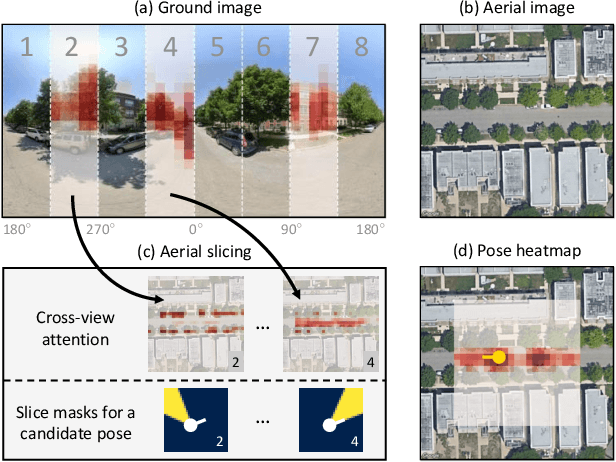

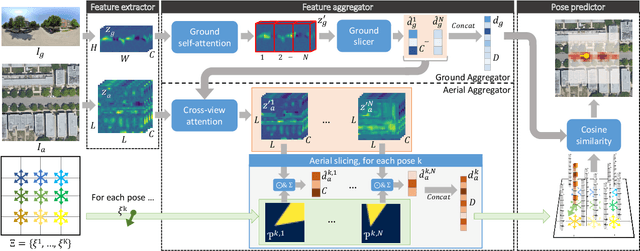

This work addresses cross-view camera pose estimation, i.e., determining the 3-DoF camera pose of a given ground-level image with respect to an aerial image of the local area. We propose SliceMatch, which consists of ground and aerial feature extractors, feature aggregators, and a pose predictor. The feature extractors extract dense features from the ground and aerial images. Given a set of candidate camera poses, the feature aggregators construct a single ground descriptor and a set of rotationally equivariant, pose-dependent aerial descriptors. Notably, our novel aerial feature aggregator has a cross-view attention module for ground-view-guided aerial feature selection, and it pools features using the geometric projection of the ground camera's viewing frustum onto the aerial image. The aerial descriptors are constructed efficiently by using precomputed masks and by re-assembling the aerial descriptors for rotated poses. SliceMatch is trained using contrastive learning, and pose estimation is formulated as a similarity comparison between the ground descriptor and the aerial descriptors. SliceMatch outperforms the state of the art by 19% and 62% in median localization error on the VIGOR and KITTI datasets, respectively, while running at 3x the FPS of the fastest baseline.
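The final step described above, choosing a pose by comparing one ground descriptor against a set of pose-dependent aerial descriptors, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function name `select_pose`, the use of cosine similarity, the descriptor dimension, and the toy candidate grid are all assumptions made for illustration, and the descriptors here are random vectors rather than outputs of the feature aggregators.

```python
import numpy as np

def select_pose(ground_desc, aerial_descs, candidate_poses):
    """Pick the candidate pose whose aerial descriptor is most similar
    to the ground descriptor (cosine similarity is an assumption here)."""
    # L2-normalize so that the dot product equals cosine similarity.
    g = ground_desc / np.linalg.norm(ground_desc)
    a = aerial_descs / np.linalg.norm(aerial_descs, axis=1, keepdims=True)
    sims = a @ g                      # one similarity score per candidate pose
    best = int(np.argmax(sims))       # index of the best-matching candidate
    return candidate_poses[best], sims

# Hypothetical candidate set: a 2 x 2 location grid with 4 orientations each,
# giving 16 candidate 3-DoF poses (x, y, theta in degrees).
candidate_poses = np.array([
    [x, y, theta]
    for x in (0.0, 5.0)
    for y in (0.0, 5.0)
    for theta in (0.0, 90.0, 180.0, 270.0)
])

# Toy descriptors: random stand-ins for the aggregated aerial descriptors.
rng = np.random.default_rng(0)
aerial_descs = rng.normal(size=(len(candidate_poses), 16))

# Simulate a ground descriptor that matches candidate 7 up to small noise.
true_index = 7
ground_desc = aerial_descs[true_index] + 0.01 * rng.normal(size=16)

best_pose, sims = select_pose(ground_desc, aerial_descs, candidate_poses)
print("estimated pose (x, y, theta):", best_pose)
```

In this sketch the whole candidate set is scored with a single matrix-vector product, which is one plausible reason a similarity-comparison formulation can be fast once the aerial descriptors are available.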
