Skip and Skip: Segmenting Medical Images with Prompts

Paper and Code

Jun 21, 2024

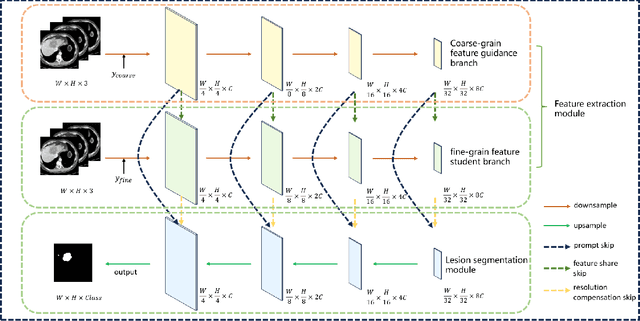

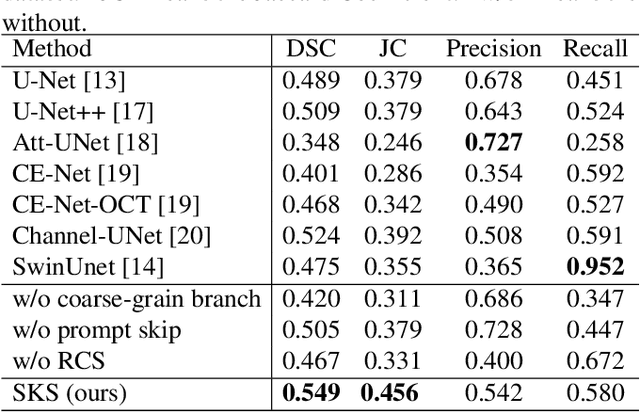

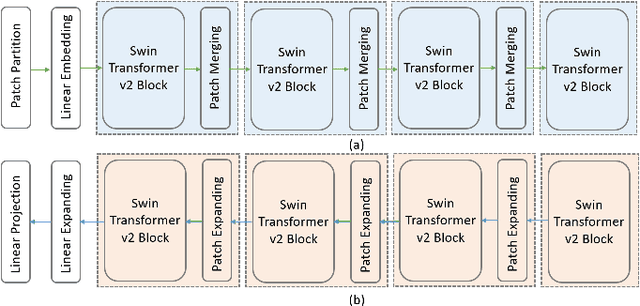

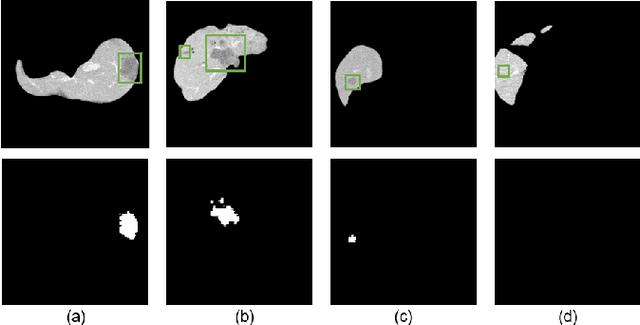

Most medical image lesion segmentation methods rely on hand-crafted accurate annotations of the original image for supervised learning. Recently, a series of weakly supervised or unsupervised methods have been proposed to reduce the dependence on pixel-level annotations. However, these methods are essentially based on pixel-level annotation, ignoring the image-level diagnostic results of the current massive medical images. In this paper, we propose a dual U-shaped two-stage framework that utilizes image-level labels to prompt the segmentation. In the first stage, we pre-train a classification network with image-level labels, which is used to obtain the hierarchical pyramid features and guide the learning of downstream branches. In the second stage, we feed the hierarchical features obtained from the classification branch into the downstream branch through short-skip and long-skip and get the lesion masks under the supervised learning of pixel-level labels. Experiments show that our framework achieves better results than networks simply using pixel-level annotations.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge