Skew Probabilistic Neural Networks for Learning from Imbalanced Data

Paper and Code

Dec 10, 2023

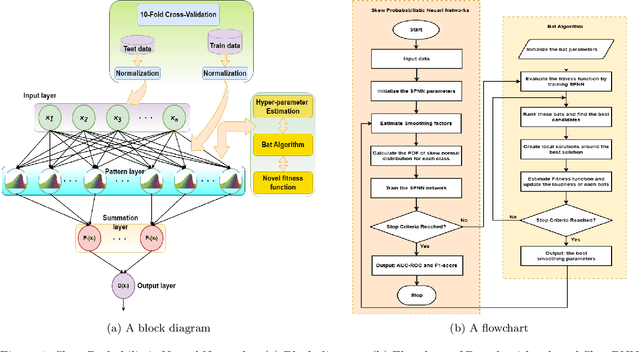

Real-world datasets often exhibit imbalanced class distributions, in which certain classes are severely underrepresented. In such cases, traditional pattern classifiers tend to be biased towards the majority class, which impedes accurate predictions for the minority class. This paper introduces an approach for imbalanced data that uses probabilistic neural networks (PNNs) with a skew normal probability kernel. PNNs are known for providing probabilistic outputs, which allow prediction confidence to be quantified and uncertainty to be handled. By leveraging the skew normal distribution, which offers increased flexibility, particularly for imbalanced and non-symmetric data, our proposed Skew Probabilistic Neural Networks (SkewPNNs) can better represent the underlying class densities. Good performance on imbalanced datasets requires careful hyperparameter tuning. To this end, we employ a population-based heuristic, the Bat optimization algorithm, to explore the hyperparameter space effectively. We also prove the statistical consistency of the density estimates, which implies that they approach the true distribution smoothly as the sample size increases. We conduct simulation experiments on several synthetic datasets, comparing SkewPNNs against various benchmark learners for imbalanced data. Our real-data analysis shows that SkewPNNs substantially outperform state-of-the-art machine learning methods on both balanced and imbalanced datasets in most experimental settings.
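To make the idea of a PNN with a skew normal kernel concrete, here is a minimal sketch. It is not the authors' implementation: it assumes a product kernel over features built from the standard skew normal density 2·φ(z)·Φ(αz), a single shared bandwidth `sigma` and skewness `alpha`, and equal class priors. The function names `skew_normal_pdf` and `skew_pnn_predict_proba` are hypothetical. In the paper, the hyperparameters are tuned with the Bat algorithm; here they are simply fixed by hand.

```python
import math
import numpy as np


def skew_normal_pdf(z, alpha):
    """Standard skew normal density 2 * phi(z) * Phi(alpha * z).

    alpha = 0 recovers the ordinary standard normal density.
    """
    z = np.asarray(z, dtype=float)
    phi = np.exp(-0.5 * z ** 2) / math.sqrt(2.0 * math.pi)
    erf = np.vectorize(math.erf)  # elementwise error function
    Phi = 0.5 * (1.0 + erf(alpha * z / math.sqrt(2.0)))
    return 2.0 * phi * Phi


def skew_pnn_predict_proba(X_train, y_train, X_test, sigma=0.5, alpha=0.0):
    """Parzen-style PNN with a skew normal kernel (illustrative assumption).

    For each class, the kernel is averaged over that class's training
    points, so a class with few samples is not penalised by its size.
    Returns the class labels and normalised per-class scores.
    """
    X_train = np.asarray(X_train, dtype=float)
    X_test = np.asarray(X_test, dtype=float)
    y_train = np.asarray(y_train)
    classes = np.unique(y_train)
    scores = np.zeros((X_test.shape[0], classes.size))
    for j, c in enumerate(classes):
        Xc = X_train[y_train == c]                           # (n_c, d)
        z = (X_test[:, None, :] - Xc[None, :, :]) / sigma    # (m, n_c, d)
        k = np.prod(skew_normal_pdf(z, alpha) / sigma, axis=2)  # (m, n_c)
        scores[:, j] = k.mean(axis=1)                        # class density
    total = scores.sum(axis=1, keepdims=True)
    proba = scores / np.where(total > 0, total, 1.0)
    return classes, proba


# Toy imbalanced data: four majority points near the origin, one minority point.
X_toy = np.array([[0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [-0.1, 0.0], [3.0, 3.0]])
y_toy = np.array([0, 0, 0, 0, 1])
classes, proba = skew_pnn_predict_proba(X_toy, y_toy, np.array([[3.0, 3.0]]))
predicted = classes[np.argmax(proba, axis=1)]
```

Because each class density is an average rather than a sum, the single minority point in the toy data still dominates the score for a query located at that point. A nonzero `alpha` tilts each kernel to one side, which is the extra flexibility the skew normal distribution contributes.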
