Sinogram upsampling using Primal-Dual UNet for undersampled CT and radial MRI reconstruction


Dec 26, 2021

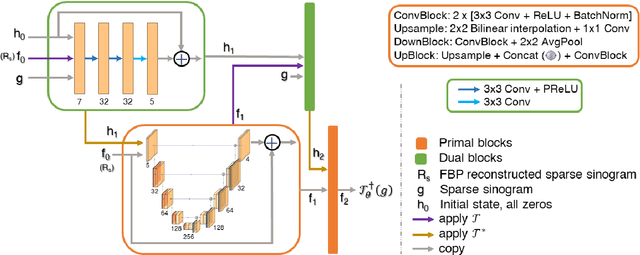

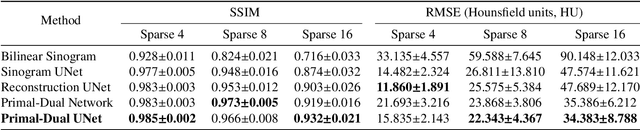

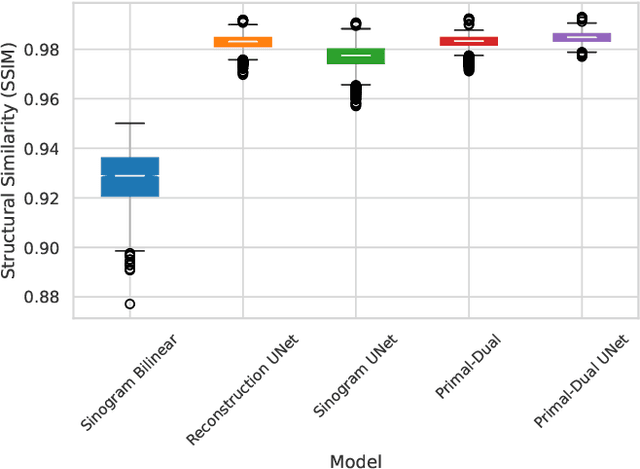

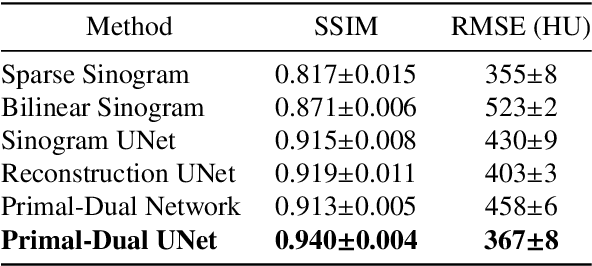

CT and MRI are two widely used clinical imaging modalities for non-invasive diagnosis, but each has a drawback: CT uses harmful ionising radiation, and MRI suffers from slow acquisition. Both problems can be mitigated by undersampling, such as sparse sampling. However, undersampled data leads to lower resolution and introduces artefacts. Several techniques, including deep learning-based methods, have been proposed to reconstruct such data, but undersampled reconstruction for these two modalities has so far been treated as two separate problems and tackled by different research works. This paper proposes a unified solution for both sparse CT and undersampled radial MRI reconstruction, achieved by applying Fourier transform-based pre-processing to the radial MRI data and then reconstructing both modalities using sinogram upsampling combined with filtered back-projection. The Primal-Dual network is a deep learning-based method for reconstructing sparsely sampled CT data. This paper introduces the Primal-Dual UNet, which improves on the Primal-Dual network in terms of accuracy and reconstruction speed. For sparse CT reconstruction with fan-beam geometry at a sparsity level of 16, the proposed method achieved an average SSIM of 0.932, a statistically significant improvement over the previous model's 0.919. For undersampled brain and abdominal MRI data with an acceleration factor of 16, the proposed model achieved average SSIMs of 0.903 and 0.957, respectively, statistically significant improvements over the original model's 0.867 and 0.949. Finally, this paper shows that the proposed network improves not only the overall image quality but also the image quality within regions of interest, and that it generalises better in the presence of a needle.
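To make the term "sinogram upsampling" concrete, the sketch below simulates sparse-view acquisition at a sparsity level of 16 and then fills in the missing projection angles by plain linear interpolation. This is only a naive baseline for illustration, not the Primal-Dual UNet described above. The function names, the toy data, and the array layout (rows as projection angles, columns as detector bins) are assumptions made for this example. The filtered back-projection step that would follow is not shown.

```python
import numpy as np


def sparsify_sinogram(sinogram, factor):
    """Keep every `factor`-th projection angle (hypothetical helper).

    Assumes rows are projection angles and columns are detector bins.
    """
    return sinogram[::factor]


def upsample_sinogram_linear(sparse, factor, n_angles):
    """Fill in missing angles by linear interpolation along the angle axis.

    A naive stand-in for a learned upsampler; angles beyond the last
    measured one are clamped to the last measured projection.
    """
    known = np.arange(0, n_angles, factor)   # indices of measured angles
    full = np.arange(n_angles)               # indices of all target angles
    out = np.empty((n_angles, sparse.shape[1]), dtype=float)
    for d in range(sparse.shape[1]):         # interpolate each detector bin
        out[:, d] = np.interp(full, known, sparse[:, d])
    return out


# Toy sinogram that varies linearly with angle, so linear interpolation
# recovers it exactly; real sinograms are not this simple.
n_angles, n_det = 33, 8
angles = np.arange(n_angles, dtype=float)[:, None]
dets = np.arange(n_det, dtype=float)[None, :]
full_sino = 2.0 * angles + dets

sparse_sino = sparsify_sinogram(full_sino, 16)            # 3 of 33 angles kept
restored = upsample_sinogram_linear(sparse_sino, 16, n_angles)
```

On real data, interpolation of this kind blurs angular detail, which is the gap a learned upsampling model is intended to close before the upsampled sinogram is passed to filtered back-projection.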
