Single-Shot Optical Neural Network

Paper and Code

May 18, 2022

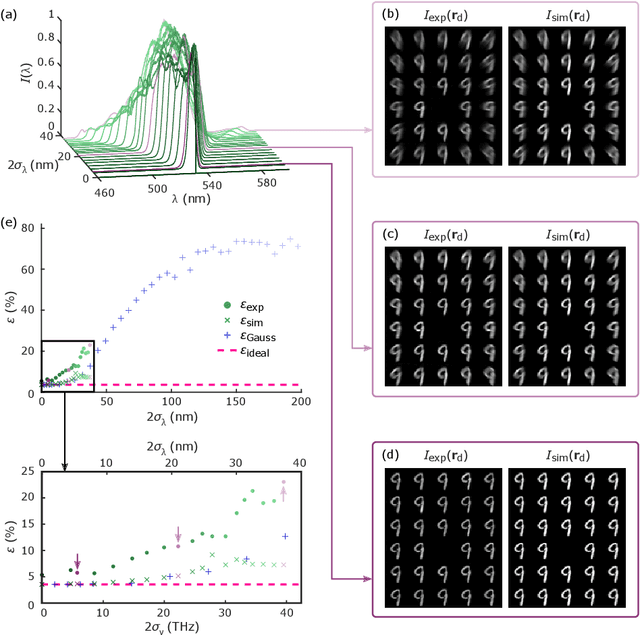

As deep neural networks (DNNs) grow to solve increasingly complex problems, they are becoming limited by the latency and power consumption of existing digital processors. 'Weight-stationary' analog optical and electronic hardware has been proposed to reduce the compute resources required by DNNs by eliminating expensive weight updates; however, its scalability has so far been limited to an input vector length $K$ of hundreds of elements. Here, we present a scalable, single-shot-per-layer weight-stationary optical processor that combines free-space optics, for passive optical copying and large-scale distribution of an input vector, with integrated optoelectronics, for static, reconfigurable weighting and the nonlinearity. We propose an optimized near-term CMOS-compatible system with $K = 1{,}000$ and beyond, and we calculate its theoretical total latency ($\sim$10 ns), energy consumption ($\sim$10 fJ/MAC) and throughput ($\sim$petaMAC/s) per layer. We also experimentally test DNN classification accuracy with single-shot analog optical encoding, copying and weighting of the MNIST handwritten digit dataset in a proof-of-concept system, achieving 94.7% accuracy (close to the ground-truth accuracy of 96.3%) without retraining on the hardware or data preprocessing. Lastly, we determine the upper bound on the throughput of our system ($\sim$0.9 exaMAC/s), which is set by the maximum optical bandwidth before significant loss of accuracy. This joint use of wide spectral and spatial bandwidths enables highly efficient computing for next-generation DNNs.
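To make the copy, weight and sum structure described in the abstract concrete, the following is a minimal numerical sketch of one weight-stationary layer. It is an illustration only, not the authors' implementation: the function names `single_shot_layer` and `macs_per_layer`, the output count `n`, and the choice of ReLU as the nonlinearity are all assumptions introduced here, and no optical noise, loss or bandwidth effects are modeled.

```python
def relu(s):
    """Assumed nonlinearity for this sketch; the abstract does not name one."""
    return max(0.0, s)


def single_shot_layer(x, weights, nonlinearity=relu):
    """Compute one layer as fan-out, static weighting, summation, nonlinearity.

    x       : input vector of length K
    weights : list of N rows, each of length K, held fixed (weight-stationary)
    """
    k = len(x)
    outputs = []
    for row in weights:
        if len(row) != k:
            raise ValueError("each weight row must have length K")
        copy = list(x)  # stands in for passive copying of the input vector
        products = [w * xi for w, xi in zip(row, copy)]  # static weighting
        outputs.append(nonlinearity(sum(products)))  # summation, then nonlinearity
    return outputs


def macs_per_layer(k, n):
    """Number of multiply-accumulate operations in a K-input, N-output layer."""
    return k * n


x = [1.0, 2.0, 3.0]
weights = [[1.0, 0.0, -1.0], [0.5, 0.5, 0.5]]
print(single_shot_layer(x, weights))  # [0.0, 3.0]
print(macs_per_layer(1000, 1000))     # 1000000
```

In this sketch every output row sees its own copy of the same input, so all $K \times N$ multiply-accumulates of a layer are conceptually evaluated together rather than streamed through a shared arithmetic unit, which is the sense of "single-shot-per-layer" used above.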
