Simplified Mamba with Disentangled Dependency Encoding for Long-Term Time Series Forecasting

Aug 22, 2024

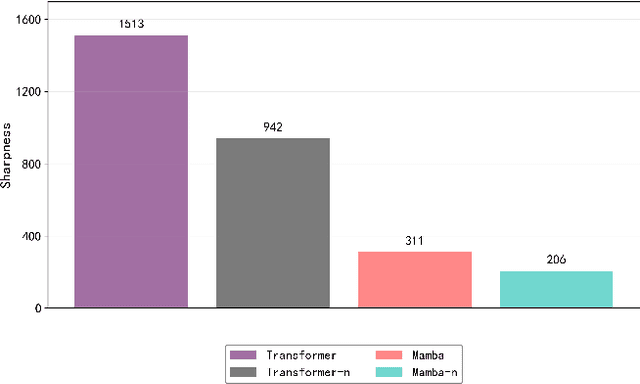

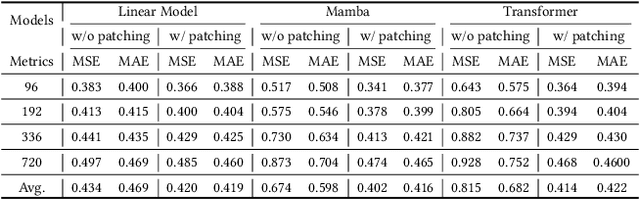

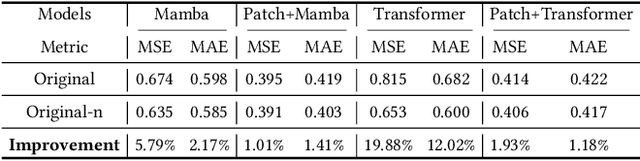

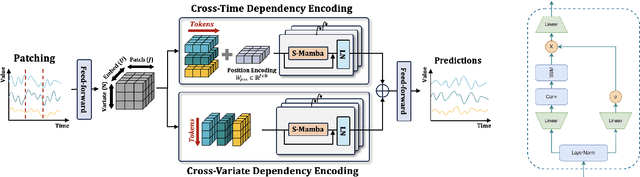

Recently, many deep learning models have been proposed for Long-term Time Series Forecasting (LTSF). Based on previous literature, we identify three critical patterns that can improve forecasting accuracy: the order and semantic dependencies in the time dimension, and the cross-variate dependency. However, little effort has been made to consider order and semantic dependencies simultaneously when developing forecasting models. Moreover, existing approaches exploit cross-variate dependency by mixing information from different timestamps and variates, which may introduce irrelevant or harmful cross-variate information into the time dimension and substantially hinder forecasting performance. To overcome these limitations, we investigate the potential of Mamba for LTSF and discover two key advantages that benefit forecasting: (i) the selection mechanism lets Mamba focus on or ignore specific inputs and learn semantic dependency easily, and (ii) Mamba preserves order dependency by processing sequences recursively. We then find empirically that the non-linear activation used in Mamba is unnecessary for semantically sparse time series data. Therefore, we propose SAMBA, a Simplified Mamba with disentangled dependency encoding. Specifically, we first remove the non-linearities of Mamba to make it more suitable for LTSF. Furthermore, we propose a disentangled dependency encoding strategy that endows Mamba with cross-variate dependency modeling capabilities while reducing the interference between the time and variate dimensions. Extensive experimental results on seven real-world datasets demonstrate the effectiveness of SAMBA over state-of-the-art forecasting models.
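To make the two properties named in the abstract concrete, here is a minimal sketch of a selective linear recurrence over a single variate. It is not the authors' SAMBA implementation: the function name `selective_scan`, the scalar-input setup, and the parameters `w_delta`, `w_b`, `w_c`, and `a` are all assumptions chosen for illustration. The step size, input gate, and output gate depend on the current input (a toy stand-in for the selection mechanism), the state is updated step by step (order dependency), and no activation is applied to the output, loosely mirroring the idea of dropping the non-linear activation.

```python
import math


def selective_scan(x, w_delta, w_b, w_c, a):
    """Toy selective state-space recurrence (illustrative assumption, not SAMBA).

    For each time step t:
        delta_t = softplus(w_delta * x_t)          # input-dependent step size
        h_t     = exp(delta_t * a) * h_{t-1} + delta_t * (w_b * x_t) * x_t
        y_t     = (w_c * x_t) . h_t                # no activation on the output
    """
    h = [0.0] * len(a)  # hidden state, one entry per state dimension
    y = []
    for x_t in x:
        # Softplus keeps the step size positive.
        delta = math.log1p(math.exp(w_delta * x_t))
        # With negative entries in `a`, the decay lies in (0, 1).
        decay = [math.exp(delta * a_i) for a_i in a]
        # Recursive update: the state depends on all earlier inputs, in order.
        h = [d * h_i + delta * (wb * x_t) * x_t
             for d, h_i, wb in zip(decay, h, w_b)]
        # Input-dependent linear readout of the state.
        y.append(sum((wc * x_t) * h_i for wc, h_i in zip(w_c, h)))
    return y


# Hypothetical toy inputs and parameters.
x = [0.5, -1.0, 2.0, 0.3]
a = [-1.0, -2.0]
w_b = [1.0, 0.5]
w_c = [0.3, -0.7]
y = selective_scan(x, 0.5, w_b, w_c, a)
```

Because the state is carried forward one step at a time, changing a later input cannot alter earlier outputs, which is one simple way to see that the recurrence respects the order of the sequence.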