SiamMan: Siamese Motion-aware Network for Visual Tracking

Paper and Code

Jan 18, 2020

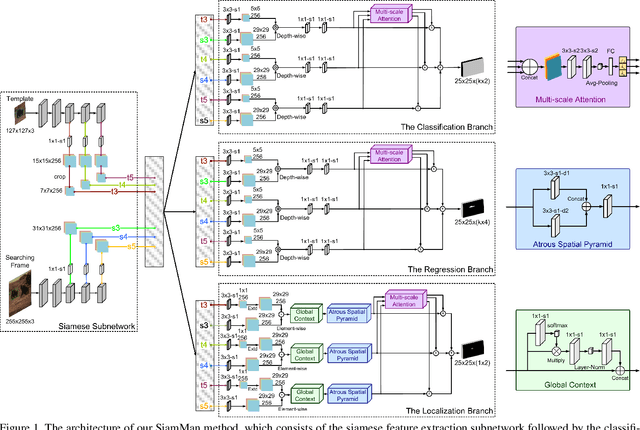

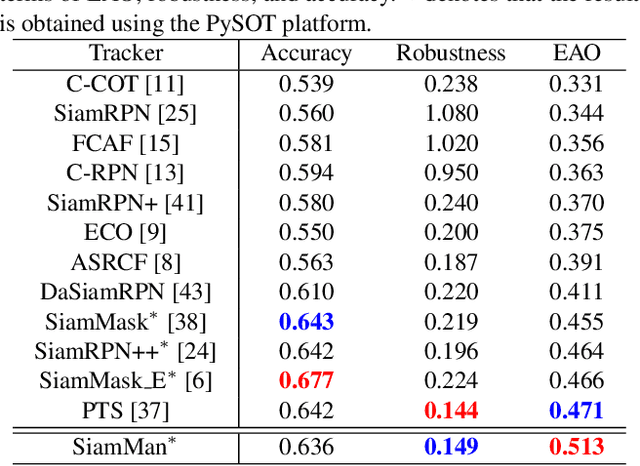

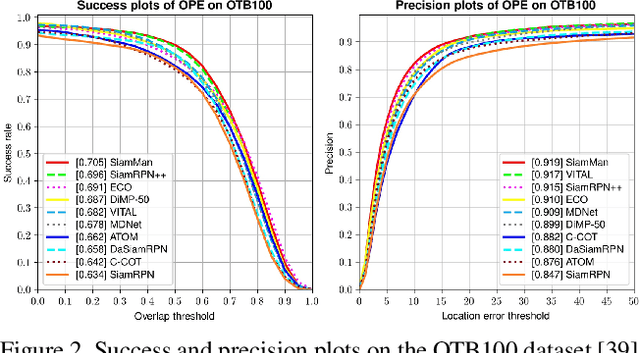

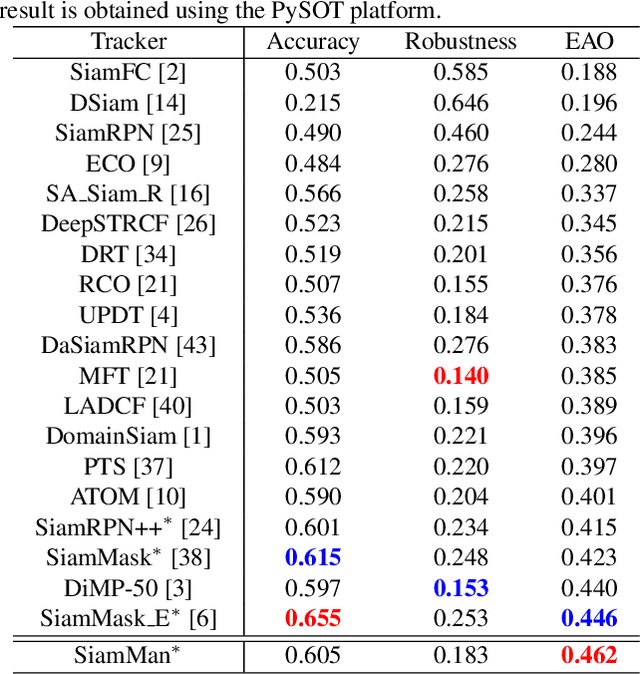

In this paper, we present a novel siamese motion-aware network (SiamMan) for visual tracking, which consists of a siamese feature extraction subnetwork followed by classification, regression, and localization branches in parallel. The classification branch distinguishes the foreground from the background, and the regression branch is adopted to regress the bounding box of the target. To reduce the impact of manually designed anchor boxes and to adapt to different target motion patterns, we design the localization branch, which coarsely localizes the target to help the regression branch generate accurate results. Meanwhile, we introduce a global context module into the localization branch to capture long-range dependencies, giving greater robustness to large target displacements. In addition, we design a multi-scale learnable attention module to guide these three branches to exploit discriminative features for better performance. The whole network is trained offline in an end-to-end fashion with large-scale image pairs using the standard SGD algorithm with back-propagation. Extensive experiments on five challenging benchmarks, i.e., VOT2016, VOT2018, OTB100, UAV123 and LTB35, demonstrate that SiamMan achieves leading accuracy with high efficiency. Code can be found at https://isrc.iscas.ac.cn/gitlab/research/siamman.
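To make the data flow described above concrete, the following is a toy sketch of a siamese tracker step with three heads. It is not the SiamMan implementation: the mean-subtraction `backbone`, the plain cross-correlation, and all three head functions are hypothetical stand-ins chosen so the example runs with the Python standard library alone. The global context module and the multi-scale attention module are omitted.

```python
import math
from typing import Dict, List, Tuple

Grid = List[List[float]]


def backbone(image: Grid) -> Grid:
    """Toy shared feature extractor (assumption): subtract the image mean.

    The same function is applied to both inputs, mimicking siamese weight sharing.
    """
    flat = [v for row in image for v in row]
    mean = sum(flat) / len(flat)
    return [[v - mean for v in row] for row in image]


def cross_correlate(search: Grid, template: Grid) -> Grid:
    """Slide the template features over the search features (valid positions only)."""
    th, tw = len(template), len(template[0])
    sh, sw = len(search), len(search[0])
    return [
        [
            sum(search[i + a][j + b] * template[a][b]
                for a in range(th) for b in range(tw))
            for j in range(sw - tw + 1)
        ]
        for i in range(sh - th + 1)
    ]


def classification_head(resp: Grid) -> Grid:
    """Toy foreground score per position: sigmoid of the response."""
    return [[1.0 / (1.0 + math.exp(-v)) for v in row] for row in resp]


def localization_head(resp: Grid) -> Tuple[int, int]:
    """Toy coarse localization: position of the strongest response."""
    positions = [(i, j) for i in range(len(resp)) for j in range(len(resp[0]))]
    return max(positions, key=lambda p: resp[p[0]][p[1]])


def regression_head(resp: Grid, th: int, tw: int) -> List[List[Tuple[int, int, int, int]]]:
    """Toy box per position as (top, left, height, width); no learned offsets."""
    return [[(i, j, th, tw) for j in range(len(resp[0]))] for i in range(len(resp))]


def track_step(template_img: Grid, search_img: Grid) -> Dict[str, object]:
    z = backbone(template_img)   # template features
    x = backbone(search_img)     # search features, same extractor
    resp = cross_correlate(x, z)
    cls_map = classification_head(resp)
    loc = localization_head(resp)
    boxes = regression_head(resp, len(z), len(z[0]))
    # The coarse location selects which regressed box and score to report.
    return {"score": cls_map[loc[0]][loc[1]], "location": loc, "box": boxes[loc[0]][loc[1]]}


if __name__ == "__main__":
    template = [[1.0, 0.0], [0.0, 1.0]]
    search = [[0.0] * 4 for _ in range(4)]
    search[1][2] = 1.0
    search[2][3] = 1.0
    print(track_step(template, search))
```

In this sketch the coarse location simply picks one of the per-position boxes, which is only a loose analogy for how the localization branch is described as helping the regression branch.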
