Shared-unique Features and Task-aware Prioritized Sampling on Multi-task Reinforcement Learning

Paper and Code

Jun 02, 2024

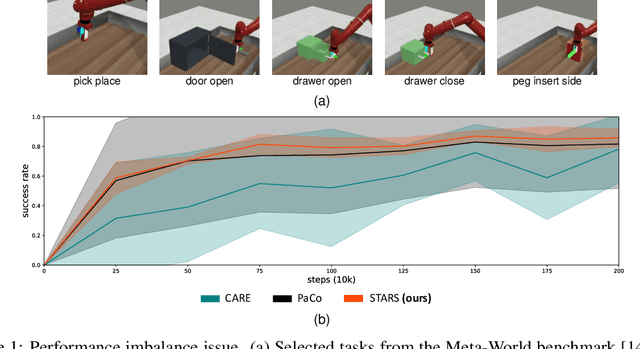

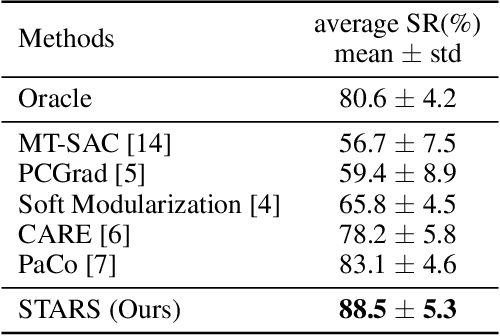

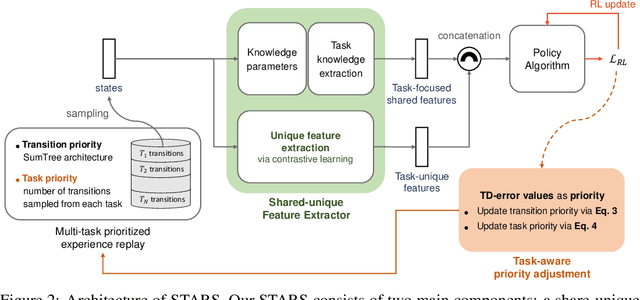

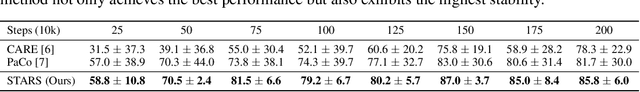

We observe that current state-of-the-art (SOTA) methods suffer from the performance imbalance issue when performing multi-task reinforcement learning (MTRL) tasks. While these methods may achieve impressive performance on average, they perform extremely poorly on a few tasks. To address this, we propose a new and effective method called STARS, which consists of two novel strategies: a shared-unique feature extractor and task-aware prioritized sampling. First, the shared-unique feature extractor learns both shared and task-specific features to enable better synergy of knowledge between different tasks. Second, the task-aware sampling strategy is combined with the prioritized experience replay for efficient learning on tasks with poor performance. The effectiveness and stability of our STARS are verified through experiments on the mainstream Meta-World benchmark. From the results, our STARS statistically outperforms current SOTA methods and alleviates the performance imbalance issue. Besides, we visualize the learned features to support our claims and enhance the interpretability of STARS.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge