Shape-independent Hardness Estimation Using Deep Learning and a GelSight Tactile Sensor

Paper and Code

Apr 13, 2017

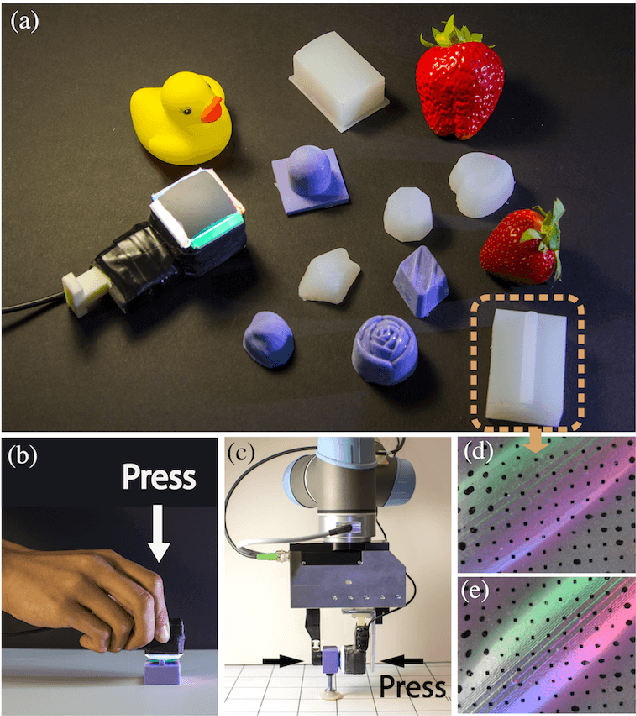

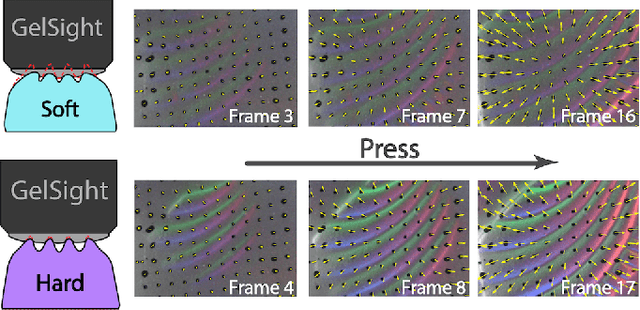

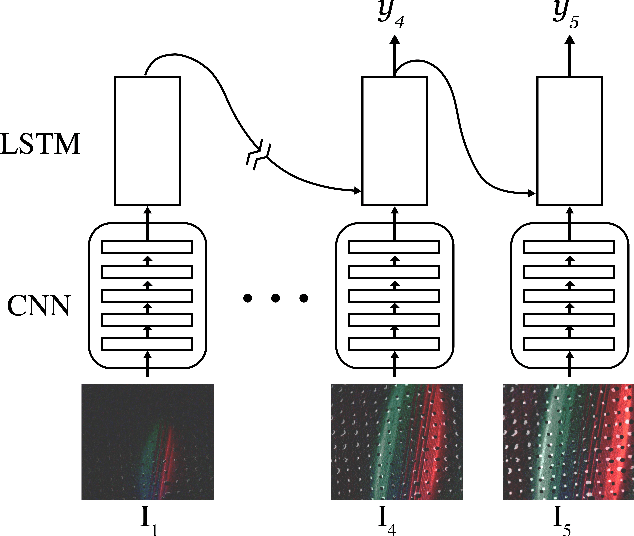

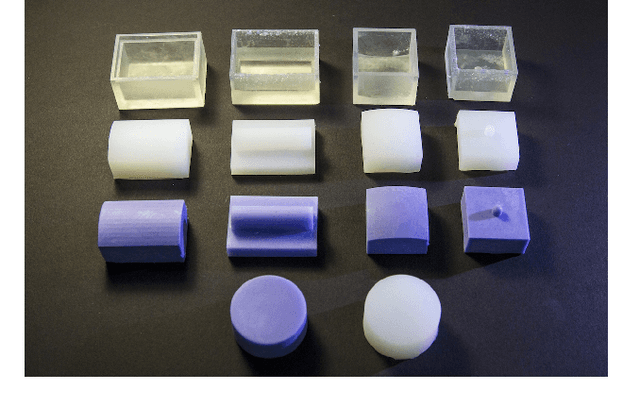

Hardness is among the most important attributes of an object that humans learn about through touch. However, approaches for robots to estimate hardness are limited, because current tactile sensors provide too little information. In this work, we address these limitations by introducing a novel method for hardness estimation based on the GelSight tactile sensor; the method does not require accurate control of contact conditions or of the object's shape. A GelSight sensor has a soft contact interface and provides high-resolution tactile images of contact geometry, as well as contact force and slip conditions. In this paper, we use the sensor to measure the hardness of objects with multiple shapes under loosely controlled contact conditions. The contact is made manually or by a robot hand, while the force and trajectory are unknown and uneven. We analyze the data using a deep convolutional (and recurrent) neural network. Experiments show that the neural network model can estimate the hardness of objects with different shapes and with hardness ranging from 8 to 87 on the Shore 00 scale.
