Semantic Distribution-aware Contrastive Adaptation for Semantic Segmentation

Paper and Code

May 11, 2021

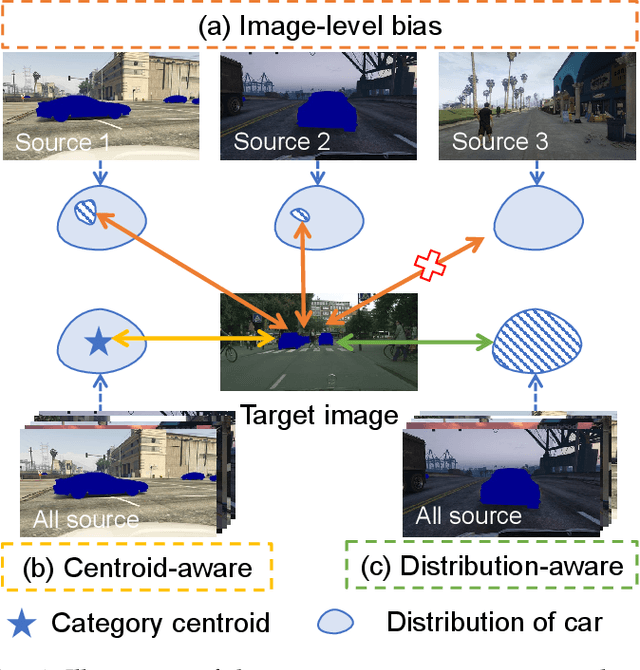

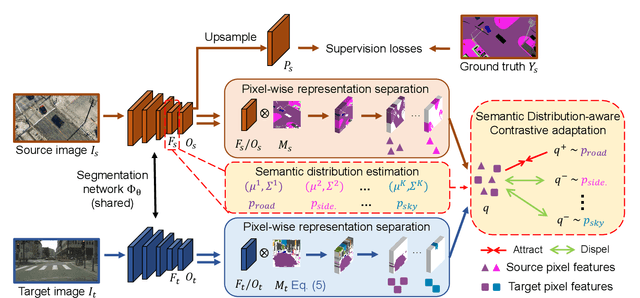

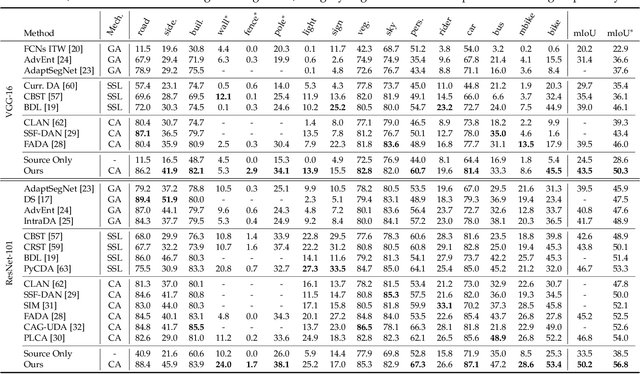

Domain adaptive semantic segmentation refers to making predictions on a certain target domain with only annotations of a specific source domain. Current state-of-the-art works suggest that performing category alignment can alleviate domain shift reasonably. However, they are mainly based on image-to-image adversarial training and give little consideration to the semantic variations of an object among images, failing to capture a comprehensive picture of different categories. This motivates us to explore a holistic representative, the semantic distribution of each category in the source domain, to mitigate the problem above. In this paper, we present the semantic distribution-aware contrastive adaptation (SDCA) algorithm, which enables pixel-wise representation alignment under the guidance of semantic distributions. Specifically, we first design a pixel-wise contrastive loss by considering the correspondences between semantic distributions and pixel-wise representations from both domains. Essentially, pixel representations from the same category should cluster together and those from different categories should spread out. Next, an upper bound on this formulation is derived by involving the learning of an infinite number of (dis)similar pairs, making it efficient. Finally, we verify that SDCA can further improve segmentation accuracy when integrated with self-supervised learning. We evaluate SDCA on multiple benchmarks, achieving considerable improvements over existing algorithms. The code is publicly available at https://github.com/BIT-DA/SDCA
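To make the pixel-wise contrastive idea concrete, here is a minimal sketch, not the authors' implementation. It assumes each category's semantic distribution is summarized only by its mean vector (a prototype), and it scores every pixel feature against all prototypes with a softmax cross-entropy. The function name `pixel_contrastive_loss`, the `temperature` parameter, and the array shapes are hypothetical choices for illustration; the upper bound described in the abstract is not reproduced here.

```python
import numpy as np

def pixel_contrastive_loss(features, labels, class_means, temperature=1.0):
    """Illustrative prototype-based contrastive loss (an assumption, not SDCA itself).

    features:    (N, D) pixel representations
    labels:      (N,)   integer category index per pixel
    class_means: (K, D) one mean vector per category
    """
    # Similarity of every pixel to every category mean.
    logits = features @ class_means.T / temperature            # (N, K)
    # Subtract the row-wise max for a numerically stable log-softmax.
    logits = logits - logits.max(axis=1, keepdims=True)
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Pull each pixel toward its own category mean, push it from the others.
    return -log_prob[np.arange(len(labels)), labels].mean()

# Toy usage: two categories in a 2-D feature space.
means = np.array([[1.0, 0.0], [0.0, 1.0]])
feats = np.array([[5.0, 0.0], [0.0, 5.0]])
aligned = pixel_contrastive_loss(feats, np.array([0, 1]), means)
misaligned = pixel_contrastive_loss(feats, np.array([1, 0]), means)
```

In this toy setup the loss is lower when pixels sit near their own category mean (`aligned`) than when the labels are swapped (`misaligned`), which is the clustering behavior the abstract describes.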
