Self-Supervised Learning Framework for Remote Heart Rate Estimation Using Spatiotemporal Augmentation


Jul 16, 2021

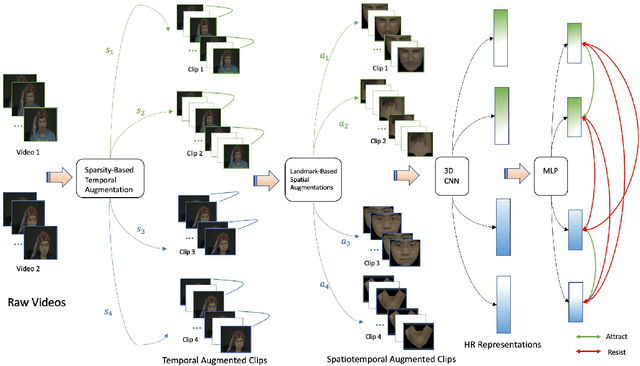

Recent supervised deep learning methods have shown that heart rate (HR) can be measured remotely from facial videos. However, the performance of these supervised methods depends on the availability of large-scale labelled data, and they have been limited to 2D deep learning architectures that do not fully exploit 3D spatiotemporal information. To address these limitations, we present a novel 3D self-supervised spatiotemporal learning framework for remote HR estimation from facial videos. Concretely, we propose a landmark-based spatial augmentation, which splits the face into several informative parts based on Shafer's dichromatic reflection model, and a novel sparsity-based temporal augmentation that exploits the Nyquist-Shannon sampling theorem to enhance the signal modelling ability. We evaluated our method on three public datasets: it outperformed other self-supervised methods and achieved accuracy competitive with state-of-the-art supervised methods.
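The abstract does not specify how the sparsity-based temporal augmentation works, so the following is only a minimal sketch of the underlying idea under stated assumptions. It assumes the augmentation randomly subsamples frames with an integer stride, and that the stride is bounded so the effective frame rate stays strictly above the Nyquist rate (twice the highest pulse frequency). The 240 bpm upper bound, the 30 fps frame rate, and the function names `max_stride`, `sparse_temporal_augment` and `estimate_bpm` are hypothetical illustrations, not the authors' code. With 240 bpm (4 Hz) the Nyquist rate is 8 Hz, so a 30 fps clip can be subsampled with a stride of at most 3 (10 fps); a stride of 4 (7.5 fps) would alias the fastest heart rates.

```python
import numpy as np


def max_stride(fps: float, max_hr_bpm: float = 240.0) -> int:
    """Largest integer frame stride whose subsampled rate fps / stride stays
    strictly above the Nyquist rate of the fastest heart rate of interest.
    (Hypothetical helper; the 240 bpm default is an assumption.)"""
    max_freq_hz = max_hr_bpm / 60.0      # e.g. 240 bpm -> 4 Hz
    nyquist_rate = 2.0 * max_freq_hz     # minimum sampling rate, e.g. 8 Hz
    # Require fps / stride > nyquist_rate, i.e. stride < fps / nyquist_rate.
    stride = int(np.ceil(fps / nyquist_rate)) - 1
    return max(stride, 1)


def sparse_temporal_augment(frames: np.ndarray, fps: float,
                            rng: np.random.Generator,
                            max_hr_bpm: float = 240.0):
    """Randomly subsample a clip along time (axis 0) with a Nyquist-safe stride.

    Hypothetical sketch: returns the subsampled clip and its effective frame rate.
    """
    stride = int(rng.integers(1, max_stride(fps, max_hr_bpm) + 1))
    offset = int(rng.integers(0, stride))  # random starting phase of the subsampling
    return frames[offset::stride], fps / stride


def estimate_bpm(signal: np.ndarray, fps: float) -> float:
    """Heart rate (bpm) as the dominant non-DC frequency of a 1-D pulse signal."""
    spectrum = np.abs(np.fft.rfft(signal - signal.mean()))
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fps)
    return float(freqs[np.argmax(spectrum[1:]) + 1] * 60.0)  # skip the DC bin


if __name__ == "__main__":
    fps, seconds, true_bpm = 30.0, 10, 72.0
    t = np.arange(int(fps * seconds)) / fps
    pulse = np.sin(2 * np.pi * (true_bpm / 60.0) * t)  # synthetic 1.2 Hz trace
    rng = np.random.default_rng(0)
    clip, new_fps = sparse_temporal_augment(pulse, fps, rng)
    print(max_stride(fps), new_fps, round(estimate_bpm(clip, new_fps), 1))
```

Because every allowed stride keeps the sampling rate above the Nyquist rate, each randomly subsampled view of the synthetic trace still yields the same spectral peak at 72 bpm. Sampling the stride and offset at random is one plausible way to produce different temporal views of a clip for self-supervised training; the paper itself may choose strides differently.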
