Self-supervised Graph Learning for Occasional Group Recommendation

Dec 04, 2021

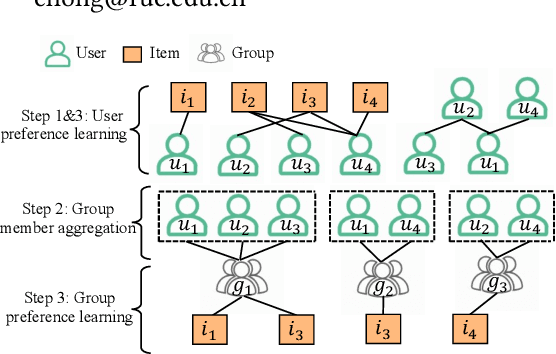

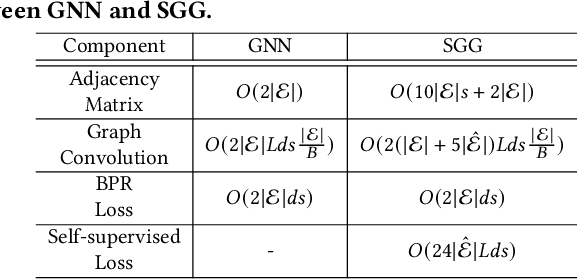

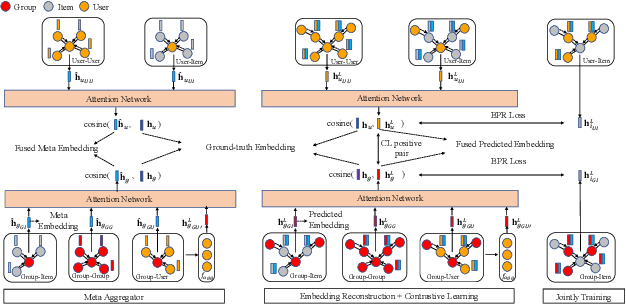

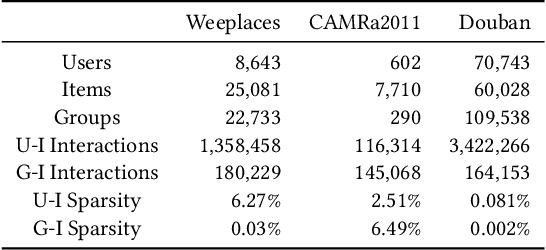

We study the problem of recommending items to occasional groups (a.k.a. cold-start groups), which are formed ad hoc and have few or no historical item interactions. Because the interactions between occasional groups and items are extremely sparse, it is difficult to learn high-quality embeddings for these groups. Although recent advances in Graph Neural Networks (GNNs) incorporate high-order collaborative signals to alleviate the problem, the high-order cold-start neighbors are not explicitly considered during graph convolution. This paper proposes a self-supervised graph learning paradigm that jointly trains the backbone GNN model to reconstruct the group/user/item embeddings under a meta-learning setting, so that it directly improves embedding quality and can be easily adapted to new occasional groups. To further reduce the impact of cold-start neighbors, we incorporate a self-attention-based meta aggregator to enhance the aggregation ability of each graph convolution step. In addition, we add a contrastive learning (CL) adapter to explicitly consider the correlations between group and non-group members. Experimental results on three public recommendation datasets show the superiority of our proposed model over state-of-the-art group recommendation methods.
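To make the idea of attention-weighted neighbor aggregation concrete, here is a minimal sketch in plain Python. It is not the paper's meta aggregator: the function name `attention_aggregate`, the use of scaled dot-product scores, and the list-based embeddings are all assumptions chosen for illustration. The sketch only shows how attention weights can down-weight neighbors whose embeddings are poorly aligned with the target node, which is the general intuition behind reducing the influence of unreliable (e.g., cold-start) neighbors.

```python
import math


def attention_aggregate(target, neighbors):
    """Aggregate neighbor embeddings using scaled dot-product attention.

    Hypothetical helper for illustration only; not the authors' implementation.
    `target` is a list of floats, `neighbors` is a list of such lists.
    """
    if not neighbors:
        # With no neighbors there is nothing to aggregate; fall back to the target.
        return list(target)

    dim = len(target)
    # Attention score of each neighbor: dot product with the target, scaled by sqrt(dim).
    scores = [
        sum(t * n for t, n in zip(target, nb)) / math.sqrt(dim)
        for nb in neighbors
    ]
    # Numerically stable softmax over the scores.
    max_score = max(scores)
    exps = [math.exp(s - max_score) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Weighted sum of neighbor embeddings, one dimension at a time.
    return [
        sum(w * nb[i] for w, nb in zip(weights, neighbors))
        for i in range(dim)
    ]
```

For example, calling `attention_aggregate([1.0, 0.0], [[10.0, 0.0], [0.0, 10.0]])` places almost all of the weight on the first neighbor, because it is the one aligned with the target embedding.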
