Self-Balanced Dropout


Aug 06, 2019

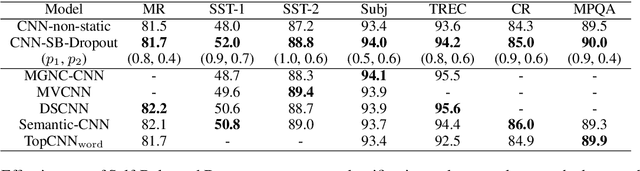

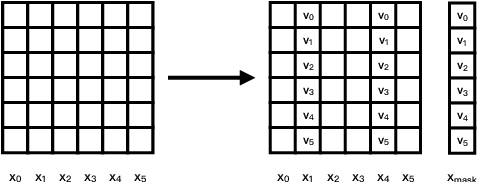

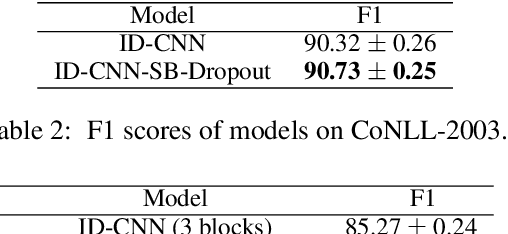

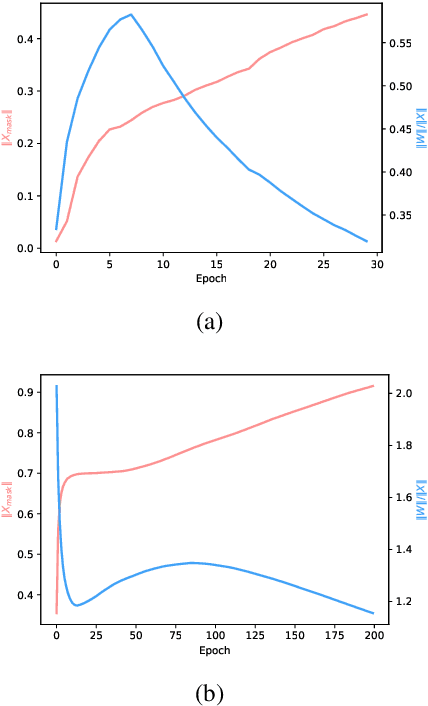

Dropout is known as an effective way to reduce overfitting by preventing co-adaptation of units. In this paper, we theoretically prove that the co-adaptation problem still exists after applying dropout, owing to correlations among the inputs. Based on this proof, we further propose Self-Balanced Dropout, a novel dropout method that uses a trainable variable to balance the influence of input correlation on parameter updates. We evaluate Self-Balanced Dropout on a range of tasks with both simple and complex models. The experimental results show that the mechanism effectively alleviates the co-adaptation problem and significantly improves performance on all tasks.
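For context, the baseline that the abstract builds on can be sketched as follows. This is a minimal illustration of standard (inverted) dropout only, not of Self-Balanced Dropout: the abstract does not specify how the trainable balancing variable enters the computation, so that part is deliberately left out. The function name `inverted_dropout` and its parameters are hypothetical and chosen for illustration.

```python
import random


def inverted_dropout(values, drop_prob, rng):
    """Apply standard inverted dropout to a list of activations (training mode).

    Illustrative baseline only; this is NOT the paper's Self-Balanced Dropout.
    """
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    keep_prob = 1.0 - drop_prob
    out = []
    for v in values:
        # Each unit is kept independently with probability keep_prob.
        keep = rng.random() < keep_prob
        # Kept units are rescaled by 1 / keep_prob so that the expected
        # output equals the input; dropped units are set to zero.
        out.append(v / keep_prob if keep else 0.0)
    return out


# Usage: drop half of 10,000 unit activations with a fixed seed.
rng = random.Random(0)
activations = [1.0] * 10000
dropped = inverted_dropout(activations, drop_prob=0.5, rng=rng)
mean_out = sum(dropped) / len(dropped)
```

Because each unit is masked independently of the others, this baseline takes no account of correlations among the inputs, which is the gap the abstract says the proposed method addresses.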
