Self-adjusting Population Sizes for the $$-EA on Monotone Functions

Paper and Code

Apr 01, 2022

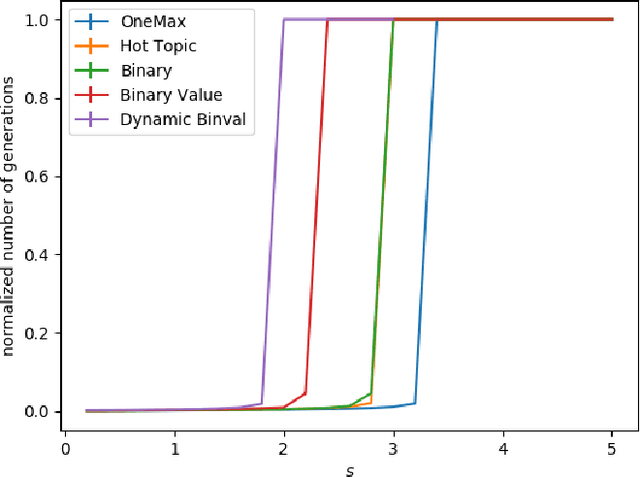

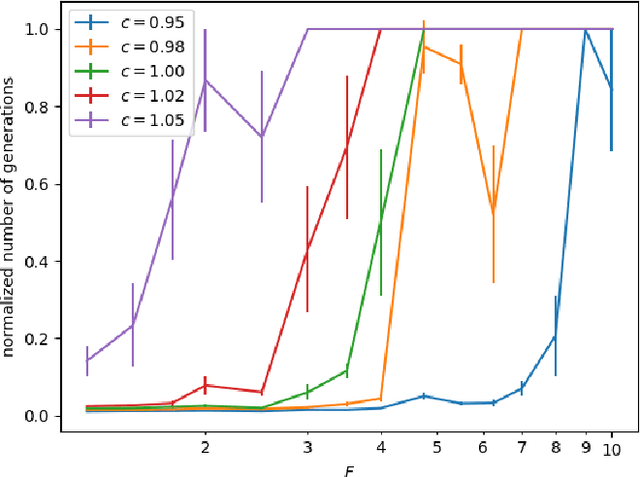

We study the $(1,\lambda)$-EA with mutation rate $c/n$ for $c\le 1$, where the population size is adaptively controlled with the $(1:s+1)$-success rule. Recently, Hevia Fajardo and Sudholt have shown that this setup with $c=1$ is efficient on \onemax for $s<1$, but inefficient if $s \ge 18$. Surprisingly, the hardest part is not close to the optimum, but rather at linear distance. We show that this behavior is not specific to \onemax. If $s$ is small, then the algorithm is efficient on all monotone functions, and if $s$ is large, then it needs superpolynomial time on all monotone functions. In the former case, for $c<1$ we show a $O(n)$ upper bound for the number of generations and $O(n\log n)$ for the number of function evaluations, and for $c=1$ we show $O(n\log n)$ generations and $O(n^2\log\log n)$ evaluations. We also show formally that optimization is always fast, regardless of $s$, if the algorithm starts in proximity of the optimum. All results also hold in a dynamic environment where the fitness function changes in each generation.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge