Score-Based Generative Models for Molecule Generation

Paper and Code

Mar 07, 2022

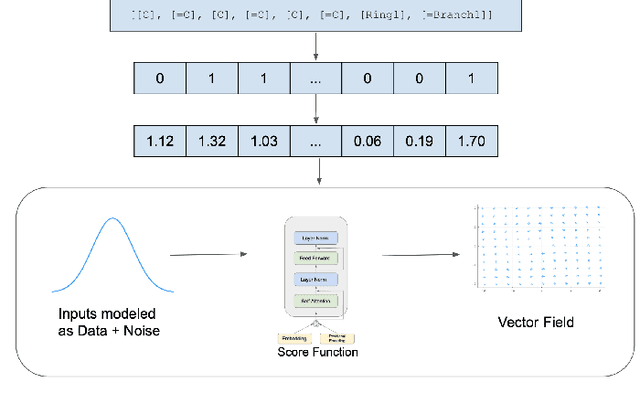

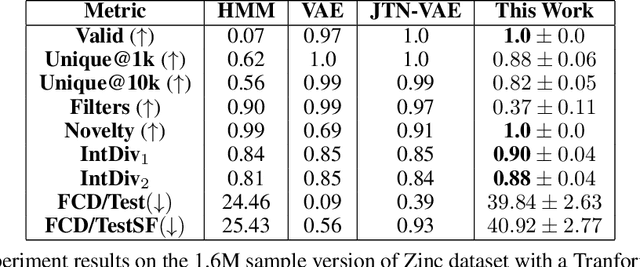

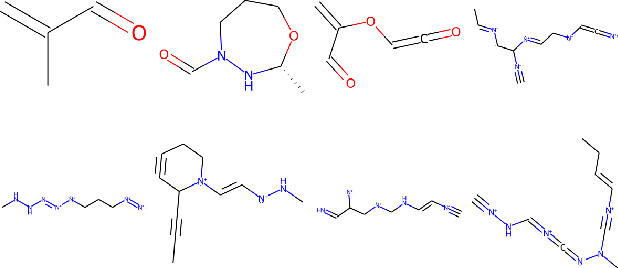

Recent advances in generative models have made it easier to explore design spaces for de novo molecule generation. However, popular generative models face well-known challenges: GANs suffer from training instabilities caused by adversarial training, and normalizing flows are subject to architectural constraints. Score-based generative models sidestep these challenges by modelling the gradient of the log probability density with a score function approximation, rather than modelling the density function directly, and by drawing samples with annealed Langevin dynamics. We believe that score-based generative models could open up new opportunities in molecule generation because of their architectural flexibility, for example by using an SE(3)-equivariant model as the score function. In this work, we lay the foundations by testing the efficacy of score-based models for molecule generation. We train a Transformer-based score function on Self-Referencing Embedded Strings (SELFIES) representations of 1.5 million samples from the ZINC dataset and use the MOSES benchmarking framework to evaluate the generated samples on a suite of metrics.
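To make the sampling idea concrete, the following is a minimal sketch of annealed Langevin dynamics on a one-dimensional toy problem. It is not the paper's implementation: the analytic `gaussian_score` stands in for the learned Transformer-based score function, and all names, the noise schedule, and the hyperparameters (`eps`, `steps_per_level`, the range of `sigmas`) are illustrative assumptions. The step size at each noise level is scaled by the squared noise level, a schedule commonly used with this sampler.

```python
import numpy as np


def gaussian_score(x, sigma, mean=2.0, data_std=0.5):
    """Exact score (gradient of the log density) of a toy Gaussian target
    N(mean, data_std^2) after adding Gaussian noise of scale sigma.
    Assumption: this replaces a trained score network for illustration."""
    return -(x - mean) / (data_std**2 + sigma**2)


def annealed_langevin(score_fn, x0, sigmas, steps_per_level=100, eps=2e-5, rng=None):
    """Run Langevin updates at each noise level, from the largest sigma to the smallest."""
    rng = np.random.default_rng() if rng is None else rng
    x = np.array(x0, dtype=float)
    for sigma in sigmas:
        # Step size shrinks with the noise level (relative to the final level).
        alpha = eps * (sigma / sigmas[-1]) ** 2
        for _ in range(steps_per_level):
            z = rng.standard_normal(x.shape)
            # Langevin update: drift along the score plus injected Gaussian noise.
            x = x + 0.5 * alpha * score_fn(x, sigma) + np.sqrt(alpha) * z
    return x


# Hypothetical setup: 10 geometrically spaced noise levels, 2000 parallel chains.
rng = np.random.default_rng(0)
sigmas = np.geomspace(10.0, 0.01, 10)
x0 = rng.uniform(-10.0, 10.0, size=2000)
samples = annealed_langevin(gaussian_score, x0, sigmas, rng=rng)
print(samples.mean(), samples.std())
```

In this toy setting the chains start far from the target and end up concentrated near the target mean with roughly the target spread. With a learned score function, `gaussian_score` would be replaced by a model that takes the noisy input and the noise level.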
