Scientific Inference With Interpretable Machine Learning: Analyzing Models to Learn About Real-World Phenomena

Paper and Code

Jun 11, 2022

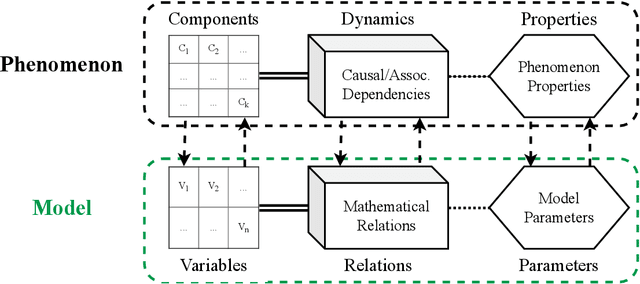

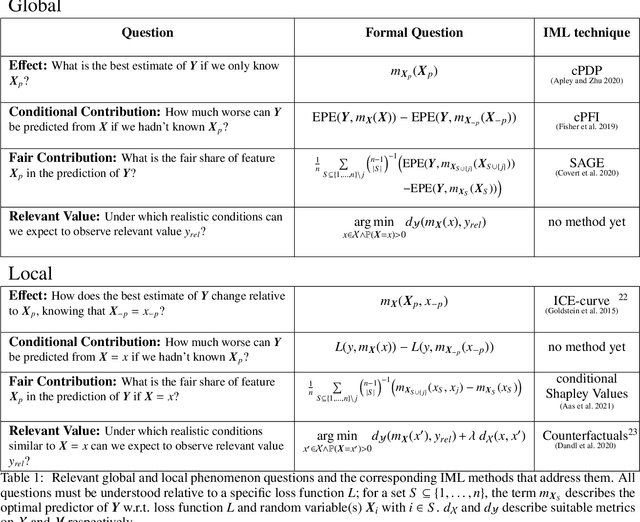

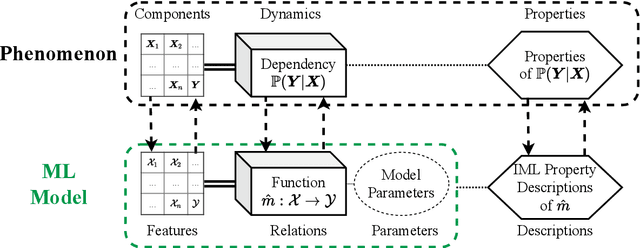

Interpretable machine learning (IML) is concerned with the behavior and properties of machine learning models. Scientists, however, are interested in the model only as a gateway to understanding the modeled phenomenon. We show how to develop IML methods so that they give insight into relevant properties of the phenomenon. We argue that current IML research conflates two goals of model analysis: model audit and scientific inference. As a result, it remains unclear whether a model interpretation has a corresponding phenomenon interpretation. Building on statistical decision theory, we show that ML model analysis allows us to describe relevant aspects of the joint data probability distribution. We provide a five-step framework for constructing IML descriptors that can help address scientific questions, including a natural way to quantify epistemic uncertainty. Our phenomenon-centric approach to IML in science clarifies the opportunities and limitations of IML for inference; that conditional, not marginal, sampling is required; and the conditions under which we can trust IML methods.
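The distinction between conditional and marginal sampling can be made concrete with a small simulation. The sketch below is illustrative only and is not taken from the paper: the bivariate Gaussian data, the stand-in `model` function, and the loss-based importance score are all assumptions chosen so that the conditional distribution of one feature given the other is known in closed form.

```python
import numpy as np

rng = np.random.default_rng(0)
n, rho = 100_000, 0.9

# Hypothetical phenomenon: X1 and X2 are standard normal with correlation rho,
# and the target depends on X2 only.
x1 = rng.standard_normal(n)
x2 = rho * x1 + np.sqrt(1 - rho**2) * rng.standard_normal(n)
y = x2 + 0.1 * rng.standard_normal(n)

def model(a, b):
    # Stand-in for a fitted model; here the true regression function E[Y | X1, X2] = X2.
    return b

def mse(pred):
    return float(np.mean((y - pred) ** 2))

base_loss = mse(model(x1, x2))

# Marginal sampling: permute X2, which breaks its dependence on X1.
x2_marginal = rng.permutation(x2)

# Conditional sampling: redraw X2 from P(X2 | X1), known analytically for this toy setup.
x2_conditional = rho * x1 + np.sqrt(1 - rho**2) * rng.standard_normal(n)

# Loss increase when X2 is replaced by each kind of sample.
imp_marginal = mse(model(x1, x2_marginal)) - base_loss
imp_conditional = mse(model(x1, x2_conditional)) - base_loss

# Correlation between X1 and the resampled X2 shows whether the joint distribution is preserved.
corr_marginal = float(np.corrcoef(x1, x2_marginal)[0, 1])
corr_conditional = float(np.corrcoef(x1, x2_conditional)[0, 1])

print(f"marginal:    importance={imp_marginal:.2f}, corr(X1, X2')={corr_marginal:.2f}")
print(f"conditional: importance={imp_conditional:.2f}, corr(X1, X2')={corr_conditional:.2f}")
```

In this toy setup the permuted feature is nearly uncorrelated with X1, so the model is evaluated on points that the joint distribution rarely produces, whereas the conditional draws keep the correlation close to `rho`. The conditional score is smaller because it reflects only what X2 contributes beyond X1. With real data the conditional distribution is unknown and would have to be estimated.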
