Scientific Discovery and the Cost of Measurement -- Balancing Information and Cost in Reinforcement Learning


Dec 14, 2021

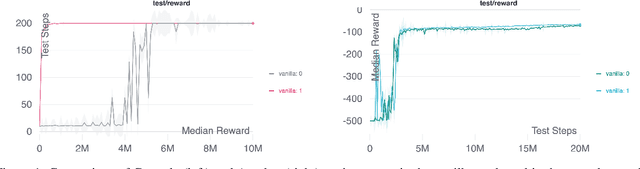

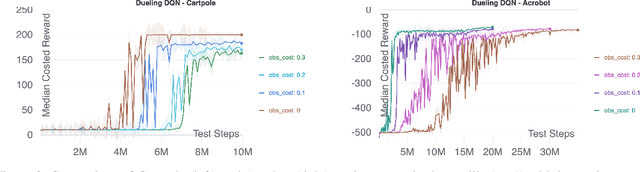

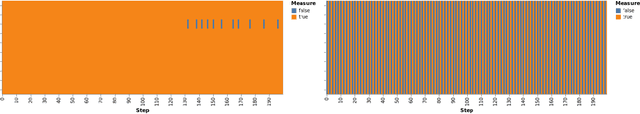

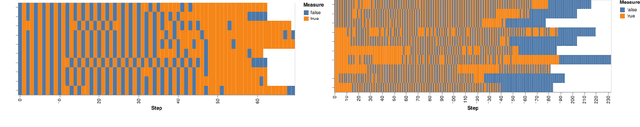

The use of reinforcement learning (RL) in scientific applications, such as materials design and automated chemistry, is increasing. A major challenge, however, lies in the fact that measuring the state of the system is often costly and time-consuming in scientific applications, whereas policy learning with RL requires a measurement after each time step. In this work, we make the measurement costs explicit in the form of a costed reward and propose a framework that enables off-the-shelf deep RL algorithms to learn a policy for both selecting actions and determining whether or not to measure the current state of the system at each time step. In this way, the agents learn to balance the need for information with the cost of information. Our results show that when trained under this regime, the Dueling DQN and PPO agents can learn optimal action policies whilst making up to 50% fewer state measurements, and recurrent neural networks can produce a greater than 50% reduction in measurements. We postulate that these reductions can help to lower the barrier to applying RL to real-world scientific applications.
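The idea of a costed reward with a per-step measure/don't-measure choice can be illustrated with a small environment wrapper. This is a minimal sketch under stated assumptions, not the authors' implementation: the `ToyChain` environment, the `MeasurementCostWrapper` class, the `run_episode` helper, the `measurement_cost` value, and the choice to return the last measured observation when the agent declines to measure are all hypothetical and chosen only for illustration.

```python
class ToyChain:
    """Hypothetical 1-D chain: start at position 0, goal at `length - 1`."""

    def __init__(self, length=5):
        self.length = length
        self.pos = 0

    def reset(self):
        self.pos = 0
        return self.pos

    def step(self, action):
        # Action 1 moves right, any other action moves left (clipped to the chain).
        delta = 1 if action == 1 else -1
        self.pos = min(max(self.pos + delta, 0), self.length - 1)
        done = self.pos == self.length - 1
        reward = 1.0 if done else 0.0
        return self.pos, reward, done


class MeasurementCostWrapper:
    """Extends each action to a pair (action, measure).

    Assumption: when `measure` is False, the agent receives the last
    measured observation again instead of the true current state.
    Assumption: each measurement subtracts a fixed cost from the reward.
    """

    def __init__(self, env, measurement_cost=0.1):
        self.env = env
        self.measurement_cost = measurement_cost
        self.last_obs = None
        self.num_measurements = 0

    def reset(self):
        # The initial state is observed for free in this sketch.
        self.last_obs = self.env.reset()
        self.num_measurements = 0
        return self.last_obs

    def step(self, action_pair):
        action, measure = action_pair
        true_obs, reward, done = self.env.step(action)
        if measure:
            # Pay for fresh information about the system state.
            self.last_obs = true_obs
            self.num_measurements += 1
            reward -= self.measurement_cost
        return self.last_obs, reward, done


def run_episode(env, policy, max_steps=100):
    """Roll out `policy(obs, t) -> (action, measure)`; return (return, measurements)."""
    obs = env.reset()
    total, done, t = 0.0, False, 0
    while not done and t < max_steps:
        obs, reward, done = env.step(policy(obs, t))
        total += reward
        t += 1
    return total, env.num_measurements


if __name__ == "__main__":
    always = run_episode(MeasurementCostWrapper(ToyChain()), lambda obs, t: (1, True))
    never = run_episode(MeasurementCostWrapper(ToyChain()), lambda obs, t: (1, False))
    print("always measure:", always)
    print("never measure:", never)
```

In this toy chain, moving right is always correct, so a policy that never measures reaches the goal in the same four steps as one that always measures but avoids all four measurement costs. A learned policy in a less predictable system would need to measure whenever the stale observation no longer supports a good action choice, which is the trade-off the abstract describes.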
