SCFusion: Real-time Incremental Scene Reconstruction with Semantic Completion


Oct 26, 2020

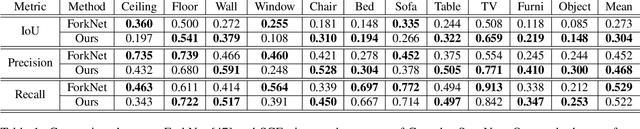

Real-time scene reconstruction from depth data inevitably suffers from occlusion, which leads to incomplete 3D models. Partial reconstructions, in turn, limit the performance of the algorithms that rely on them in applications such as augmented reality, robotic navigation, and 3D mapping. Most methods address this issue by predicting the missing geometry in an offline optimization, which makes them incompatible with real-time applications. We propose a framework that ameliorates this issue by performing scene reconstruction and semantic scene completion jointly, incrementally, and in real time from an input sequence of depth maps. Our framework relies on a novel neural architecture designed to process occupancy maps and leverages voxel states to accurately and efficiently fuse semantic completion with the global 3D model. We evaluate the proposed approach quantitatively and qualitatively, demonstrating that our method obtains accurate 3D semantic scene completion in real time.
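
The abstract does not spell out how voxel states guide the fusion step, so the following is only a minimal sketch of one plausible rule, not the authors' implementation. It assumes three hypothetical states (`UNKNOWN`, `OBSERVED`, `PREDICTED`) and assumes that a completion network's output is written only into voxels the depth sensor has not observed, so that measured geometry is never overwritten by a prediction. The names `VoxelState` and `fuse_completion` are illustrative and do not come from the paper.

```python
from enum import Enum


class VoxelState(Enum):
    """Hypothetical per-voxel states; the paper's actual states may differ."""
    UNKNOWN = 0    # never seen by the sensor, never predicted
    OBSERVED = 1   # integrated from a depth map
    PREDICTED = 2  # filled in by the completion network


def fuse_completion(states, labels, predicted_labels):
    """Fuse network predictions into a flat voxel grid.

    Assumed rule: OBSERVED voxels keep their label; every other voxel
    takes the predicted label (if one exists) and becomes PREDICTED.
    Returns new lists and leaves the inputs unchanged.
    """
    new_states = list(states)
    new_labels = list(labels)
    for i, predicted in enumerate(predicted_labels):
        if states[i] is VoxelState.OBSERVED:
            continue  # trust the sensor over the network
        if predicted is None:
            continue  # the network made no prediction for this voxel
        new_states[i] = VoxelState.PREDICTED
        new_labels[i] = predicted
    return new_states, new_labels


states = [VoxelState.OBSERVED, VoxelState.UNKNOWN, VoxelState.PREDICTED]
labels = ["wall", None, "chair"]
predicted = ["floor", "table", "sofa"]
fused_states, fused_labels = fuse_completion(states, labels, predicted)
```

Under this assumed rule, the observed voxel keeps its `"wall"` label, while the unknown and previously predicted voxels take the new predictions. In an incremental pipeline, a later depth map that observes a predicted voxel would presumably switch it to `OBSERVED` and replace the predicted value.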
