Scene Editing as Teleoperation: A Case Study in 6DoF Kit Assembly


Oct 09, 2021

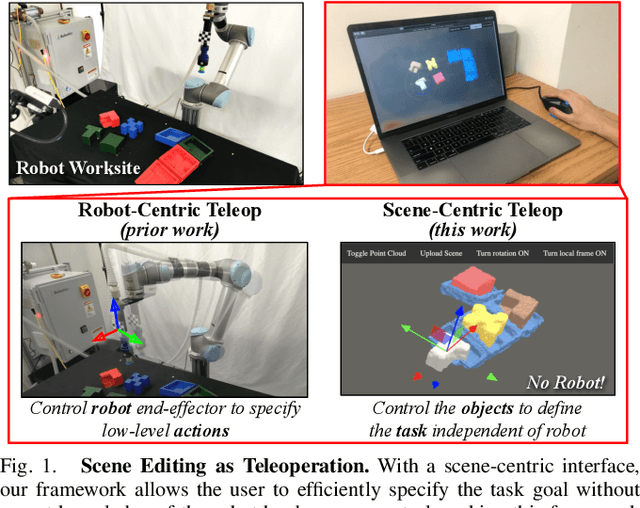

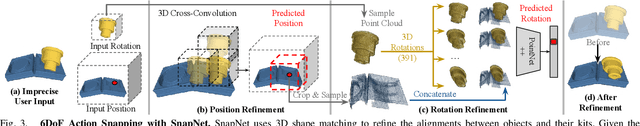

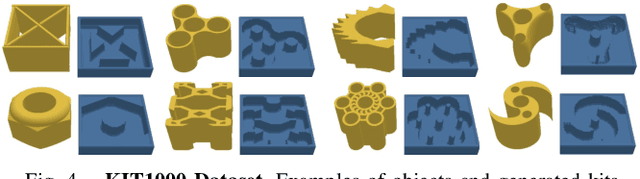

Studies in robot teleoperation have centered on action specifications, from continuous joint control to discrete end-effector pose control. However, these robot-centric interfaces often require skilled operators with extensive robotics expertise. To make teleoperation accessible to non-expert users, we propose the framework "Scene Editing as Teleoperation" (SEaT), whose key idea is to transform the traditional "robot-centric" interface into a "scene-centric" interface: instead of controlling the robot, users specify the task's goal by manipulating digital twins of the real-world objects. As a result, a user can perform teleoperation without any expert knowledge of the robot hardware. To achieve this, we use a category-agnostic scene-completion algorithm that translates the real-world workspace (with unknown objects) into a manipulable virtual scene representation, and an action-snapping algorithm that refines the user input before generating the robot's action plan. To train the algorithms, we procedurally generated a large-scale, diverse kit-assembly dataset of object-kit pairs that mimic real-world object-kitting tasks. Our experiments in simulation and on a real-world system demonstrate that our framework improves both the efficiency and the success rate of 6DoF kit-assembly tasks. A user study demonstrates that participants using the SEaT framework achieve a higher task success rate and report a lower subjective workload than with an alternative robot-centric interface. A video is available at https://www.youtube.com/watch?v=-NdR3mkPbQQ .
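The abstract does not describe how action snapping works internally, so the following is only a toy illustration of the general idea of refining a rough user-specified 6DoF pose; it is not the SEaT algorithm. It assumes a small set of known candidate poses and picks the one closest to the user's input. The names `quat_angle` and `snap_pose`, the cost function, and the `rot_weight` value are all hypothetical choices made for this sketch.

```python
import math

def quat_angle(q1, q2):
    """Angle in radians between two unit quaternions given as (w, x, y, z)."""
    # abs() treats q and -q as the same rotation; min() guards against rounding.
    dot = abs(sum(a * b for a, b in zip(q1, q2)))
    return 2.0 * math.acos(min(1.0, dot))

def snap_pose(user_pos, user_quat, candidates, rot_weight=0.05):
    """Return the candidate (position, quaternion) closest to a rough user pose.

    Hypothetical cost: translation distance (metres)
    plus rot_weight times the rotation angle (radians).
    """
    def cost(candidate):
        pos, quat = candidate
        return math.dist(user_pos, pos) + rot_weight * quat_angle(user_quat, quat)
    return min(candidates, key=cost)

# Illustrative candidate poses: the same slot at two yaw angles, plus a far slot.
identity = (1.0, 0.0, 0.0, 0.0)
yaw_90 = (math.cos(math.pi / 4), 0.0, 0.0, math.sin(math.pi / 4))
candidates = [
    ((0.10, 0.00, 0.02), identity),
    ((0.10, 0.00, 0.02), yaw_90),
    ((0.30, 0.20, 0.02), identity),
]

# A rough user placement: about 2 cm off in position and 10 degrees off in yaw.
half = math.radians(10) / 2
rough_quat = (math.cos(half), 0.0, 0.0, math.sin(half))
snapped = snap_pose((0.12, 0.01, 0.03), rough_quat, candidates)
```

In this sketch the rough placement snaps to the first candidate, since it is nearest in both position and orientation. A learned refinement model, as the abstract implies with its training dataset, would replace the hand-written cost and the fixed candidate list.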
