Scaling Object Detection by Transferring Classification Weights


Sep 15, 2019

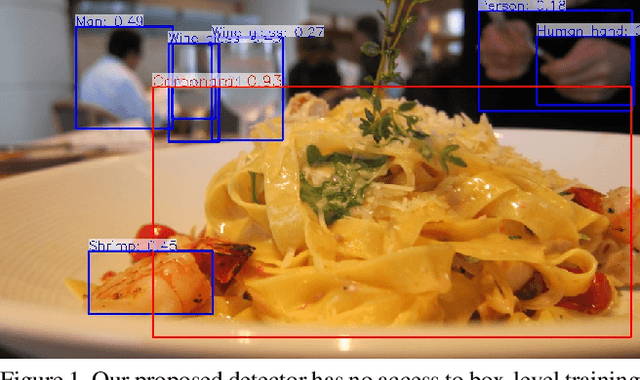

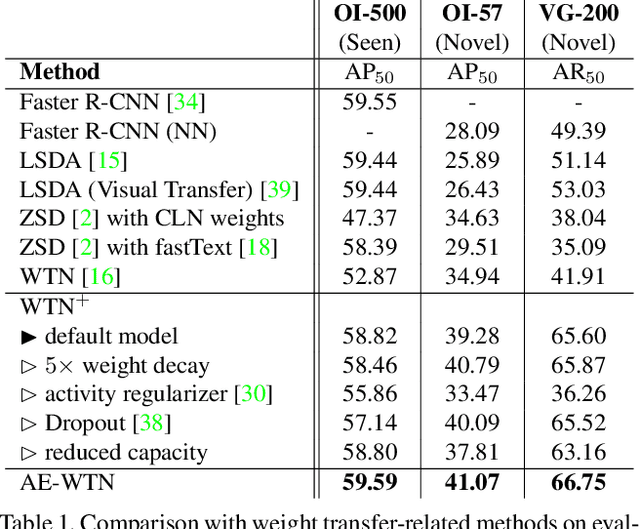

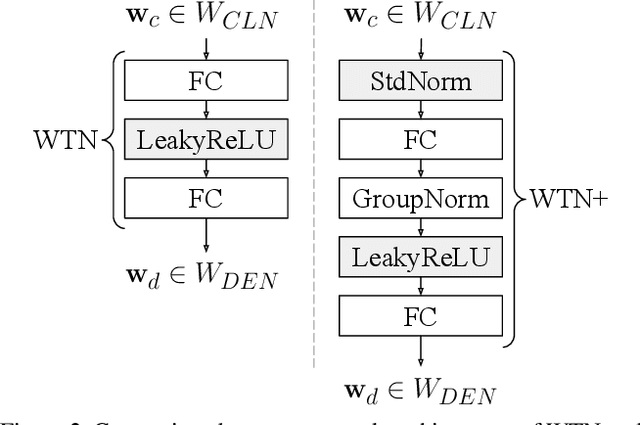

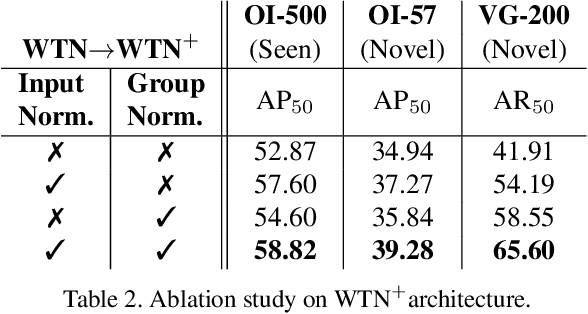

Large-scale object detection datasets keep growing in both the number of classes and the annotation count. Yet the number of object-level categories annotated in detection datasets is an order of magnitude smaller than the number of image-level classification labels. State-of-the-art object detection models are trained in a supervised fashion, which limits the number of object classes they can detect. In this paper, we propose a novel weight transfer network (WTN) to effectively and efficiently transfer knowledge from a classification network's weights to a detection network's weights, allowing detection of novel classes without box supervision. We first introduce input and feature normalization schemes to curb under-fitting during training of a vanilla WTN. We then propose autoencoder-WTN (AE-WTN), which uses a reconstruction loss to preserve the classification network's information over all classes in the target latent space and so ensure generalization to novel classes. Compared to vanilla WTN, AE-WTN obtains absolute performance gains of 6% on two Open Images evaluation sets with 500 seen and 57 novel classes, respectively, and 25% on a Visual Genome evaluation set with 200 novel classes. The code is available at https://github.com/xternalz/AE-WTN.
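The idea in the abstract can be illustrated with a small NumPy sketch. It is not the authors' implementation (see the repository linked above for that). It assumes a single-layer encoder and decoder with a tanh nonlinearity, L2 normalization as the normalization scheme, and cosine-similarity scoring; all sizes, parameter names, and function names here are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def l2_normalize(x, axis=-1, eps=1e-12):
    """Scale vectors along `axis` to unit L2 norm."""
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + eps)

# Hypothetical sizes, chosen only for illustration.
num_classes, d_cls, d_det = 8, 16, 12

# Stand-in for the classification network's per-class weight vectors.
w_cls = rng.normal(size=(num_classes, d_cls))

# Hypothetical encoder/decoder parameters (these would be learned in practice).
enc = rng.normal(scale=0.1, size=(d_cls, d_det))
dec = rng.normal(scale=0.1, size=(d_det, d_cls))

def encode(w):
    """Map normalized classification weights to detection-space weights."""
    return np.tanh(l2_normalize(w) @ enc)

def reconstruction_loss(w):
    """Mean squared error between the normalized inputs and their reconstruction.

    This term needs no box labels, so it can be computed over all classes,
    seen and novel alike.
    """
    w_in = l2_normalize(w)
    w_rec = encode(w) @ dec
    return float(np.mean((w_rec - w_in) ** 2))

def detection_scores(region_feats, w):
    """Cosine-similarity scores of region features against predicted class weights."""
    return l2_normalize(region_feats) @ l2_normalize(encode(w)).T

# Random stand-ins for detector region features.
region_feats = rng.normal(size=(5, d_det))
scores = detection_scores(region_feats, w_cls)   # one row per region, one column per class
loss = reconstruction_loss(w_cls)
```

In this sketch a detection loss would only be available for classes with box annotations, while the reconstruction term covers every class the classifier knows, which is the intuition the abstract gives for why AE-WTN generalizes better to novel classes.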
