Scaling Novel Object Detection with Weakly Supervised Detection Transformers


Jul 11, 2022

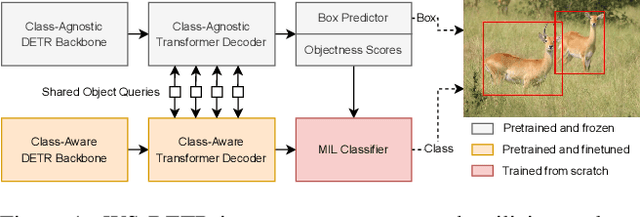

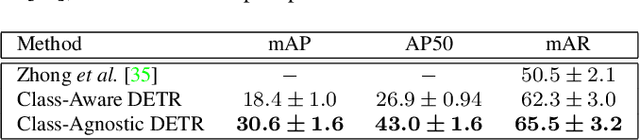

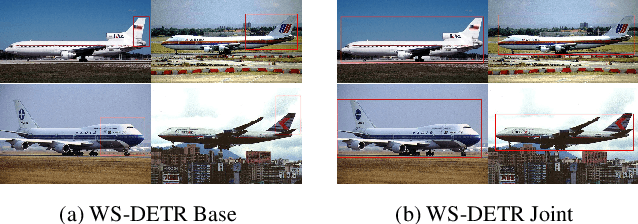

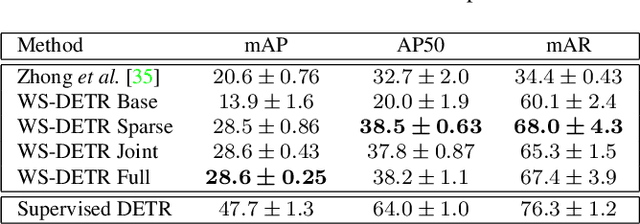

Weakly supervised object detection (WSOD) enables object detectors to be trained using only image-level class labels. However, the practical application of current WSOD models is limited, as they operate at small scales and require extensive training and refinement. We propose the Weakly Supervised Detection Transformer, which enables efficient knowledge transfer from a large-scale pretraining dataset to WSOD finetuning on hundreds of novel objects. We leverage pretrained knowledge to improve the multiple instance learning framework used in WSOD, and experiments show that our approach outperforms the state of the art on datasets with twice as many novel classes as previously shown.

* CVPR 2022 Workshop on Attention and Transformers in Vision
