Scalable Thompson Sampling using Sparse Gaussian Process Models

Paper and Code

Jun 09, 2020

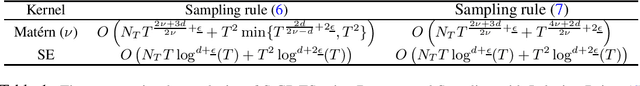

Thompson Sampling (TS) with Gaussian Process (GP) models is a powerful tool for optimizing non-convex objective functions. Despite favourable theoretical properties, the computational complexity of the standard algorithms quickly becomes prohibitive as the number of observation points grows. Scalable TS methods can be implemented using sparse GP models, but at the price of an approximation error that invalidates the existing regret bounds. Here, we prove regret bounds for TS based on approximate GP posteriors. Applied to sparse GPs, these bounds show a drastic improvement in computational complexity with no loss in the order of regret performance. In addition, an immediate implication of our results is an improved regret bound for exact GP-TS. Specifically, we show an $\tilde{O}(\sqrt{\gamma_T T})$ bound on regret, which is an $O(\sqrt{\gamma_T})$ improvement over the existing results, where $T$ is the time horizon and $\gamma_T$ is an upper bound on the information gain. This improvement is important to ensure sublinear regret bounds.
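
To see why the $O(\sqrt{\gamma_T})$ factor matters for sublinearity, the two rates can be compared directly. The following is a sketch under stated assumptions: $R_T$ is used here as shorthand for the cumulative regret (a symbol not used in the abstract), the existing rate is inferred from the abstract's statement that the new bound improves on it by $O(\sqrt{\gamma_T})$, and polylogarithmic factors hidden by $\tilde{O}$ are ignored.

$$
\begin{aligned}
\text{existing:}\quad & R_T = \tilde{O}\big(\gamma_T \sqrt{T}\big)
  && \Longrightarrow\quad \frac{R_T}{T} \to 0 \ \text{ when } \ \gamma_T = o\big(\sqrt{T}\big), \\
\text{improved:}\quad & R_T = \tilde{O}\big(\sqrt{\gamma_T T}\big)
  && \Longrightarrow\quad \frac{R_T}{T} \to 0 \ \text{ when } \ \gamma_T = o(T), \\
\text{ratio:}\quad & \frac{\gamma_T \sqrt{T}}{\sqrt{\gamma_T T}} = \sqrt{\gamma_T}.
\end{aligned}
$$

Under this reading, the improved bound stays sublinear whenever $\gamma_T$ grows more slowly than $T$, whereas the existing bound needs $\gamma_T$ to grow more slowly than $\sqrt{T}$. For kernels whose information gain grows polynomially in $T$ with an exponent of at least $1/2$, only the improved rate gives a meaningful guarantee.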
