Safety Enhancement for Deep Reinforcement Learning in Autonomous Separation Assurance

Paper and Code

May 05, 2021

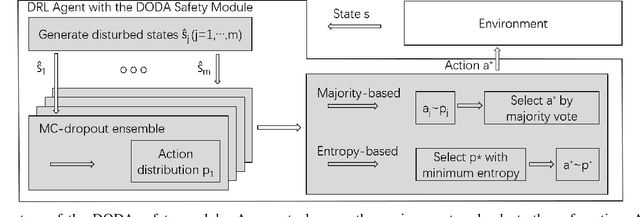

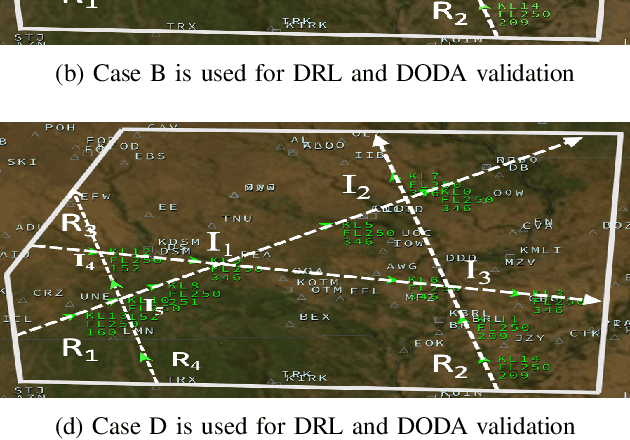

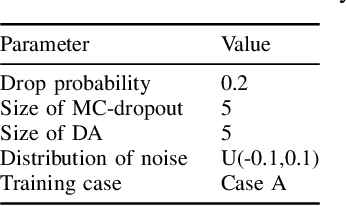

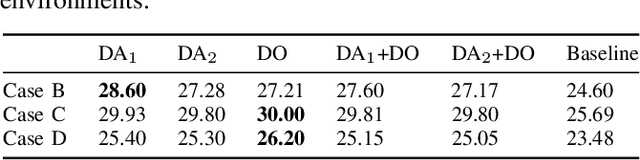

The separation assurance task will be extremely challenging for air traffic controllers in a complex and high density airspace environment. Deep reinforcement learning (DRL) was used to develop an autonomous separation assurance framework in our previous work where the learned model advised speed maneuvers. In order to improve the safety of this model in unseen environments with uncertainties, in this work we propose a safety module for DRL in autonomous separation assurance applications. The proposed module directly addresses both model uncertainty and state uncertainty to improve safety. Our safety module consists of two sub-modules: (1) the state safety sub-module is based on the execution-time data augmentation method to introduce state disturbances in the model input state; (2) the model safety sub-module is a Monte-Carlo dropout extension that learns the posterior distribution of the DRL model policy. We demonstrate the effectiveness of the two sub-modules in an open-source air traffic simulator with challenging environment settings. Through extensive numerical experiments, our results show that the proposed sub-safety modules help the DRL agent significantly improve its safety performance in an autonomous separation assurance task.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge