RSGAN: Face Swapping and Editing using Face and Hair Representation in Latent Spaces

Apr 18, 2018

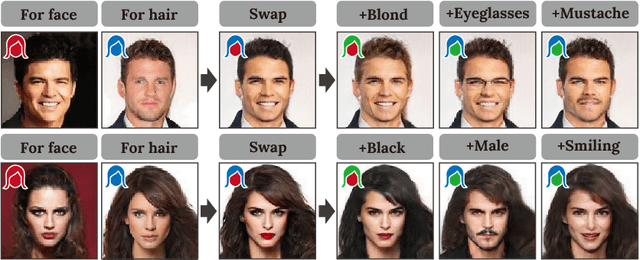

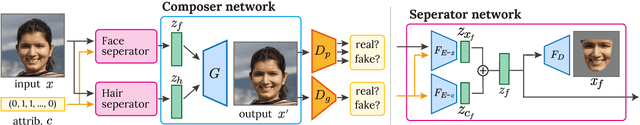

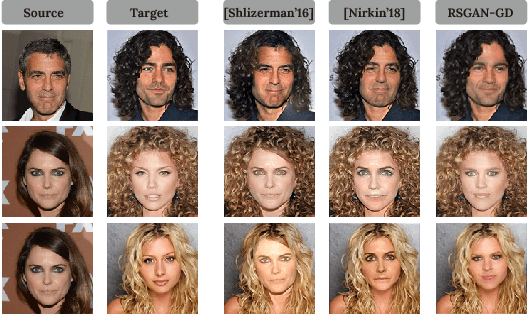

In this paper, we present an integrated system for automatically generating and editing face images through face swapping, attribute-based editing, and random face parts synthesis. The proposed system is based on a deep neural network that variationally learns the face and hair regions from large-scale face image datasets. Unlike conventional variational methods, the proposed network represents the latent spaces for faces and hair individually. We refer to the proposed network as the region-separative generative adversarial network (RSGAN). Because the network handles face and hair appearances independently in the latent spaces, face swapping is achieved by replacing the latent-space representation of the face and reconstructing the entire face image from the result. This latent-space approach performs face swapping robustly, even for images on which previous methods fail because of inappropriate fitting of the 3D morphable models. In addition, the proposed system can further edit face-swapped images with the same network by manipulating visual attributes or by composing them with randomly generated face or hair parts.
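The latent-space swap described above can be illustrated with a minimal Python sketch. It is a toy under stated assumptions, not the RSGAN implementation: the names `encode_face`, `encode_hair`, `compose`, and `swap_face`, the dimensions, and the random linear maps standing in for trained networks are all hypothetical. It shows only the data flow, in which the face code comes from one image, the hair code from another, and a single decoder reconstructs the result.

```python
import numpy as np

rng = np.random.default_rng(0)

IMG_DIM = 64  # size of a flattened toy "region" (assumption)
Z_DIM = 8     # latent size per region (assumption)

# Hypothetical stand-ins for trained networks: fixed random linear maps.
W_face = rng.normal(size=(Z_DIM, IMG_DIM))
W_hair = rng.normal(size=(Z_DIM, IMG_DIM))
W_dec = rng.normal(size=(IMG_DIM, 2 * Z_DIM))


def encode_face(face_region):
    """Map a face region to its own latent code."""
    return W_face @ face_region


def encode_hair(hair_region):
    """Map a hair region to a separate latent code."""
    return W_hair @ hair_region


def compose(z_face, z_hair):
    """Reconstruct a full image from the two region codes."""
    return W_dec @ np.concatenate([z_face, z_hair])


def swap_face(source, target):
    """Take the face code from `source` and the hair code from `target`."""
    z_face = encode_face(source["face"])
    z_hair = encode_hair(target["hair"])
    return compose(z_face, z_hair)


# Two toy "images", each already split into face and hair regions.
img_a = {"face": rng.normal(size=IMG_DIM), "hair": rng.normal(size=IMG_DIM)}
img_b = {"face": rng.normal(size=IMG_DIM), "hair": rng.normal(size=IMG_DIM)}

swapped = swap_face(img_a, img_b)  # face of A composed with hair of B
```

Because the two codes are kept separate, the same `compose` step could in principle also accept an edited or randomly sampled code in place of either encoder output, which is the kind of reuse the abstract attributes to the single network.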
