Robust Transfer Learning for Active Level Set Estimation with Locally Adaptive Gaussian Process Prior


Oct 08, 2024

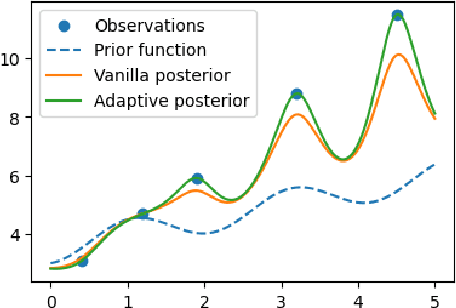

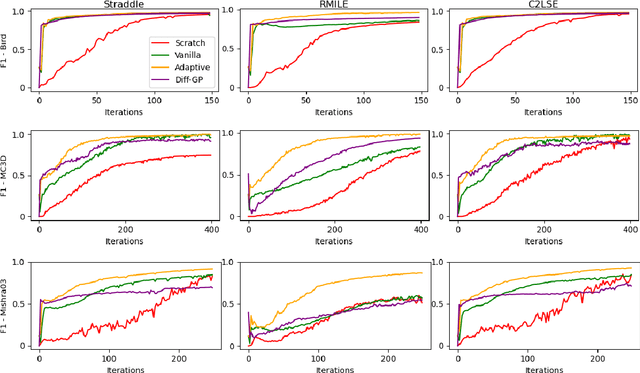

Active level set estimation for a black-box function aims to identify the regions where the function's values exceed or fall below a specified threshold, iteratively evaluating the function to gather more information about it. This is particularly important when function evaluations are costly, which drastically limits the ability to acquire large datasets. A promising way to model the black-box function sample-efficiently is to incorporate prior knowledge from a related function. However, this approach risks slowing down the estimation task if the prior knowledge is irrelevant or misleading. In this paper, we present a novel transfer learning method for active level set estimation that safely integrates given prior knowledge while continually adjusting it, guaranteeing robust performance of the level set estimation algorithm even when the prior knowledge is irrelevant. We theoretically analyze this algorithm and show that it achieves better level set convergence than standard transfer learning approaches that make no adjustment to the prior. Additionally, extensive experiments across multiple datasets confirm the effectiveness of our method when applied to various level set estimation algorithms and transfer learning scenarios.
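To make the setting concrete, the following is a minimal sketch of the baseline that the abstract contrasts against: active level set estimation with a Gaussian process whose prior mean is taken, unadjusted, from a related function. It is not the paper's locally adaptive method. The acquisition rule is the well-known straddle heuristic, swapped in for illustration, and the RBF kernel, the length scale, the toy functions, and all names (`gp_posterior`, `active_lse`, `related`) are assumptions that do not come from the paper.

```python
import numpy as np

def rbf(a, b, length=0.2):
    """Unit-variance RBF kernel between two 1-D point sets (assumed kernel)."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length) ** 2)

def gp_posterior(x_train, y_train, x_test, prior_mean, noise=1e-4):
    """GP posterior mean and std, using prior_mean as a fixed prior mean."""
    K = rbf(x_train, x_train) + noise * np.eye(len(x_train))
    Ks = rbf(x_test, x_train)
    resid = y_train - prior_mean(x_train)
    mean = prior_mean(x_test) + Ks @ np.linalg.solve(K, resid)
    var = 1.0 - np.einsum("ij,ji->i", Ks, np.linalg.solve(K, Ks.T))
    return mean, np.sqrt(np.clip(var, 0.0, None))

def active_lse(f, prior_mean, threshold, grid, n_iter=25, beta=1.96):
    """Query f where the GP is most ambiguous about the threshold."""
    x = grid[[len(grid) // 2]]          # start from the middle of the grid
    y = f(x)
    for _ in range(n_iter):
        mean, std = gp_posterior(x, y, grid, prior_mean)
        # Straddle heuristic: favor points that are uncertain and near the threshold.
        score = beta * std - np.abs(mean - threshold)
        x_next = grid[np.argmax(score)]
        x = np.append(x, x_next)
        y = np.append(y, f(x_next))
    mean, _ = gp_posterior(x, y, grid, prior_mean)
    return mean > threshold             # estimated super-level set on the grid

# Toy usage: the target and the related (prior) function are both hypothetical.
grid = np.linspace(0.2, 2.0, 200)
target = lambda x: np.sin(3.0 * x)
related = lambda x: np.sin(3.0 * x + 0.3)   # shifted version used as the prior mean
estimate = active_lse(target, related, threshold=0.0, grid=grid)
accuracy = np.mean(estimate == (target(grid) > 0.0))
```

Here the related function is close to the target, so a fixed prior mean helps. If `related` were replaced by an unrelated function, the same fixed prior could mislead the early queries, which is the failure mode the abstract says its adaptive adjustment is designed to avoid.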
