Robust online identification of thermal models for in-production HPC clusters with machine learning-based data selection


Oct 03, 2018

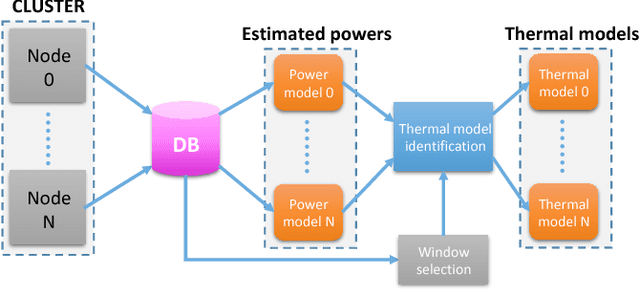

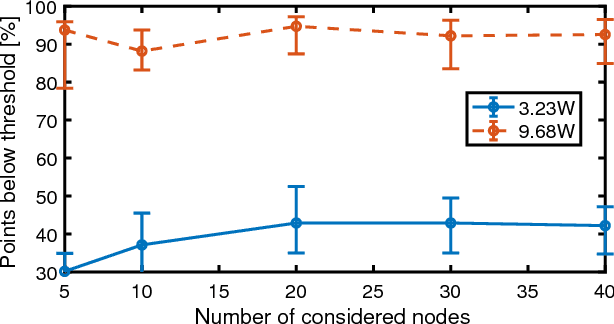

Power and thermal management are critical components of high performance computing (HPC) systems, due to their high power density and large total power consumption. Assessing thermal dissipation by means of compact models identified directly from the thermal response of the final device enables more robust and precise thermal control strategies as well as automated diagnosis. However, for large-scale systems "in production", the accuracy of learned thermal models depends on the dynamics of the power excitation, which in turn depends on the executed workload, and on measurement nonidealities such as quantization. In this paper we show that, using an advanced system identification algorithm, we can generate very accurate thermal models (average error lower than our sensors' quantization step of 1 °C) for a large-scale HPC system running real workloads. However, we also show that: 1) not all real workloads allow a good model to be identified; 2) starting from system identification theory, it is very difficult to evaluate whether a data trace leads to a good estimated model. We then propose and validate a set of techniques based on machine learning and deep learning algorithms for choosing the data traces to be used for model identification. We also show that only deep learning techniques can choose these traces correctly, up to 96% of the time.
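To make the identification problem concrete, the following is a minimal sketch and not the algorithm used in the paper. It assumes a hypothetical first-order discrete-time model T[k+1] = a·T[k] + b·P[k] + c, a synthetic and well-excited power trace, and ordinary least squares as a stand-in for the "advanced system identification algorithm". The parameter values, the function names `simulate`, `fit_arx` and `one_step_mae`, and the trace length are all illustrative assumptions. The sketch fits the model once on ideal temperatures and once on temperatures rounded to a 1 °C step, mimicking sensor quantization.

```python
import numpy as np


def simulate(power, a=0.95, b=0.02, c=1.5, t0=30.0):
    """Simulate the assumed model T[k+1] = a*T[k] + b*P[k] + c.

    The parameter values are illustrative, not taken from the paper.
    """
    temps = np.empty(len(power) + 1)
    temps[0] = t0
    for k, p in enumerate(power):
        temps[k + 1] = a * temps[k] + b * p + c
    return temps


def regressors(temps, power):
    """Build the regression matrix [T[k], P[k], 1] for every step k."""
    return np.column_stack([temps[:-1], power, np.ones(len(power))])


def fit_arx(temps, power):
    """Ordinary least-squares estimate of (a, b, c) from one data trace."""
    theta, *_ = np.linalg.lstsq(regressors(temps, power), temps[1:], rcond=None)
    return theta


def one_step_mae(theta, temps, power):
    """Mean absolute one-step-ahead prediction error on the given trace."""
    prediction = regressors(temps, power) @ theta
    return float(np.mean(np.abs(temps[1:] - prediction)))


# Synthetic, well-excited power trace in watts (an assumption; real
# workloads may excite the system far less, which is the paper's concern).
rng = np.random.default_rng(0)
power = rng.uniform(50.0, 250.0, size=2000)

temps = simulate(power)      # ideal, unquantized temperatures
temps_q = np.round(temps)    # sensor readings with a 1 degC quantization step

theta_clean = fit_arx(temps, power)    # fit on ideal data
theta_q = fit_arx(temps_q, power)      # fit on quantized data
mae_q = one_step_mae(theta_q, temps_q, power)

print("estimated (a, b, c), ideal data:    ", theta_clean)
print("estimated (a, b, c), quantized data:", theta_q)
print("one-step MAE on quantized trace [degC]:", mae_q)
```

On noise-free data the fit recovers the simulated parameters, while on quantized data the estimates shift even though the one-step error stays below the quantization step. A low prediction error on a trace therefore does not by itself show that the trace identifies the underlying model well, which is the kind of difficulty the data-selection step is meant to address.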
