Robust Learning of Recurrent Neural Networks in Presence of Exogenous Noise

May 04, 2021

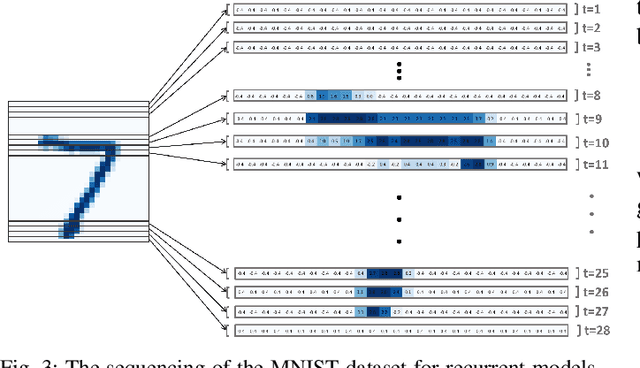

Recurrent neural networks (RNNs) have shown promising potential for learning the dynamics of sequential data. However, artificial neural networks are known to exhibit poor robustness in the presence of input noise, and the sequential architecture of RNNs exacerbates the problem. In this paper, we use ideas from control and estimation theory to propose a tractable robustness analysis for RNN models that are subject to input noise. The variance of the output of the noisy system is adopted as a robustness measure to quantify the impact of noise on learning. We show that this robustness measure can be estimated efficiently using linearization techniques. Using these results, we propose a learning method that enhances the robustness of an RNN with respect to exogenous Gaussian noise with known statistics. Our extensive simulations on benchmark problems show that the proposed methodology significantly improves the robustness of recurrent neural networks.
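To make the idea of estimating output variance by linearization concrete, the sketch below propagates the covariance of the hidden state through a first-order (Jacobian-based) approximation of a small RNN. It is an illustration under stated assumptions, not the paper's algorithm: the abstract does not specify the model, so a vanilla tanh RNN `h_{t+1} = tanh(W h_t + U (x_t + w_t))` with linear readout `y = C h` and zero-mean additive Gaussian input noise `w_t` is assumed, and the names `linearized_output_variance`, `W`, `U`, `C`, and `noise_cov` are hypothetical.

```python
import numpy as np

def linearized_output_variance(W, U, C, xs, noise_cov, h0=None):
    """First-order estimate of the output variance at the final time step.

    Assumed model (not taken from the paper):
        h_{t+1} = tanh(W h_t + U (x_t + w_t)),  w_t ~ N(0, noise_cov)
        y_t     = C h_t
    The hidden-state covariance P is propagated along the noise-free
    trajectory using the Jacobians of the update.
    """
    n = W.shape[0]
    h = np.zeros(n) if h0 is None else h0
    P = np.zeros((n, n))  # the initial state is assumed to be known exactly
    for x in xs:
        h = np.tanh(W @ h + U @ x)   # nominal (noise-free) update
        D = np.diag(1.0 - h ** 2)    # derivative of tanh at the pre-activation
        A = D @ W                    # Jacobian w.r.t. the previous state
        B = D @ U                    # Jacobian w.r.t. the input noise
        P = A @ P @ A.T + B @ noise_cov @ B.T
    # Total output variance: trace of the output covariance C P C^T.
    return float(np.trace(C @ P @ C.T))

# Toy problem with randomly drawn weights (illustrative values only).
rng = np.random.default_rng(0)
n, m, p, T = 4, 2, 1, 20
W = 0.5 * rng.standard_normal((n, n))
U = rng.standard_normal((n, m))
C = rng.standard_normal((p, n))
xs = rng.standard_normal((T, m))
sigma2 = 0.01

var_est = linearized_output_variance(W, U, C, xs, sigma2 * np.eye(m))
print(f"linearized output variance: {var_est:.6f}")
```

Because the estimate is a differentiable function of the weights, a quantity of this kind could in principle be added to a training loss as a penalty term; whether the paper's learning method takes that form is not stated in the abstract.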
