Robust Data Preprocessing for Machine-Learning-Based Disk Failure Prediction in Cloud Production Environments

Paper and Code

Dec 20, 2019

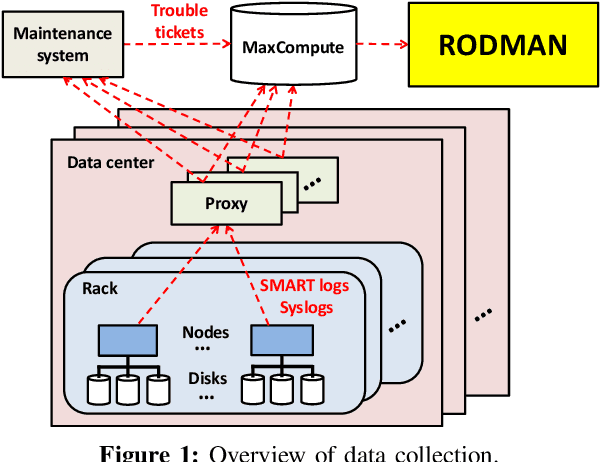

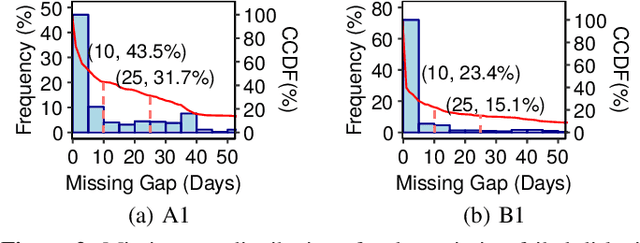

To provide proactive fault tolerance for modern cloud data centers, extensive studies have proposed machine learning (ML) approaches that predict imminent disk failures for early remedy, and have evaluated those approaches directly on public datasets (e.g., Backblaze SMART logs). However, in real-world production environments, the data quality is imperfect (e.g., inaccurate labeling, missing data samples, and complex failure types), which degrades the prediction accuracy. We present RODMAN, a robust data preprocessing pipeline that refines data samples before feeding them into ML models. We start with a large-scale trace-driven study of over three million disks from Alibaba Cloud's data centers, and use it to motivate the practical challenges in ML-based disk failure prediction. We then design RODMAN with three data preprocessing techniques, namely failure-type filtering, spline-based data filling, and automated pre-failure backtracking, that are applicable to general ML models. Evaluation on both the Alibaba and Backblaze datasets shows that RODMAN improves the prediction accuracy over a baseline without data preprocessing under various settings.
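To make the idea of spline-based data filling concrete, the following is a minimal sketch, not RODMAN's actual implementation. The abstract does not specify the spline type or the treatment of boundary gaps, so the sketch assumes a natural cubic spline fitted per attribute over the days with known samples, and it leaves gaps before the first or after the last known sample unfilled. The function names `natural_cubic_spline` and `fill_missing` are hypothetical.

```python
from bisect import bisect_right


def natural_cubic_spline(xs, ys):
    """Return a function that evaluates the natural cubic spline through (xs, ys).

    Assumes xs is strictly increasing and has at least two points.
    """
    n = len(xs) - 1
    if n < 1:
        raise ValueError("need at least two known samples")
    h = [xs[i + 1] - xs[i] for i in range(n)]
    # m[i] is the spline's second derivative at xs[i]; natural ends: m[0] = m[n] = 0.
    m = [0.0] * (n + 1)
    if n >= 2:
        # Tridiagonal system for m[1..n-1], solved with the Thomas algorithm.
        diag = [2.0 * (h[i - 1] + h[i]) for i in range(1, n)]
        rhs = [
            6.0 * ((ys[i + 1] - ys[i]) / h[i] - (ys[i] - ys[i - 1]) / h[i - 1])
            for i in range(1, n)
        ]
        for k in range(1, n - 1):  # forward elimination
            w = h[k] / diag[k - 1]
            diag[k] -= w * h[k]
            rhs[k] -= w * rhs[k - 1]
        m[n - 1] = rhs[n - 2] / diag[n - 2]
        for k in range(n - 3, -1, -1):  # back substitution
            m[k + 1] = (rhs[k] - h[k + 1] * m[k + 2]) / diag[k]

    def evaluate(x):
        # Locate the interval [xs[i], xs[i+1]] containing x (clamped to valid range).
        i = min(max(bisect_right(xs, x) - 1, 0), n - 1)
        a, b = xs[i + 1] - x, x - xs[i]
        return (
            (m[i] * a ** 3 + m[i + 1] * b ** 3) / (6.0 * h[i])
            + (ys[i] / h[i] - m[i] * h[i] / 6.0) * a
            + (ys[i + 1] / h[i] - m[i + 1] * h[i] / 6.0) * b
        )

    return evaluate


def fill_missing(days, values):
    """Fill None entries that lie strictly between known samples.

    days: strictly increasing day indices; values: readings of one attribute,
    with None marking a missing sample. Gaps outside the known range stay None
    (an assumption of this sketch, to avoid extrapolation).
    """
    known = [(d, float(v)) for d, v in zip(days, values) if v is not None]
    if len(known) < 2:
        return list(values)
    xs = [d for d, _ in known]
    ys = [v for _, v in known]
    spline = natural_cubic_spline(xs, ys)
    return [
        v if v is not None else (spline(d) if xs[0] < d < xs[-1] else None)
        for d, v in zip(days, values)
    ]


if __name__ == "__main__":
    # Hypothetical daily readings of one attribute with two missing days.
    print(fill_missing([0, 1, 2, 3, 4, 5], [10, 12, None, 19, None, 30]))
```

A spline is one plausible choice here because it yields a smooth curve through the known samples rather than the flat segments that forward filling would produce; whether that suits a given attribute depends on how that attribute evolves over time.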
