Robust and Provable Guarantees for Sparse Random Embeddings

Paper and Code

Feb 22, 2022

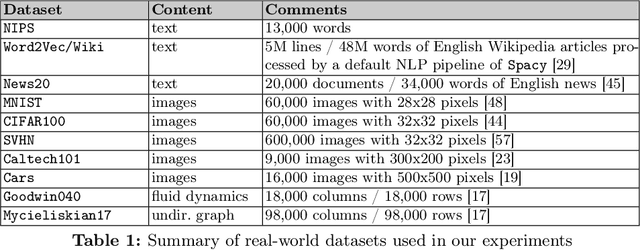

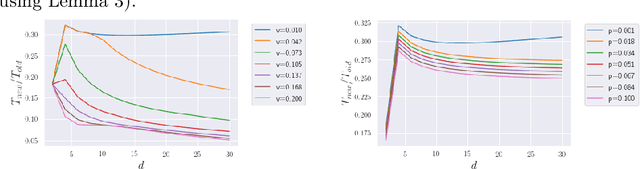

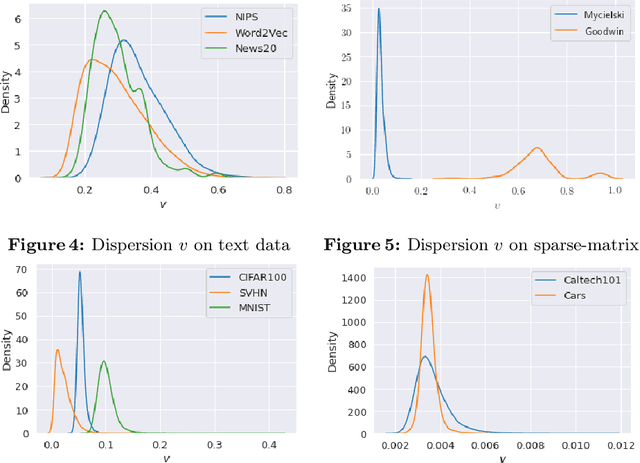

In this work, we improve upon the guarantees for sparse random embeddings, as they were recently provided and analyzed by Freksen at al. (NIPS'18) and Jagadeesan (NIPS'19). Specifically, we show that (a) our bounds are explicit as opposed to the asymptotic guarantees provided previously, and (b) our bounds are guaranteed to be sharper by practically significant constants across a wide range of parameters, including the dimensionality, sparsity and dispersion of the data. Moreover, we empirically demonstrate that our bounds significantly outperform prior works on a wide range of real-world datasets, such as collections of images, text documents represented as bags-of-words, and text sequences vectorized by neural embeddings. Behind our numerical improvements are techniques of broader interest, which improve upon key steps of previous analyses in terms of (c) tighter estimates for certain types of quadratic chaos, (d) establishing extreme properties of sparse linear forms, and (e) improvements on bounds for the estimation of sums of independent random variables.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge