RIANN -- A Robust Neural Network Outperforms Attitude Estimation Filters

Paper and Code

May 05, 2021

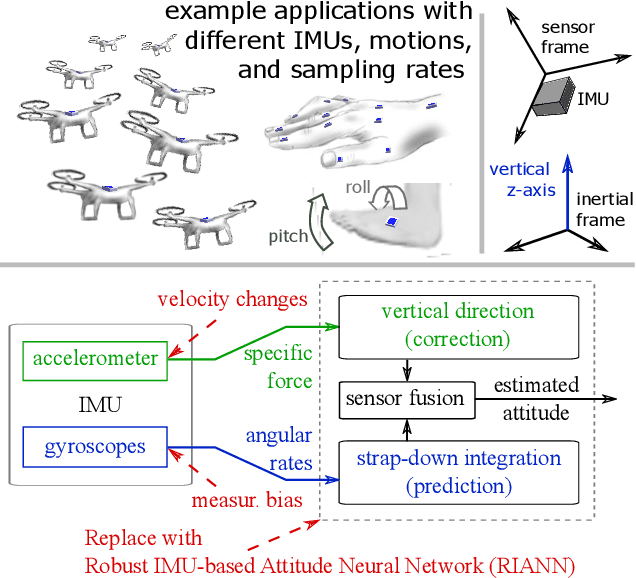

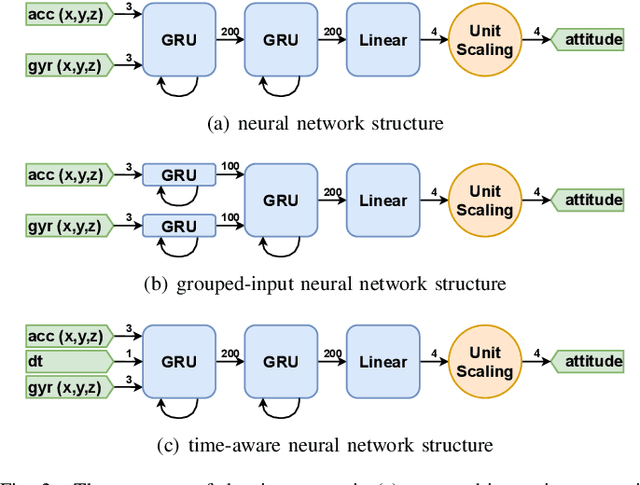

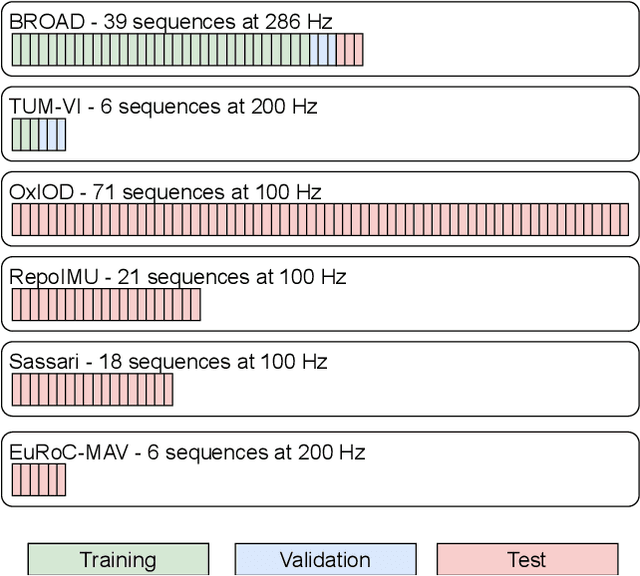

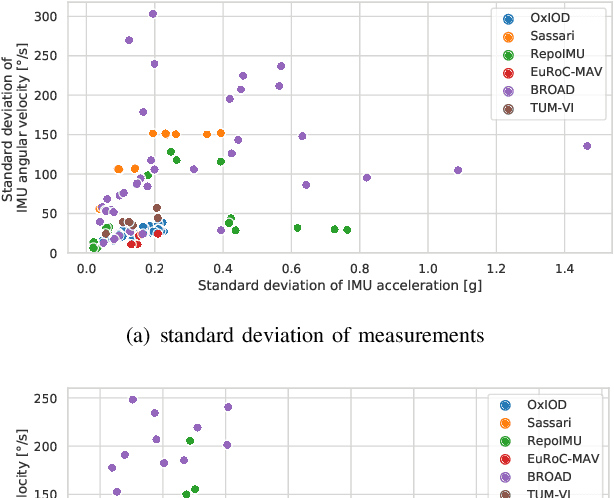

Inertial-sensor-based attitude estimation is a crucial technology in various applications, from human motion tracking to autonomous aerial and ground vehicles. Application scenarios differ in characteristics of the performed motion, presence of disturbances, and environmental conditions. Since state-of-the-art attitude estimators do not generalize well over these characteristics, their parameters must be tuned for the individual motion characteristics and circumstances. We propose RIANN, a real-time-capable neural network for robust IMU-based attitude estimation, which generalizes well across different motion dynamics, environments, and sampling rates, without the need for application-specific adaptations. We exploit two publicly available datasets for the method development and the training, and we add four completely different datasets for evaluation of the trained neural network in three different test scenarios with varying practical relevance. Results show that RIANN performs at least as well as state-of-the-art attitude estimation filters and outperforms them in several cases, even if the filter is tuned on the very same test dataset itself while RIANN has never seen data from that dataset, from the specific application, the same sensor hardware, or the same sampling frequency before. RIANN is expected to enable plug-and-play solutions in numerous applications, especially when accuracy is crucial but no ground-truth data is available for tuning or when motion and disturbance characteristics are uncertain. We made RIANN publicly available.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge