Reward Systems for Trustworthy Medical Federated Learning

Paper and Code

May 01, 2022

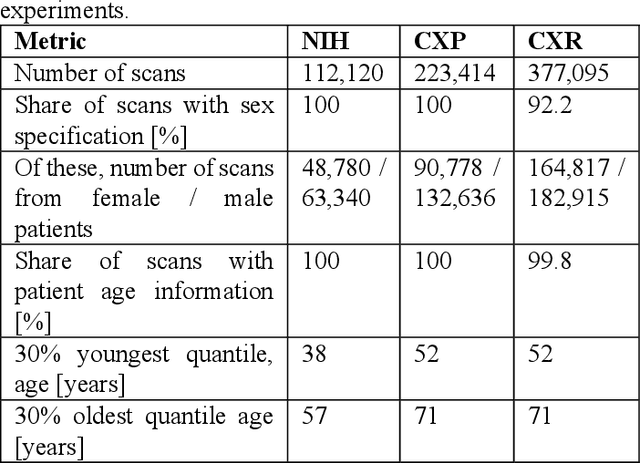

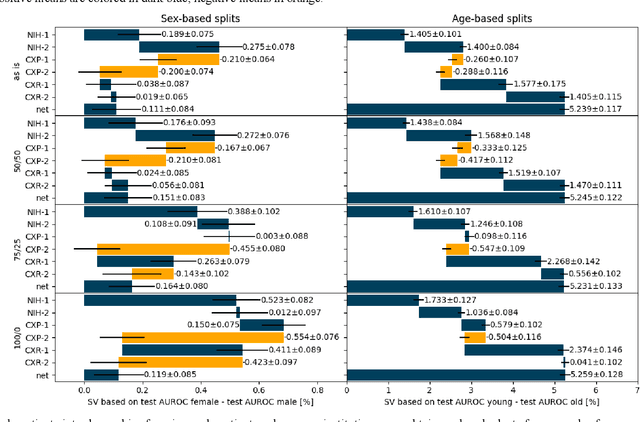

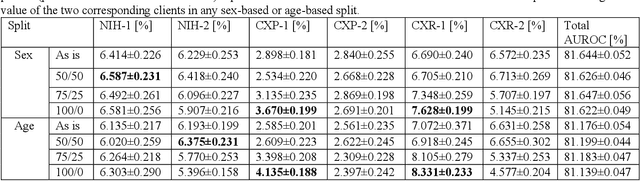

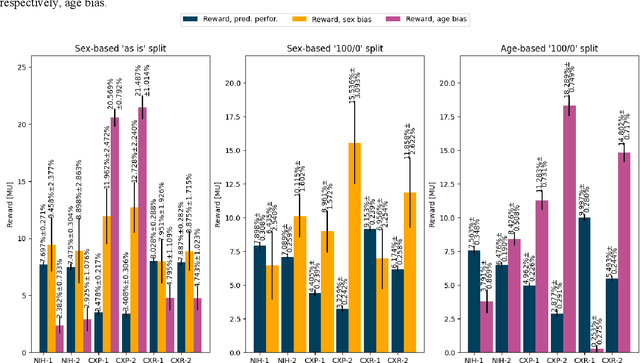

Federated learning (FL) has received high interest from researchers and practitioners to train machine learning (ML) models for healthcare. Ensuring the trustworthiness of these models is essential. Especially bias, defined as a disparity in the model's predictive performance across different subgroups, may cause unfairness against specific subgroups, which is an undesired phenomenon for trustworthy ML models. In this research, we address the question to which extent bias occurs in medical FL and how to prevent excessive bias through reward systems. We first evaluate how to measure the contributions of institutions toward predictive performance and bias in cross-silo medical FL with a Shapley value approximation method. In a second step, we design different reward systems incentivizing contributions toward high predictive performance or low bias. We then propose a combined reward system that incentivizes contributions toward both. We evaluate our work using multiple medical chest X-ray datasets focusing on patient subgroups defined by patient sex and age. Our results show that we can successfully measure contributions toward bias, and an integrated reward system successfully incentivizes contributions toward a well-performing model with low bias. While the partitioning of scans only slightly influences the overall bias, institutions with data predominantly from one subgroup introduce a favorable bias for this subgroup. Our results indicate that reward systems, which focus on predictive performance only, can transfer model bias against patients to an institutional level. Our work helps researchers and practitioners design reward systems for FL with well-aligned incentives for trustworthy ML.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge