Rethinking Learning Dynamics in RL using Adversarial Networks

Paper and Code

Jan 27, 2022

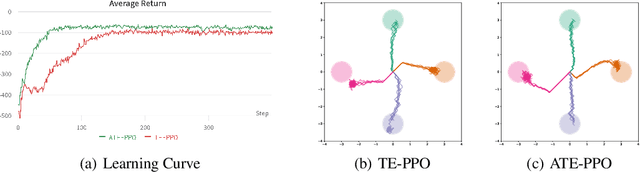

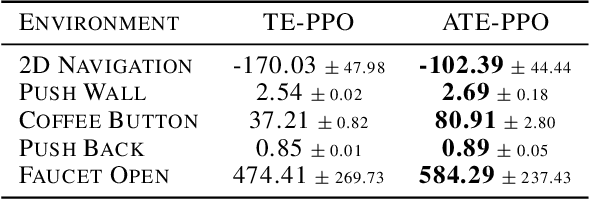

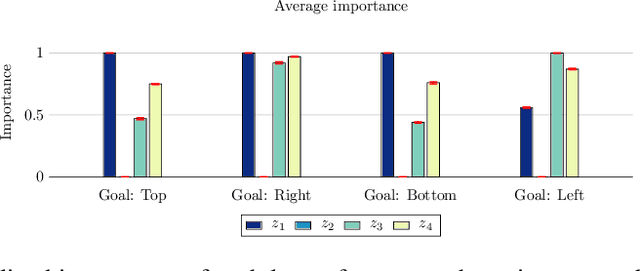

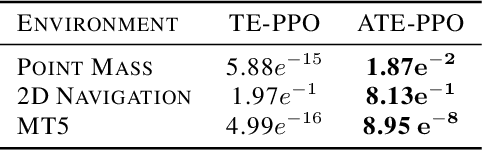

We present a learning mechanism for reinforcement learning of closely related skills parameterized via a skill embedding space. Our approach is grounded on the intuition that nothing makes you learn better than a coevolving adversary. The main contribution of our work is to formulate an adversarial training regime for reinforcement learning with the help of entropy-regularized policy gradient formulation. We also adapt existing measures of causal attribution to draw insights from the skills learned. Our experiments demonstrate that the adversarial process leads to a better exploration of multiple solutions and understanding the minimum number of different skills necessary to solve a given set of tasks.

View paper on

OpenReview

OpenReview

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge