Rethinking Graph Convolutional Networks in Knowledge Graph Completion

Feb 08, 2022

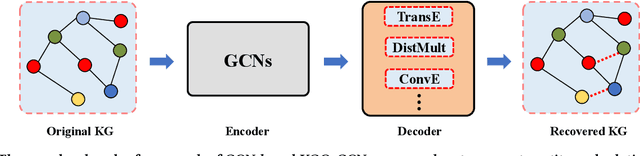

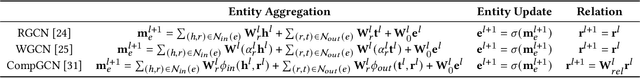

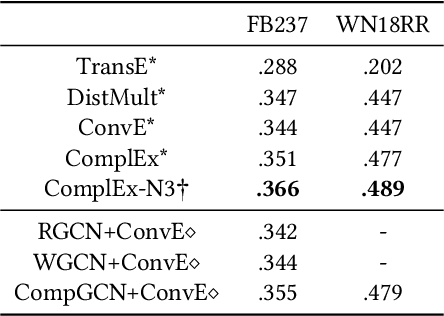

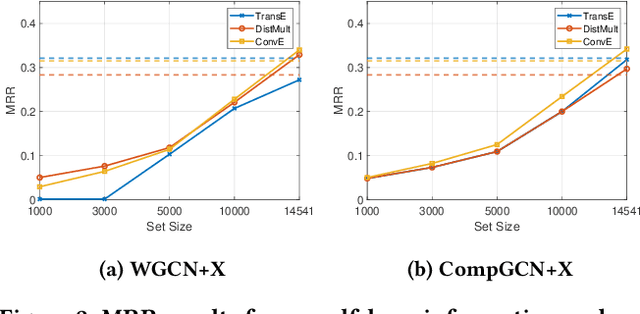

Graph convolutional networks (GCNs), which are effective in modeling graph structures, have become increasingly popular in knowledge graph completion (KGC). GCN-based KGC models first use GCNs to generate expressive entity representations and then use knowledge graph embedding (KGE) models to capture the interactions among entities and relations. However, many GCN-based KGC models fail to outperform state-of-the-art KGE models despite introducing additional computational complexity. This phenomenon motivates us to explore the real effect of GCNs in KGC. In this paper, we therefore build upon representative GCN-based KGC models and introduce variants to identify which factor of GCNs is critical in KGC. Surprisingly, our experiments show that the graph structure modeling in GCNs does not have a significant impact on the performance of KGC models, contrary to common belief. Instead, the transformations applied to entity representations are responsible for the performance improvements. Based on this observation, we propose a simple yet effective framework named LTE-KGE, which equips existing KGE models with linearly transformed entity embeddings. Experiments demonstrate that LTE-KGE models achieve performance improvements similar to those of GCN-based KGC methods while being more computationally efficient. These results suggest that existing GCNs are unnecessary for KGC, and that novel GCN-based KGC models should rely on more ablation studies to validate their effectiveness. The code for all experiments is available on GitHub at https://github.com/MIRALab-USTC/GCN4KGC.
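To make the idea of "linearly transformed entity embeddings" concrete, the following is a minimal sketch and not the authors' implementation. It assumes a TransE-style distance as the base KGE scoring function and a single matrix `W` shared by head and tail entities; the abstract specifies neither choice, and the helper names (`linear_transform`, `transe_score`, `lte_score`) and toy parameters are hypothetical. The released code in the repository above may differ in all of these details.

```python
import random


def linear_transform(W, x):
    """Return the matrix-vector product W x (W is a list of rows)."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]


def transe_score(h, r, t):
    """Assumed base KGE score: negative L1 distance between h + r and t."""
    return -sum(abs(h_i + r_i - t_i) for h_i, r_i, t_i in zip(h, r, t))


def lte_score(W, h, r, t):
    """Score a triple after applying the same linear map to head and tail.

    No graph aggregation over neighbours happens here; the entity
    embeddings are only passed through a linear transformation.
    """
    return transe_score(linear_transform(W, h), r, linear_transform(W, t))


# Hypothetical toy parameters; in a real model these would be learned.
rng = random.Random(0)
dim = 4
W = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)]
h = [rng.uniform(-1, 1) for _ in range(dim)]
r = [rng.uniform(-1, 1) for _ in range(dim)]
t = [rng.uniform(-1, 1) for _ in range(dim)]

# With the identity matrix the sketch reduces to the plain base KGE model.
identity = [[1.0 if i == j else 0.0 for j in range(dim)] for i in range(dim)]

print("transformed score:", lte_score(W, h, r, t))
print("matches base model under identity:",
      lete := lte_score(identity, h, r, t) == transe_score(h, r, t))
```

In this sketch the only additional cost over the base model is one matrix-vector product per entity, which is consistent with the abstract's claim that the approach is cheaper than running a GCN encoder.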
