Respiratory Motion Correction in Abdominal MRI using a Densely Connected U-Net with GAN-guided Training


Jun 24, 2019

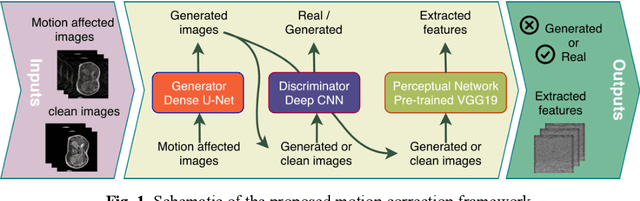

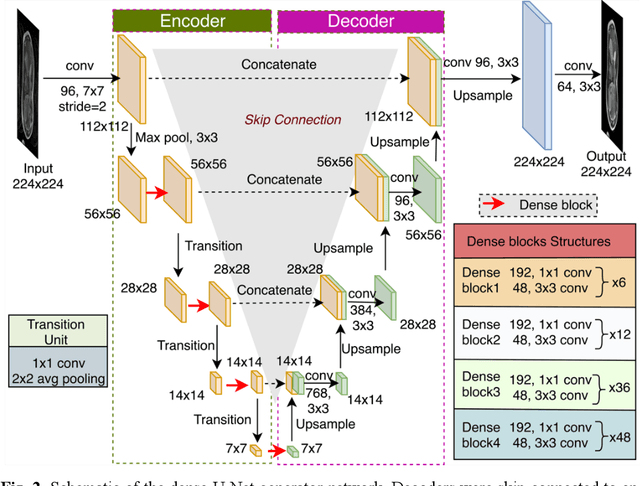

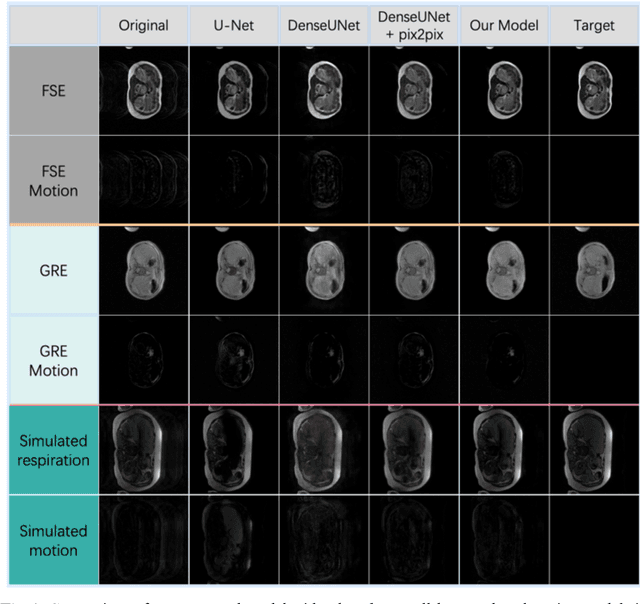

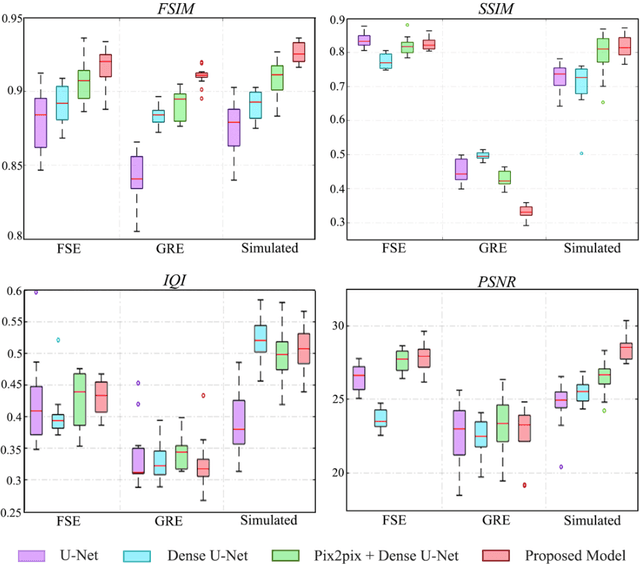

Abdominal magnetic resonance imaging (MRI) provides a straightforward way to characterize tissue and locate lesions in standard diagnosis. However, abdominal MRI often suffers from respiratory motion artifacts, which cause blurring and ghosting that significantly degrade image quality. Conventional methods to reduce or eliminate these artifacts include breath holding, patient sedation, respiratory gating, and image post-processing, but these strategies inevitably involve extra scanning time and patient discomfort. In this paper, we propose a novel deep-learning-based model to recover MR images from respiratory motion artifacts. The proposed model comprises a densely connected U-Net with generative adversarial network (GAN)-guided training and a perceptual loss function. We validate the model on a diverse collection of MRI data affected by both synthetic and authentic respiration artifacts, and demonstrate effective motion removal. Our experimental results show the great potential of deep-learning-based methods for respiratory motion correction in abdominal MRI.
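The abstract does not say how the synthetic respiration artifacts were produced. As an illustration only, the sketch below shows one common way to imitate such artifacts: each phase-encode line of k-space is given a phase ramp corresponding to a periodic in-plane translation, which yields ghosting and blurring along that axis. The function name `simulate_respiratory_motion`, the sinusoidal breathing model, the sequential line ordering, and the parameters `amplitude_px` and `cycles` are all assumptions made here, not details taken from the paper.

```python
import numpy as np


def simulate_respiratory_motion(image, amplitude_px=3.0, cycles=5.0):
    """Imitate respiratory ghosting on a 2D magnitude image.

    Hypothetical helper, not the paper's method. Assumes axis 0 is the
    phase-encode direction, k-space lines are acquired sequentially from
    -k_max to +k_max, and the object translates rigidly along axis 0
    following a sinusoid of `amplitude_px` pixels that completes
    `cycles` periods during the acquisition.
    """
    image = np.asarray(image, dtype=float)
    n_pe = image.shape[0]

    # Centered k-space, so row index matches the assumed acquisition order.
    kspace = np.fft.fftshift(np.fft.fft2(image))

    # Spatial frequency of each row in cycles per pixel, in the same order.
    ky = np.fft.fftshift(np.fft.fftfreq(n_pe))

    # Displacement of the object at the time each row is acquired.
    t = np.arange(n_pe) / n_pe
    displacement = amplitude_px * np.sin(2.0 * np.pi * cycles * t)

    # Fourier shift theorem: a translation by d multiplies the row at
    # frequency ky by exp(-2*pi*i*ky*d). Each row gets its own d.
    phase = np.exp(-2j * np.pi * ky * displacement)
    corrupted_kspace = kspace * phase[:, np.newaxis]

    # Back to image space; keep the magnitude, as in a reconstructed MR image.
    return np.abs(np.fft.ifft2(np.fft.ifftshift(corrupted_kspace)))


if __name__ == "__main__":
    # Toy phantom: a bright rectangle on a dark background.
    phantom = np.zeros((64, 64))
    phantom[20:44, 24:40] = 1.0
    corrupted = simulate_respiratory_motion(phantom)
    print("mean absolute change:", np.abs(corrupted - phantom).mean())
```

Pairs of clean and corrupted images generated this way could serve as training input and target for an artifact-removal network. Real respiratory motion is non-rigid and depends on the acquisition ordering, so this rigid-translation model is only a rough stand-in.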
