ReorientBot: Learning Object Reorientation for Specific-Posed Placement

Feb 22, 2022

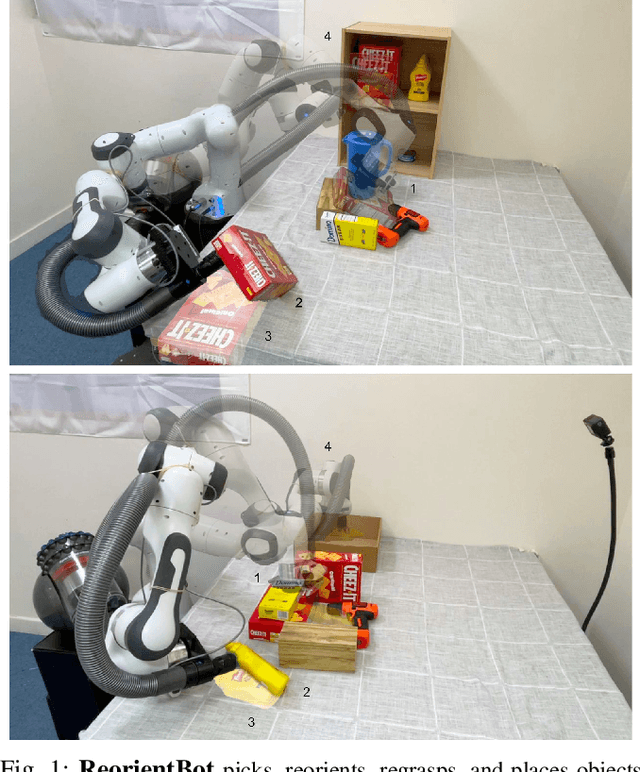

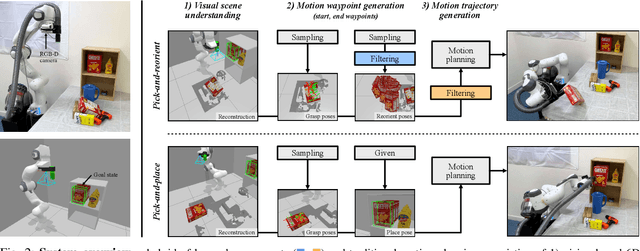

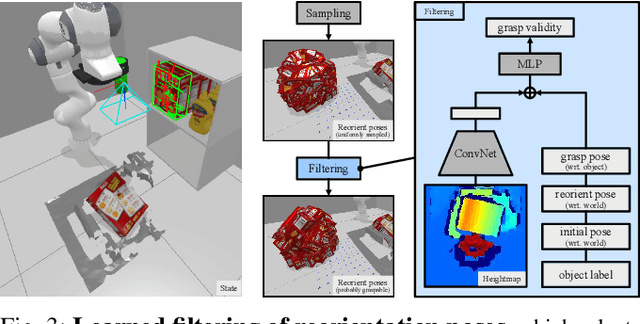

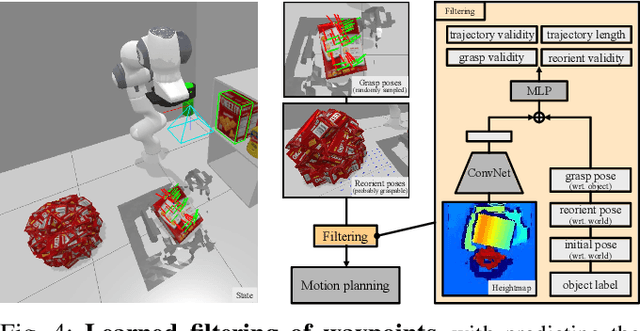

Robots need the capability of placing objects in arbitrary, specific poses to rearrange the world and achieve various valuable tasks. Object reorientation plays a crucial role in this as objects may not initially be oriented such that the robot can grasp and then immediately place them in a specific goal pose. In this work, we present a vision-based manipulation system, ReorientBot, which consists of 1) visual scene understanding with pose estimation and volumetric reconstruction using an onboard RGB-D camera; 2) learned waypoint selection for successful and efficient motion generation for reorientation; 3) traditional motion planning to generate a collision-free trajectory from the selected waypoints. We evaluate our method using the YCB objects in both simulation and the real world, achieving 93% overall success, 81% improvement in success rate, and 22% improvement in execution time compared to a heuristic approach. We demonstrate extended multi-object rearrangement showing the general capability of the system.
