Releasing Graph Neural Networks with Differential Privacy Guarantees


Sep 18, 2021

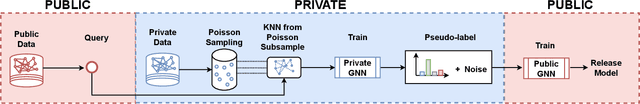

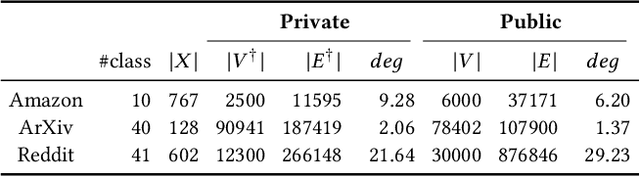

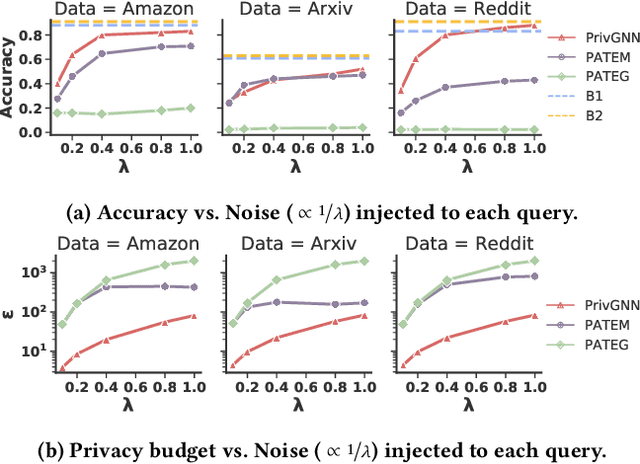

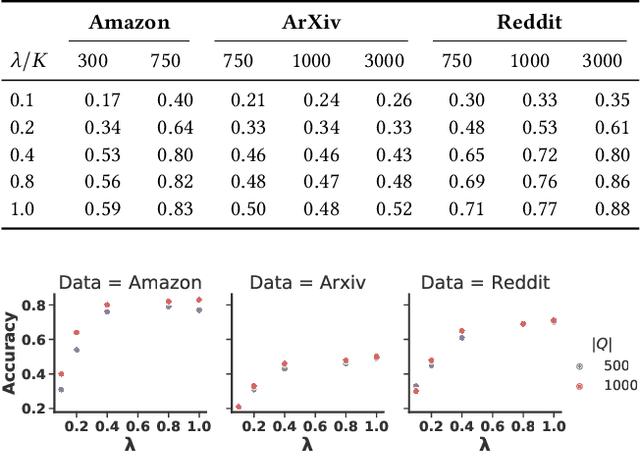

With the increasing popularity of Graph Neural Networks (GNNs) in sensitive applications such as healthcare and medicine, concerns have been raised over the privacy of trained GNNs. Notably, GNNs are vulnerable to privacy attacks, such as membership inference attacks, even when only black-box access to the trained model is granted. To build defenses, differential privacy has emerged as a mechanism to disguise the sensitive data in training datasets. Following the strategy of Private Aggregation of Teacher Ensembles (PATE), recent methods leverage a large ensemble of teacher models. These teachers are trained on disjoint subsets of private data and are employed to transfer knowledge to a student model, which is then released with privacy guarantees. However, splitting graph data into many disjoint training sets may destroy the structural information and adversely affect accuracy. We propose a new graph-specific scheme for releasing a student GNN that avoids splitting the private training data altogether. The student GNN is trained on public data, part of which is labeled privately using teacher GNN models, each trained exclusively for a single query node. We theoretically analyze our approach in the Rényi differential privacy framework and provide privacy guarantees. We also demonstrate solid experimental performance of our method compared with several baselines, including the PATE baseline adapted for graph-structured data. Our anonymized code is available.
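To make the PATE baseline and the Rényi DP accounting mentioned above more concrete, here is a minimal sketch of a PATE-style private labeling step. It assumes the Gaussian noisy-max aggregator (GNMax) over teacher votes and its standard data-independent Rényi DP bound; it is not the authors' graph-specific mechanism, which avoids disjoint teacher partitions. The function names (`gnmax_label`, `gnmax_rdp`, `rdp_to_dp_epsilon`), the noise scale `sigma`, and the range of Rényi orders are hypothetical choices for illustration.

```python
import math

import numpy as np


def gnmax_label(teacher_votes, num_classes, sigma, rng):
    """PATE-style Gaussian noisy-max (GNMax) label for one query node.

    teacher_votes: 1-D array of non-negative ints, the class each teacher predicts.
    Returns the class with the largest vote count after adding Gaussian noise.
    """
    counts = np.bincount(teacher_votes, minlength=num_classes).astype(float)
    counts += rng.normal(loc=0.0, scale=sigma, size=num_classes)
    return int(np.argmax(counts))


def gnmax_rdp(alpha, sigma):
    """Data-independent RDP of one GNMax answer at order alpha.

    With disjoint teacher partitions, changing one private example changes at
    most one vote, so the vote histogram has L2 sensitivity sqrt(2). The Gaussian
    mechanism then gives alpha * 2 / (2 * sigma**2) = alpha / sigma**2; taking the
    argmax is post-processing and costs no extra privacy.
    """
    return alpha / sigma**2


def rdp_to_dp_epsilon(num_queries, sigma, delta, orders=range(2, 256)):
    """Compose num_queries GNMax answers and convert to (epsilon, delta)-DP.

    RDP composes additively at each order alpha; the standard conversion is
    epsilon = rdp(alpha) + log(1/delta) / (alpha - 1), minimized over alpha.
    """
    return min(
        num_queries * gnmax_rdp(a, sigma) + math.log(1.0 / delta) / (a - 1)
        for a in orders
    )


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical example: 100 teachers, 3 classes, most teachers vote for class 2.
    votes = np.array([2] * 80 + [0] * 15 + [1] * 5)
    print("noisy label:", gnmax_label(votes, num_classes=3, sigma=40.0, rng=rng))
    # Privacy cost of labeling 100 public nodes this way.
    print("epsilon:", rdp_to_dp_epsilon(num_queries=100, sigma=40.0, delta=1e-5))
```

The noise scale trades label quality against privacy: a larger `sigma` lowers the per-query RDP cost but makes noisy labels wrong more often, and every additional privately labeled public node adds to the composed budget.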
