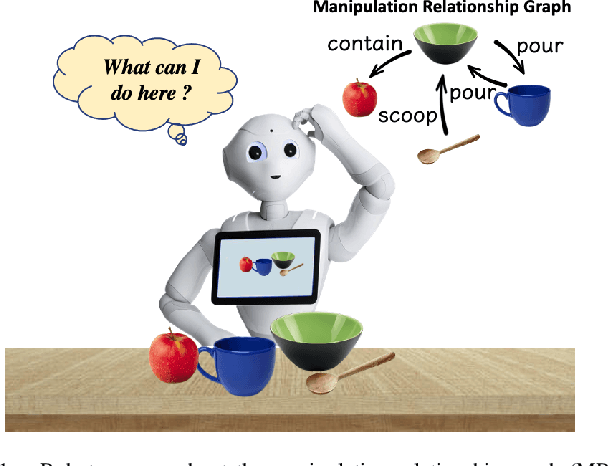

Relationship Oriented Affordance Learning through Manipulation Graph Construction

Nov 01, 2021

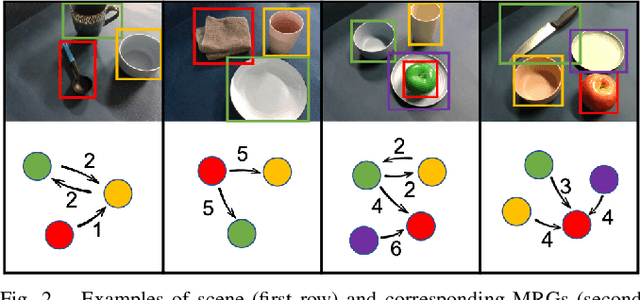

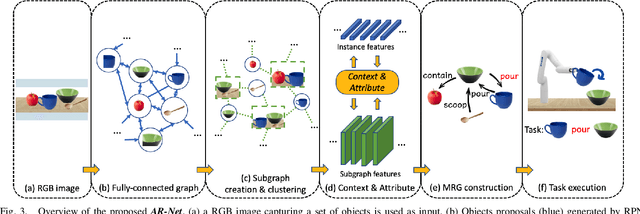

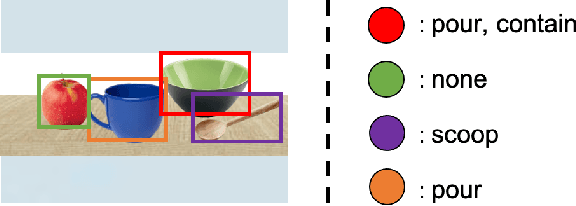

In this paper, we propose the Manipulation Relationship Graph (MRG), a novel affordance representation that captures the underlying manipulation relationships of an arbitrary scene. To construct such a graph from raw visual observations, a deep neural network named AR-Net is introduced. It consists of an Attribute module and a Context module, which guide relationship learning at the object and subgraph levels, respectively. We quantitatively validate our method on a novel manipulation relationship dataset named SMRD. To evaluate the performance of the proposed model and representation, both visual perception and physical manipulation experiments are conducted. Overall, AR-Net along with MRG outperforms all baselines, achieving success rates of 88.89% on task relationship recognition (TRR) and 73.33% on task completion (TC).
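To make the idea of a relationship graph concrete, the following is a minimal illustrative sketch, not the MRG definition from the paper. It assumes a scene can be modeled as a directed graph whose nodes are objects and whose labeled edges are manipulation relationships. The class name `ManipulationGraph`, its methods, the object names, and the relation labels (`stir`, `pour_into`) are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class ManipulationGraph:
    """Toy directed graph: nodes are objects, labeled edges are relationships.

    Hypothetical structure for illustration; the paper's MRG may differ.
    """
    # edges[subject] -> list of (relation_label, target_object)
    edges: dict = field(default_factory=lambda: defaultdict(list))
    nodes: set = field(default_factory=set)

    def add_relationship(self, subject, relation, target):
        """Record that `subject` can act on `target` via `relation`."""
        self.nodes.update((subject, target))
        self.edges[subject].append((relation, target))

    def relationships_of(self, subject):
        """Return all (relation, target) pairs that start at `subject`."""
        return list(self.edges.get(subject, []))


# Hypothetical scene: object names and relation labels are assumptions.
graph = ManipulationGraph()
graph.add_relationship("spoon", "stir", "cup")
graph.add_relationship("kettle", "pour_into", "cup")

print(graph.relationships_of("spoon"))
```

In this sketch, a model such as AR-Net would play the role of predicting which edges to add from an image; here the edges are entered by hand.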
